Operating a cluster

With some exceptions, you can operate a cluster in much the same way as you operate a standalone FortiGate unit. This chapter describes those exceptions and also the similarities involved in operating a cluster instead of a standalone FortiGate unit.

Operating a cluster

The configurations of all of the FortiGate units in a cluster are synchronized so that the cluster units can simulate a single FortiGate unit. Because of this synchronization, you manage the HA cluster instead of managing the individual cluster units. You manage the cluster by connecting to the web-based manager using any cluster interface configured for HTTPS or HTTP administrative access. You can also manage the cluster by connecting to the CLI using any cluster interface configured for SSH or telnet administrative access.

The cluster web-based manager dashboard displays the cluster name, the host name and serial number of each cluster member, and also shows the role of each unit in the cluster. The roles can be master (primary unit) and slave (subordinate units). The dashboard also displays a cluster unit front panel illustration.

You can also go to System > Config > HA to view the cluster members list. This includes status information for each cluster unit. You can also use the cluster members list for a number of cluster management functions including changing the HA configuration of an operating cluster, changing the host name and device priority of a subordinate unit, and disconnecting a cluster unit from a cluster. See Cluster members list.

You can use log messages to view information about the status of the cluster. See Clusters and logging. You can use SNMP to manage the cluster by configuring a cluster interface for SNMP administrative access. Using an SNMP manager you can get cluster configuration information and receive traps.

You can configure a reserved management interface to manage individual cluster units. You can use this interface to access the web-based manager or CLI and to configure SNMP management for individual cluster units. See Managing individual cluster units using a reserved management interface.

You can manage individual cluster units by using SSH, telnet, or the CLI console on the web-based manager dashboard to connect to the CLI of the cluster. From the CLI you can use the execute ha manage command to connect to the CLI of any unit in the cluster.

You can also manage individual cluster units by using a null-modem cable to connect to any cluster unit CLI. From there you can use the execute ha manage command to connect to the CLI of each unit in the cluster.

Operating a virtual cluster

Managing a virtual cluster is very similar to managing a cluster that does not contain multiple virtual domains. Most of the information in this chapter applies to managing both kinds of clusters. This section describes what is different when managing a virtual cluster.

If virtual domains are enabled, the cluster web-based manager dashboard displays the cluster name and the role of each cluster unit in virtual cluster 1 and virtual cluster 2.

The configuration and maintenance options that you have when you connect to a virtual cluster web-based manager or CLI depend on the virtual domain that you connect to and the administrator account that you use to connect.

If you connect to a cluster as the administrator of a virtual domain, you connect directly to the virtual domain. Since HA virtual clustering is a global configuration, virtual domain administrators cannot see HA configuration options. However, virtual domain administrators see the host name of the cluster unit that they are connecting to on the web browser title bar or CLI prompt. This host name is the host name of the primary unit for the virtual domain. Also, when viewing log messages the virtual domain administrator can select to view log messages for either of the cluster units.

If you connect to a virtual cluster as the admin administrator you connect to the global web-based manager or CLI. Even so, you are connecting to an interface and to the virtual domain that the interface has been added to. The virtual domain that you connect to does not make a difference for most configuration and maintenance operations. However, there are a few exceptions. You connect to the FortiGate unit that functions as the primary unit for the virtual domain. So the host name displayed on the web browser title bar and on the CLI is the host name of this primary unit.

Managing individual cluster units using a reserved management interface

You can provide direct management access to all cluster units by reserving a management interface as part of the HA configuration. Once this management interface is reserved, you can configure a different IP address, administrative access and other interface settings for this interface for each cluster unit. Then by connecting this interface of each cluster unit to your network you can manage each cluster unit separately from a different IP address. Configuration changes to the reserved management interface are not synchronized to other cluster units.

The reserved management interface provides direct management access to each cluster unit and gives each cluster unit a different identity on your network. This simplifies using external services, such as SNMP, to separately monitor and manage each cluster unit.

The reserved management interface is not assigned an HA virtual MAC address like other cluster interfaces. Instead the reserved management interface retains the permanent hardware address of the physical interface unless you change it using the config system interface command.

The reserved management interface and IP address should not be used for managing a cluster using FortiManager. To correctly manage a FortiGate HA cluster with FortiManager use the IP address of one of the cluster unit interfaces.

If you enable SNMP administrative access for the reserved management interface you can use SNMP to monitor each cluster unit using the reserved management interface IP address. To monitor each cluster unit using SNMP, just add the IP address of each cluster unit’s reserved management interface to the SNMP server configuration. You must also enable direct management of cluster members in the cluster SNMP configuration.

If you enable HTTPS or HTTP administrative access for the reserved management interfaces you can connect to the web-based manager of each cluster unit. Any configuration changes made to any of the cluster units are automatically synchronized to all cluster units. From the subordinate units the web-based manager has the same features as the primary unit except that unit-specific information is displayed for the subordinate unit, for example:

- The Dashboard System Information widget displays the subordinate unit serial number but also displays the same information about the cluster as the primary unit

- On the Cluster members list (go to System > Config > HA) you can change the HA configuration of the subordinate unit that you are logged into. For the primary unit and other subordinate units you can change only the host name and device priority.

- Log Access displays the logs of the subordinate unit that you are logged into first. You can use the HA Cluster list to view the log messages of other cluster units, including the primary unit.

If you enable SSH or telnet administrative access for the reserved management interfaces you can connect to the CLI of each cluster unit. The CLI prompt contains the host name of the cluster unit that you have connected to. Any configuration changes made to any of the cluster units are automatically synchronized to all cluster units. You can also use the execute ha manage command to connect to other cluster unit CLIs.

The reserved management interface is available in NAT/Route and in Transparent mode. It is also available if the cluster is operating with multiple VDOMs. In Transparent mode you cannot normally add an IP address to an interface. However, you can add an IP address to the reserved management interface.

Configuring the reserved management interface and SNMP remote management of individual cluster units

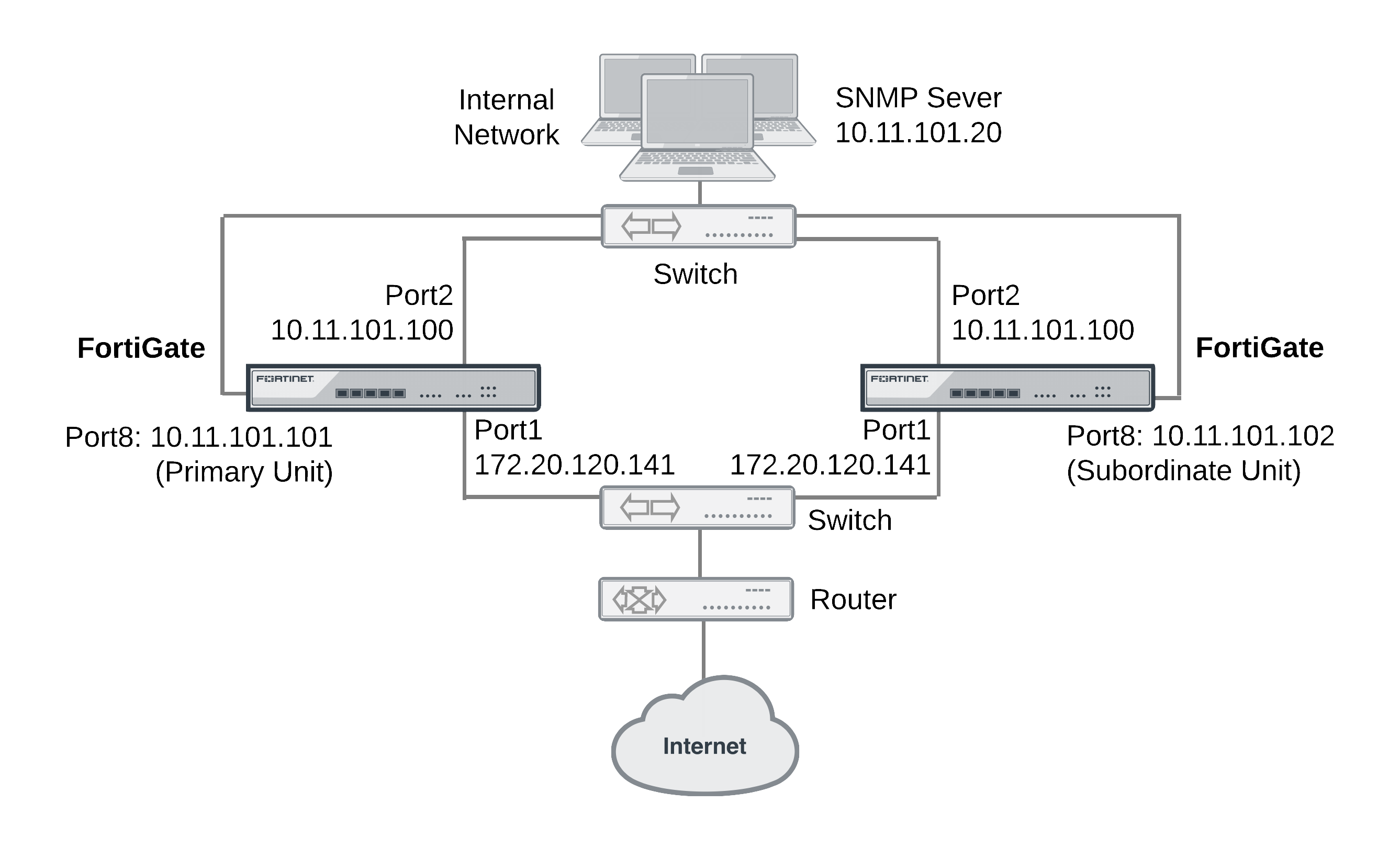

This example describes how to configure SNMP remote management of individual cluster units using the HA reserved management interface. The configuration consists of two FortiGate-620B units already operating as a cluster. In the example, the port8 interface of each cluster unit is connected to the internal network using the switch and configured as the reserved management interface.

SNMP remote management of individual cluster units

To configure the reserved management interface - web-based manager

- Go to System > Config > HA.

- Edit the primary unit.

- Select Reserve Management Port for Cluster Member and select port8.

- Select OK.

To configure the reserved management interface - CLI

From the CLI you can also configure IPv4 and IPv6 default routes that are only used by the reserved management interface.

- Log into the CLI of any cluster unit.

- Enter the following command to enable the reserved management interface, set port8 as the reserved interface, and add an IPv4 default route of 10.11.101.2 and an IPv6 default route of 2001:db8:0:2::20 for the reserved management interface.

config system ha

set ha-mgmt-status enable

set ha-mgmt-interface port8

set ha-mgmt-interface-gateway 10.11.101.2

set ha-mgmt-interface-gateway6 2001:db8:0:2::20

end

The reserved management interface default route is not synchronized to other cluster units.

To change the primary unit reserved management interface configuration - web-based manager

You can change the IP address of the primary unit reserved management interface from the primary unit web-based manager. Configuration changes to the reserved management interface are not synchronized to other cluster units.

- From a PC on the internal network, browse to http://10.11.101.100 and log into the cluster web-based manager.

This logs you into the primary unit web-based manager.

You can identify the primary unit from its serial number or host name that appears on the System Information dashboard widget.

- Go to System > Network > Interfaces and edit the port8 interface as follows:

| Alias | primary_reserved |

| IP/Netmask | 10.11.101.101/24 |

| Administrative Access | Ping, SSH, HTTPS, SNMP |

- Select OK.

You can now log into the primary unit web-based manager by browsing to https://10.11.101.101. You can also log into this primary unit CLI by using an SSH client to connect to 10.11.101.101.

To change subordinate unit reserved management interface configuration - CLI

At this point you cannot connect to the subordinate unit reserved management interface because it does not have an IP address. Instead, this procedure describes connecting to the primary unit CLI and using the execute ha manage command to connect to subordinate unit CLI to change the port8 interface. You can also use a serial connection to the cluster unit CLI. Configuration changes to the reserved management interface are not synchronized to other cluster units.

- Connect to the primary unit CLI and use the execute ha manage command to connect to a subordinate unit CLI.

You can identify the subordinate unit from its serial number or host name. The host name appears in the CLI prompt.

- Enter the following command to change the port8 IP address to 10.11.101.102 and set management access to HTTPS, ping, SSH, and SNMP.

config system interface

edit port8

set ip 10.11.101.102/24

set allowaccess https ping ssh snmp

end

You can now log into the subordinate unit web-based manager by browsing to https://10.11.101.102. You can also log into this subordinate unit CLI by using an SSH client to connect to 10.11.101.102.
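Because reserved management interface settings are not synchronized, each cluster unit needs its own copy of the interface commands with its own address. The following is a minimal sketch that renders those commands as text for each unit in this example. The function name `render_mgmt_interface` and the role labels are hypothetical; the script only builds strings to paste into each unit's CLI and does not connect to a FortiGate unit.

```python
# Hypothetical helper: renders the per-unit CLI text used in this example.
# It only builds strings; it does not connect to any FortiGate unit.

def render_mgmt_interface(interface, ip_with_mask, access):
    """Return the 'config system interface' commands for one cluster unit."""
    return "\n".join([
        "config system interface",
        f"edit {interface}",
        f"set ip {ip_with_mask}",
        "set allowaccess " + " ".join(access),
        "end",
    ])

# Addresses taken from the example in this section.
units = {
    "primary": "10.11.101.101/24",
    "subordinate": "10.11.101.102/24",
}
access = ["https", "ping", "ssh", "snmp"]

for role, address in units.items():
    print(f"# {role} unit")
    print(render_mgmt_interface("port8", address, access))
```

Keeping the addresses in one mapping makes it easier to confirm that no two cluster units are given the same reserved management IP address.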

To configure the cluster for SNMP management using the reserved management interfaces - CLI

This procedure describes how to configure the cluster to allow the SNMP server to get status information from the primary unit and the subordinate unit. The SNMP configuration is synchronized to all cluster units. To support using the reserved management interfaces, you must add at least one HA direct management host to an SNMP community. If your SNMP configuration includes SNMP users with user names and passwords you must also enable HA direct management for SNMP users.

- Enter the following command to add an SNMP community called Community and add a host to the community for the reserved management interface of each cluster unit. The host includes the IP address of the SNMP server (10.11.101.20).

config system snmp community

edit 1

set name Community

config hosts

edit 1

set ha-direct enable

set ip 10.11.101.20

end

end

Enabling ha-direct in non-HA environments makes SNMP unusable.

- Enter the following command to add an SNMP user for the reserved management interface.

config system snmp user

edit 1

set ha-direct enable

set notify-hosts 10.11.101.20

end

Configure other settings as required.

To get CPU, memory, and network usage of each cluster unit using the reserved management IP addresses

From the command line of an SNMP manager, you can use the following SNMP commands to get CPU, memory and network usage information for each cluster unit. In the examples, the community name is Community. The commands use the MIB field names and OIDs listed below.

Enter the following commands to get CPU, memory and network usage information for the primary unit with reserved management IP address 10.11.101.101 using the MIB fields:

snmpget -v2c -c Community 10.11.101.101 fgHaStatsCpuUsage

snmpget -v2c -c Community 10.11.101.101 fgHaStatsMemUsage

snmpget -v2c -c Community 10.11.101.101 fgHaStatsNetUsage

Enter the following commands to get CPU, memory and network usage information for the primary unit with reserved management IP address 10.11.101.101 using the OIDs:

snmpget -v2c -c Community 10.11.101.101 1.3.6.1.4.1.12356.101.13.2.1.1.3.1

snmpget -v2c -c Community 10.11.101.101 1.3.6.1.4.1.12356.101.13.2.1.1.4.1

snmpget -v2c -c Community 10.11.101.101 1.3.6.1.4.1.12356.101.13.2.1.1.5.1

Enter the following commands to get CPU, memory and network usage information for the subordinate unit with reserved management IP address 10.11.101.102 using the MIB fields:

snmpget -v2c -c Community 10.11.101.102 fgHaStatsCpuUsage

snmpget -v2c -c Community 10.11.101.102 fgHaStatsMemUsage

snmpget -v2c -c Community 10.11.101.102 fgHaStatsNetUsage

Enter the following commands to get CPU, memory and network usage information for the subordinate unit with reserved management IP address 10.11.101.102 using the OIDs:

snmpget -v2c -c Community 10.11.101.102 1.3.6.1.4.1.12356.101.13.2.1.1.3.1

snmpget -v2c -c Community 10.11.101.102 1.3.6.1.4.1.12356.101.13.2.1.1.4.1

snmpget -v2c -c Community 10.11.101.102 1.3.6.1.4.1.12356.101.13.2.1.1.5.1
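The commands above follow one pattern per unit and per MIB field, so they can be generated rather than typed. The following is a rough sketch, assuming the addresses, community name, and field names from this example; `usage_commands` is a hypothetical helper that only builds the command strings and does not run them.

```python
# Sketch: list the snmpget commands for every cluster unit and MIB field.
# The addresses, community name, and field names come from the example above.

MGMT_ADDRESSES = {
    "primary": "10.11.101.101",
    "subordinate": "10.11.101.102",
}
FIELDS = ["fgHaStatsCpuUsage", "fgHaStatsMemUsage", "fgHaStatsNetUsage"]

def usage_commands(community, addresses, fields):
    """Return (role, command) pairs, one per unit and MIB field."""
    commands = []
    for role, address in addresses.items():
        for field in fields:
            commands.append(
                (role, f"snmpget -v2c -c {community} {address} {field}")
            )
    return commands

for role, command in usage_commands("Community", MGMT_ADDRESSES, FIELDS):
    print(f"{role}: {command}")
```

Adding another cluster unit to the polling plan then only requires one more entry in `MGMT_ADDRESSES`.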

Managing individual cluster units in a virtual cluster

You can select the HA option Do NOT Synchronize Management VDOM Configuration if you have enabled multiple VDOMs and set a VDOM other than the root VDOM to be the management VDOM. You can select this option to prevent the management VDOM configuration from being synchronized between cluster units in a virtual cluster. This allows you to add an interface to the VDOM in each cluster unit and then to give the interfaces different IP addresses in each cluster unit, allowing you to manage each cluster unit separately.

You can also enable this feature using the following command:

config system ha

set standalone-mgmt-vdom enable

end

This feature must be disabled to manage a cluster using FortiManager.

The primary unit acts as a router for subordinate unit management traffic

HA uses routing and inter-VDOM links to route subordinate unit management traffic through the primary unit to the network. Similar to a standalone FortiGate unit, subordinate units may generate their own management traffic, including:

- DNS queries.

- FortiGuard Web Filtering rating requests.

- Log messages to be sent to a FortiAnalyzer unit, to a syslog server, or to the FortiGuard Analysis and Management Service.

- Log file uploads to a FortiAnalyzer unit.

- Quarantine file uploads to a FortiAnalyzer unit.

- SNMP traps.

- Communication with remote authentication servers (RADIUS, LDAP, TACACS+ and so on)

Subordinate units send this management traffic over the HA heartbeat link to the primary unit. The primary unit forwards the management traffic to its destination. The primary unit also routes replies back to the subordinate unit in the same way.

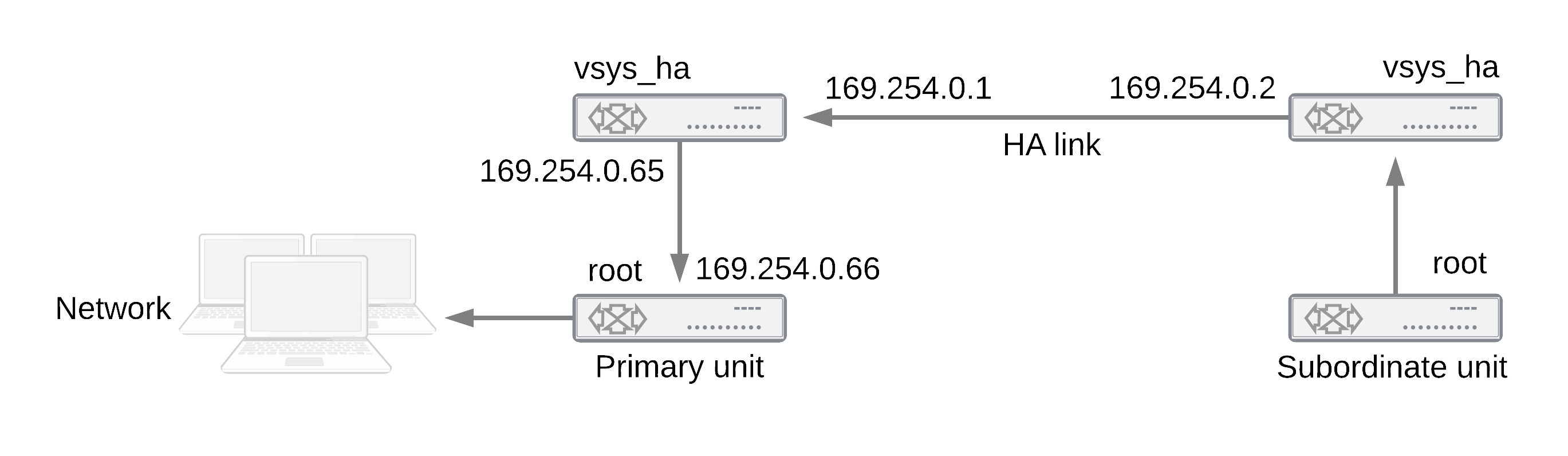

HA uses a hidden VDOM called vsys_ha for HA operations. The vsys_ha VDOM includes the HA heartbeat interfaces, and all communication over the HA heartbeat link goes through the vsys_ha VDOM. To provide communication from a subordinate unit to the network, HA adds hidden inter‑VDOM links between the primary unit management VDOM and the primary unit vsys_ha VDOM. By default, root is the management VDOM.

Management traffic from the subordinate unit originates in the subordinate unit vsys_ha VDOM. The vsys_ha VDOM routes the management traffic over the HA heartbeat link to the primary unit vsys_ha VDOM. This management traffic is then routed to the primary unit management VDOM and from there out onto the network.

DNS queries and FortiGuard Web Filtering and Email Filter requests are still handled by the HA proxy so the primary unit and subordinate units share the same DNS query cache and the same FortiGuard Web Filtering and Email Filter cache. In a virtual clustering configuration, the cluster unit that is the primary unit for the management virtual domain maintains the FortiGuard Web Filtering, Email Filtering, and DNS query cache.

Subordinate unit management traffic path

Cluster communication with RADIUS and LDAP servers

In an active-passive cluster, only the primary unit processes traffic, so the primary unit communicates with RADIUS or LDAP servers. In a cluster that is operating in active-active mode, subordinate units send RADIUS and LDAP requests to the primary unit over the HA heartbeat link and the primary unit routes them to their destination. The primary unit relays the responses back to the subordinate unit.

Clusters and FortiGuard services

This section describes how various FortiGate HA clustering configurations communicate with the FDN.

In an operating cluster, the primary unit communicates directly with the FortiGuard Distribution Network (FDN). Subordinate units also communicate directly with the FDN but as described above, all communication between subordinate units and the FDN is routed through the primary unit.

You must register and license all of the units in a cluster for all required FortiGuard services, both because all cluster units communicate with the FDN and because any cluster unit could potentially become the primary unit.

FortiGuard and active-passive clusters

For an active-passive cluster, only the primary unit processes traffic. Even so, all cluster units communicate with the FDN. Only the primary unit sends FortiGuard Web Filtering and Antispam requests to the FDN. All cluster units receive FortiGuard Antivirus, IPS, and application control updates from the FDN.

In an active-passive cluster the FortiGuard Web Filter and Email Filter caches are located on the primary unit in the same way as for a standalone FortiGate unit. The caches are not shared among cluster units so after a failover the new primary unit must build up new caches.

In an active-passive cluster all cluster units also communicate with the FortiGuard Analysis and Management Service (FAMS).

FortiGuard and active-active clusters

For an active-active cluster, both the primary unit and the subordinate units process traffic. Communication between the cluster units and the FDN is the same as for active-passive clusters with the following exception.

Because the subordinate units process traffic, they may also be making FortiGuard Web Filtering and Email Filter requests. The primary unit receives all such requests from the subordinate units and relays them to the FDN and then relays the FDN responses back to the subordinate units. The FortiGuard Web Filtering and Email Filtering URL caches are maintained on the primary unit. The primary unit caches are used for primary and subordinate unit requests.

FortiGuard and virtual clustering

For a virtual clustering configuration the management virtual domain of each cluster unit communicates with the FDN. The cluster unit that is the primary unit for the management virtual domain maintains the FortiGuard Web Filtering and Email Filtering caches. All FortiGuard Web Filtering and Email Filtering requests are proxied by the management VDOM of the cluster unit that is the primary unit for the management virtual domain.

Clusters and logging

This section describes the log messages that provide information about how HA is functioning, how to view and manage logs for each unit in a cluster, and provides some example log messages that are recorded during specific cluster events.

You configure logging for a cluster in the same way as you configure logging for a standalone FortiGate unit. Log configuration changes made to the cluster are synchronized to all cluster units.

All cluster units record log messages separately to the individual cluster unit’s log disk, to the cluster unit’s system memory, or both. You can view and manage log messages for each cluster unit from the cluster web-based manager Log Access page.

When remote logging is configured, all cluster units send log messages to remote FortiAnalyzer units or other remote servers as configured. HA uses routing and inter-VDOM links to route subordinate unit log traffic through the primary unit to the network.

When you configure a FortiAnalyzer unit to receive log messages from a FortiGate cluster, you should add a cluster to the FortiAnalyzer unit configuration so that the FortiAnalyzer unit can receive log messages from all cluster units.

Viewing and managing log messages for individual cluster units

This section describes how to view and manage log messages for an individual cluster unit.

To view HA cluster log messages

- Log into the cluster web-based manager.

- Go to Log&Report > Log Config > Log Settings > GUI Preferences and select to display logs from Memory, Disk or FortiAnalyzer.

For each log display, the HA Cluster list displays the serial number of the cluster unit for which log messages are displayed. The serial numbers are displayed in order in the list.

- Set HA Cluster to the serial number of one of the cluster units to display log messages for that unit.

About HA event log messages

HA event log messages always include the host name and serial number of the cluster unit that recorded the message. HA event log messages also include the HA state of the unit and also indicate when a cluster unit switches (or moves) from one HA state to another. Cluster units can operate in the HA states listed below:

HA states

| Hello | A FortiGate unit configured for HA operation has started up and is looking for other FortiGate units with which to form a cluster. |

| Work | In an active-passive cluster, the cluster unit is operating as the primary unit. In an active-active cluster, the cluster unit is operating as the primary unit or a subordinate unit. |

| Standby | In an active-passive cluster the cluster unit is operating as a subordinate unit. |

HA event log messages also indicate the virtual cluster that the cluster unit is operating in as well as the member number of the unit in the cluster. If virtual domains are not enabled, all cluster units are always operating in virtual cluster 1. If virtual domains are enabled, a cluster unit may be operating in virtual cluster 1 or virtual cluster 2. The member number indicates the position of the cluster unit in the cluster members list. Member 0 is the primary unit. Member 1 is the first subordinate unit, member 2 is the second subordinate unit, and so on.

HA log messages

See the FortiOS log message reference for a list and descriptions of the HA log messages.

FortiGate HA message "HA master heartbeat interface <intf_name> lost neighbor information"

The following HA log messages may be recorded by an operating cluster:

2009-02-16 11:06:34 device_id=FG2001111111 log_id=0105035001 type=event subtype=ha pri=critical vd=root msg="HA slave heartbeat interface internal lost neighbor information"

2009-02-16 11:06:40 device_id=FG2001111111 log_id=0105035001 type=event subtype=ha pri=notice vd=root msg="Virtual cluster 1 of group 0 detected new joined HA member"

2009-02-16 11:06:40 device_id=FG2001111111 log_id=0105035001 type=event subtype=ha pri=notice vd=root msg="HA master heartbeat interface internal get peer information"

These log messages indicate that the cluster units could not connect to each other over the HA heartbeat link for the period of time that is given by hb-interval x hb-lost-threshold, which is 1.2 seconds with the default values.
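The sample messages above are a timestamp followed by space-separated key=value fields, with the message text in quotes. The following is a minimal parsing sketch under that assumption; `parse_ha_log` is a hypothetical helper, and log formats in other FortiOS versions may differ from these samples.

```python
import shlex

def parse_ha_log(line):
    """Split one log line into a timestamp and a dict of key=value fields."""
    timestamp_parts = []
    fields = {}
    # shlex keeps the quoted msg="..." value together as one token.
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
        else:
            # Tokens without '=' are the leading date and time.
            timestamp_parts.append(token)
    return " ".join(timestamp_parts), fields

sample = ('2009-02-16 11:06:34 device_id=FG2001111111 log_id=0105035001 '
          'type=event subtype=ha pri=critical vd=root '
          'msg="HA slave heartbeat interface internal lost neighbor information"')

timestamp, fields = parse_ha_log(sample)
print(timestamp, fields["pri"], fields["msg"])
```

Once the fields are in a dictionary, filtering for `subtype` equal to `ha` and `pri` equal to `critical` is a simple way to pick out heartbeat loss events from a larger log export.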

To diagnose this problem

- Check all heartbeat interface connections including cables and switches to make sure they are connected and operating normally.

- Use the following commands to display the status of the heartbeat interfaces.

get hardware nic <heartbeat_interface_name>

diagnose hardware deviceinfo nic <heartbeat_interface_name>

The status information may indicate the interface status and link status and also indicate if a large number of errors have been detected.

- If the log message only appears during peak traffic times, increase the tolerance for missed HA heartbeat packets by using the following commands to increase the lost heartbeat threshold and heartbeat interval:

config system ha

set hb-lost-threshold 12

set hb-interval 4

end

These settings multiply by 4 the loss detection interval. You can use higher values as well.

This condition can also occur if the cluster units are located in different buildings or even different geographical locations. In such a configuration, called a distributed cluster, the separation may mean that heartbeat packets take a relatively long time to travel between cluster units. You can support a distributed cluster by increasing the heartbeat interval so that the cluster expects extra time between heartbeat packets.

- Optionally disable session-pickup to reduce the processing load on the heartbeat interfaces.

- Instead of disabling session-pickup you can enable session-pickup-delay to reduce the number of sessions that are synchronized. With this option enabled only sessions that are active for more than 30 seconds are synchronized.
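The loss detection time used in this procedure is hb-interval multiplied by hb-lost-threshold. The following is a small worked sketch of that arithmetic. It assumes that hb-interval is counted in units of 100 ms and that the defaults are hb-interval 2 and hb-lost-threshold 6, which is consistent with the 1.2 seconds quoted earlier but is not stated in this section; `detection_time_ms` is a hypothetical name.

```python
# Assumption: hb-interval is counted in units of 100 ms, and the defaults are
# hb-interval 2 and hb-lost-threshold 6 (consistent with the 1.2 s quoted above).
HB_INTERVAL_UNIT_MS = 100

def detection_time_ms(hb_interval, hb_lost_threshold):
    """Time without heartbeats before a peer is considered lost, in ms."""
    return hb_interval * HB_INTERVAL_UNIT_MS * hb_lost_threshold

default_ms = detection_time_ms(2, 6)
tuned_ms = detection_time_ms(4, 12)  # values from the command in this procedure
print(default_ms / 1000, "s with the assumed defaults")
print(tuned_ms / 1000, "s after tuning")
print("ratio:", tuned_ms // default_ms)
```

Working in whole milliseconds keeps the comparison exact and makes it easy to check a proposed pair of values against the latency of a distributed cluster's heartbeat link.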

It may be useful to monitor CPU and memory usage to check for low memory and high CPU usage. You can configure event logging to monitor CPU and memory usage. You can also enable the CPU over usage and memory low SNMP events.

Once this monitoring is in place, try to determine whether any recent changes in the network or an increase in traffic could be the cause. Check whether the problem happens frequently and, if so, what the pattern is.

To monitor the CPU of the cluster units and troubleshoot further, use the following procedure and commands:

get system performance status

get system performance top 2

diagnose sys top 2

These commands repeated at frequent intervals will show the activity of the CPU and the number of sessions.

Search the Fortinet Knowledge Base for articles about monitoring CPU and Memory usage.

If the problem persists, gather the following information (a console connection might be necessary if connectivity is lost) and provide it to Technical Support when opening a ticket:

- Debug log from the web-based manager: System > Config > Advanced > Download Debug Log

- CLI command output:

diagnose sys top 2 (keep it running for 20 seconds)

get system performance status (repeat this command multiple times to get good samples)

get system ha status

diagnose sys ha status

diagnose sys ha dump-by {all options}

diagnose netlink device list

diagnose hardware deviceinfo nic <heartbeat-interface-name>

execute log filter category 1

execute log display

Formatting cluster unit hard disks (log disks)

If you need to format the hard disk (also called log disk or disk storage) of one or more cluster units, you should disconnect the unit from the cluster, use the execute formatlogdisk command to format the cluster unit hard disk, and then add the unit back to the cluster.

For information about how to remove a unit from a cluster and add it back, see Disconnecting a cluster unit from a cluster and Adding a disconnected FortiGate unit back to its cluster.

Once you add the cluster unit with the formatted log disk back to the cluster you should make it the primary unit before removing other units from the cluster to format their log disks and then add them back to the cluster.

Clusters and SNMP

You can use SNMP to manage a cluster by configuring a cluster interface for SNMP administrative access. Using an SNMP manager you can get cluster configuration and status information and receive traps.

You configure SNMP for a cluster in the same way as configuring SNMP for a standalone FortiGate unit. SNMP configuration changes made to the cluster are shared by all cluster units.

Each cluster unit sends its own traps and SNMP manager systems can use SNMP get commands to query each cluster unit separately. To send SNMP get queries to each cluster unit you must create a special get command that includes the serial number of the cluster unit.

Alternatively you can use the HA reserved management interface feature to give each cluster unit a different management IP address. Then you can create an SNMP get command for each cluster unit that just includes the management IP address and does not have to include the serial number.

SNMP get command syntax for the primary unit

Normally, to get configuration and status information for a standalone FortiGate unit or for a primary unit, an SNMP manager would use an SNMP get command to get the information in a MIB field. The SNMP get command syntax would be similar to the following:

snmpget -v2c -c <community_name> <address_ipv4> {<OID> | <MIB_field>}

where:

<community_name> is an SNMP community name added to the FortiGate configuration. You can add more than one community name to a FortiGate SNMP configuration. The most commonly used community name is public.

<address_ipv4> is the IP address of the FortiGate interface that the SNMP manager connects to.

{<OID> | <MIB_field>} is the object identifier (OID) for the MIB field or the MIB field name itself. The HA MIB fields and OIDs are listed below:

SNMP field names and OIDs

| MIB field | OID | Description |

|---|---|---|

| fgHaSystemMode | .1.3.6.1.4.1.12356.101.13.1.1.0 | HA mode (standalone, a-a, or a-p) |

| fgHaGroupId | .1.3.6.1.4.1.12356.101.13.1.2.0 | The HA group ID of the cluster. |

| fgHaPriority | .1.3.6.1.4.1.12356.101.13.1.3.0 | The HA priority of the cluster unit. Default 128. |

| fgHaOverride | .1.3.6.1.4.1.12356.101.13.1.4.0 | Whether HA override is disabled or enabled for the cluster unit. |

| fgHaAutoSync | .1.3.6.1.4.1.12356.101.13.1.5.0 | Whether automatic HA synchronization is disabled or enabled. |

| fgHaSchedule | .1.3.6.1.4.1.12356.101.13.1.6.0 | The HA load balancing schedule. Set to none unless operating in a-a mode. |

| fgHaGroupName | .1.3.6.1.4.1.12356.101.13.1.7.0 | The HA group name. |

| fgHaStatsIndex | .1.3.6.1.4.1.12356.101.13.2.1.1.1.1 | The cluster index of the cluster unit. 1 for the primary unit, 2 to x for the subordinate units. |

| fgHaStatsSerial | .1.3.6.1.4.1.12356.101.13.2.1.1.2.1 | The serial number of the cluster unit. |

| fgHaStatsCpuUsage | .1.3.6.1.4.1.12356.101.13.2.1.1.3.1 | The cluster unit’s current CPU usage. |

| fgHaStatsMemUsage | .1.3.6.1.4.1.12356.101.13.2.1.1.4.1 | The cluster unit’s current Memory usage. |

| fgHaStatsNetUsage | .1.3.6.1.4.1.12356.101.13.2.1.1.5.1 | The cluster unit’s current Network bandwidth usage. |

| fgHaStatsSesCount | .1.3.6.1.4.1.12356.101.13.2.1.1.6.1 | The cluster unit’s current session count. |

| fgHaStatsPktCount | .1.3.6.1.4.1.12356.101.13.2.1.1.7.1 | The cluster unit’s current packet count. |

| fgHaStatsByteCount | .1.3.6.1.4.1.12356.101.13.2.1.1.8.1 | The cluster unit’s current byte count. |

| fgHaStatsIdsCount | .1.3.6.1.4.1.12356.101.13.2.1.1.9.1 | The number of attacks reported by the IPS for the cluster unit. |

| fgHaStatsAvCount | .1.3.6.1.4.1.12356.101.13.2.1.1.10.1 | The number of viruses reported by the antivirus system for the cluster unit. |

| fgHaStatsHostname | .1.3.6.1.4.1.12356.101.13.2.1.1.11.1 | The hostname of the cluster unit. |

To get the HA priority for the primary unit

The following SNMP get command gets the HA priority for the primary unit. The community name is public. The IP address of the cluster interface configured for SNMP management access is 10.10.10.1. The HA priority MIB field is fgHaPriority and the OID for this MIB field is 1.3.6.1.4.1.12356.101.13.1.3.0. The first command uses the MIB field name and the second uses the OID:

snmpget -v2c -c public 10.10.10.1 fgHaPriority

snmpget -v2c -c public 10.10.10.1 1.3.6.1.4.1.12356.101.13.1.3.0
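If you script these queries, the command line can be assembled programmatically. The following Python sketch only builds the argument list for the snmpget command shown above; it does not contact a device. The function name build_snmpget is hypothetical, and the community name and address are the example values from this section.

```python
def build_snmpget(community, address, field):
    """Return the snmpget argument list for one MIB field name or OID."""
    return ["snmpget", "-v2c", "-c", community, address, field]

# Example values from this section: community "public", interface 10.10.10.1.
by_name = build_snmpget("public", "10.10.10.1", "fgHaPriority")
by_oid = build_snmpget("public", "10.10.10.1", "1.3.6.1.4.1.12356.101.13.1.3.0")

print(" ".join(by_name))
print(" ".join(by_oid))
```

The resulting list could be passed to a process launcher on a host where snmpget is installed. Querying by field name assumes that the SNMP manager has the FortiGate MIB loaded; the numeric OID form does not depend on that.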

SNMP get command syntax for any cluster unit

To get configuration and status information for a specific cluster unit (for the primary unit or for any subordinate unit), the SNMP manager must add the serial number of the cluster unit to the SNMP get command after the community name. The community name and the serial number are separated with a dash. The syntax for this SNMP get command is:

snmpget -v2c -c <community_name>-<fgt_serial> <address_ipv4> {<OID> | <MIB_field>}

where:

<community_name> is an SNMP community name added to the FortiGate configuration. You can add more than one community name to a FortiGate SNMP configuration. All units in the cluster have the same community name. The most commonly used community name is public.

<fgt_serial> is the serial number of any cluster unit. For example, FGT4002803033172. You can specify the serial number of any cluster unit, including the primary unit, to get information for that unit.

<address_ipv4> is the IP address of the FortiGate interface that the SNMP manager connects to.

{<OID> | <MIB_field>} is the object identifier (OID) for the MIB field or the MIB field name itself.

If the serial number matches the serial number of a subordinate unit, the SNMP get request is sent over the HA heartbeat link to the subordinate unit. After processing the request, the subordinate unit sends the reply over the HA heartbeat link back to the primary unit. The primary unit then forwards the response to the SNMP manager.

If the serial number matches the serial number of the primary unit, the SNMP get request is processed by the primary unit. You can actually add a serial number to the community name of any SNMP get request. But normally you only need to do this for getting information from a subordinate unit.

To get the CPU usage for a subordinate unit

The following SNMP get command gets the CPU usage for a subordinate unit in a FortiGate-5001SX cluster. The subordinate unit has serial number FG50012205400050. The community name is public. The IP address of the FortiGate interface is 10.10.10.1. The HA status table MIB field is fgHaStatsCpuUsage and the OID for this MIB field is 1.3.6.1.4.1.12356.101.13.2.1.1.3.1. The first command uses the MIB field name and the second uses the OID for this table:

snmpget -v2c -c public-FG50012205400050 10.10.10.1 fgHaStatsCpuUsage

snmpget -v2c -c public-FG50012205400050 10.10.10.1 1.3.6.1.4.1.12356.101.13.2.1.1.3.1

FortiGate SNMP recognizes the community name with syntax <community_name>-<fgt_serial>. When the primary unit receives an SNMP get request that includes the community name followed by serial number, the FGCP extracts the serial number from the request. Then the primary unit redirects the SNMP get request to the cluster unit with that serial number. If the serial number matches the serial number of the primary unit, the SNMP get is processed by the primary unit.
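The <community_name>-<fgt_serial> convention can be illustrated with a short Python sketch. It shows how a manager might build the combined community string and how such a string could be split again. This is not the FGCP implementation; the function names are hypothetical, and the splitting logic assumes the list of configured community names is known.

```python
def community_for_unit(community, serial=None):
    """Append a unit serial number to the community name, separated by a dash."""
    return f"{community}-{serial}" if serial else community

def split_community(value, known_communities):
    """Split '<community_name>-<fgt_serial>' into (community, serial or None)."""
    # Check longer names first so that a community name containing a dash
    # is not mistaken for a shorter name plus a serial number.
    for name in sorted(known_communities, key=len, reverse=True):
        if value == name:
            return name, None
        if value.startswith(name + "-"):
            return name, value[len(name) + 1:]
    raise ValueError(f"unknown community: {value}")

# Serial number taken from the subordinate unit example in this section.
combined = community_for_unit("public", "FG50012205400050")
print(combined)
print(split_community(combined, ["public"]))
```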

Getting serial numbers of cluster units

The following SNMP get commands use the MIB field name fgHaStatsSerial.<index> to get the serial number of each cluster unit, where <index> is the cluster unit’s cluster index: 1 is the cluster index of the primary unit, 2 is the cluster index of the first subordinate unit, and 3 is the cluster index of the second subordinate unit.

The OID for this MIB field with cluster index 1 is 1.3.6.1.4.1.12356.101.13.2.1.1.2.1; the last number of the OID is the cluster index. The community name is public. The IP address of the FortiGate interface is 10.10.10.1.

The following commands get the serial number of the primary unit. The first command uses the MIB field name and the second uses the OID:

snmpget -v2c -c public 10.10.10.1 fgHaStatsSerial.1

snmpget -v2c -c public 10.10.10.1 1.3.6.1.4.1.12356.101.13.2.1.1.2.1

The following commands get the serial number of the first subordinate unit. The first command uses the MIB field name and the second uses the OID:

snmpget -v2c -c public 10.10.10.1 fgHaStatsSerial.2

snmpget -v2c -c public 10.10.10.1 1.3.6.1.4.1.12356.101.13.2.1.1.2.2
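Because the cluster index is the final component of the OID, the OID for any cluster unit can be derived from the fgHaStatsSerial column OID. The following Python sketch shows that derivation; the constant and function names are hypothetical, and the column OID is the table entry for fgHaStatsSerial with its trailing index removed.

```python
# fgHaStatsSerial column OID without the trailing cluster index.
FG_HA_STATS_SERIAL = "1.3.6.1.4.1.12356.101.13.2.1.1.2"

def serial_oid(cluster_index):
    """Return the fgHaStatsSerial OID for a cluster index (1 = primary unit)."""
    if cluster_index < 1:
        raise ValueError("cluster index starts at 1")
    return f"{FG_HA_STATS_SERIAL}.{cluster_index}"

for index in (1, 2, 3):
    print(index, serial_oid(index))
```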

SNMP get command syntax - reserved management interface enabled

To get configuration and status information for any cluster unit where you have enabled the HA reserved management interface feature and assigned IP addresses to the management interface of each cluster unit, an SNMP manager would use the following get command syntax:

snmpget -v2c -c <community_name> <mgmt_address_ipv4> {<OID> | <MIB_field>}

where:

<community_name> is an SNMP community name added to the FortiGate configuration. You can add more than one community name to a FortiGate SNMP configuration. The most commonly used community name is public.

<mgmt_address_ipv4> is the IP address of the FortiGate HA reserved management interface that the SNMP manager connects to.

{<OID> | <MIB_field>} is the object identifier (OID) for the MIB field or the MIB field name itself. To find OIDs and MIB field names see your FortiGate unit’s online help.

Adding FortiClient licenses to a cluster

Each FortiGate unit in a cluster must have its own FortiClient license. Contact your reseller to purchase FortiClient licenses for all of the FortiGate units in your cluster.

When you receive the license keys, you can log into the Fortinet Support site and add the FortiClient license keys to each FortiGate unit. Then, as long as the cluster can connect to the Internet, each cluster unit receives its FortiClient license key from the FortiGuard network.

Adding FortiClient licenses to cluster units with a reserved management interface

You can also use the following steps to manually add license keys to your cluster units from the web-based manager or CLI. Your cluster must be connected to the Internet and you must have configured a reserved management interface for each cluster unit.

- Log into the web-based manager of each cluster unit using its reserved management interface IP address.

- Go to the License Information dashboard widget and beside FortiClient select Enter License.

- Enter the license key and select OK.

- Confirm that the license has been installed and the correct number of FortiClients are licensed.

- Repeat for all of the cluster units.

You can also use the reserved management IP address to log into each cluster unit CLI and use the following command to add the license key:

execute FortiClient-NAC update-registration-license <license-key>

You can connect to the CLIs of each cluster unit using their reserved management IP address.

Adding FortiClient licenses to cluster units with no reserved management interface

If you have not set up reserved management IP addresses for your cluster units, you can still add FortiClient license keys to each cluster unit. You must log into the primary unit and then use the execute ha manage command to connect to each cluster unit CLI. For example, use the following steps to add a FortiClient license key to each unit in a cluster of three FortiGate units:

- Log into the primary unit CLI and enter the following command to confirm the serial number of the primary unit:

get system status

- Add the FortiClient license key for that serial number to the primary unit:

execute FortiClient-NAC update-registration-license <license-key>

You can also use the web-based manager to add the license key to the primary unit.

- Enter the following command to log into the first subordinate unit:

execute ha manage 1

- Enter the following command to confirm the serial number of the cluster unit that you have logged into:

get system status

- Add the FortiClient license key for that serial number to the cluster unit:

execute FortiClient-NAC update-registration-license <license-key>

- Enter the following command to log into the second subordinate unit:

execute ha manage 2

- Enter the following command to confirm the serial number of the cluster unit that you have logged into:

get system status

- Add the FortiClient license key for that serial number to the cluster unit:

execute FortiClient-NAC update-registration-license <license-key>
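The command sequence in the steps above follows a regular pattern, which the following Python sketch generates as a checklist. It only produces text; it does not connect to a FortiGate unit. The function name and the placeholder keys are hypothetical, and the execute ha manage indexes 1 and 2 are the ones used in this example for a cluster of three units.

```python
LICENSE_CMD = "execute FortiClient-NAC update-registration-license {key}"

def license_session(primary_key, subordinate_keys):
    """Return the CLI commands in the order used by the steps above.

    subordinate_keys maps an `execute ha manage` index to that unit's key.
    """
    commands = ["get system status", LICENSE_CMD.format(key=primary_key)]
    for index, key in sorted(subordinate_keys.items()):
        commands += [
            f"execute ha manage {index}",
            "get system status",
            LICENSE_CMD.format(key=key),
        ]
    return commands

# Placeholder keys; substitute the key issued for each serial number.
session = license_session("<key-primary>", {1: "<key-sub1>", 2: "<key-sub2>"})
for line in session:
    print(line)
```

As in the manual steps, the get system status output should be checked before each license command so that the key matches the serial number of the unit you are logged into.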

Viewing FortiClient license status and active FortiClient users for each cluster unit

To view FortiClient license status and FortiClient information for each cluster unit you must log into each cluster unit’s web-based manager or CLI. You can do this by connecting to each cluster unit’s reserved management interface if they are configured. If you have not configured reserved management interfaces you can use the execute ha manage command to log into each cluster unit CLI.

From the web-based manager, view FortiClient License status from the License Information dashboard widget and select Details to display the list of active FortiClient users connecting through that cluster unit. You can also see active FortiClient users by going to User & Device > Monitor > FortiClient.

From the CLI you can use the execute forticlient {list | info} command to display FortiClient license status and active FortiClient users.

For example, use the following command to display the FortiClient license status of the cluster unit that you are logged into:

execute forticlient info

Maximum FortiClient connections: unlimited.

Licensed connections: 114

NAC: 114

WANOPT: 0

Test: 0

Other connections:

IPsec: 0

SSLVPN: 0
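If you collect this output from each cluster unit, for example to compare licensed connection counts, it can be parsed with a few lines of Python. The following sketch assumes the output has the "name: value" layout shown above; the function name is hypothetical and the sample text is copied from this example.

```python
SAMPLE = """\
Maximum FortiClient connections: unlimited.
Licensed connections: 114
NAC: 114
WANOPT: 0
Test: 0
Other connections:
IPsec: 0
SSLVPN: 0
"""

def parse_forticlient_info(text):
    """Parse 'name: value' lines into a dict, converting counts to int."""
    counts = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        value = value.strip().rstrip(".")
        if not sep or not value:
            continue  # skip blank lines and headers such as "Other connections:"
        counts[key.strip()] = int(value) if value.isdigit() else value
    return counts

info = parse_forticlient_info(SAMPLE)
print(info["Licensed connections"], info["NAC"])
```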

Use the following command to display the list of active FortiClient users connecting through the cluster unit. The output shows the time the connection was established, the type of FortiClient connection, the name of the device, the user name of the person connecting, the FortiClient ID, the host operating system, and the source IP address of the session.

execute forticlient list

TIMESTAMP TYPE CONNECT-NAME USER CLIENT-ID HOST-OS SRC-IP

20141017 09:13:33 NAC Gordon-PC Gordon 11F76E902611484A942E31439E428C5C Microsoft Windows 7 , 64-bit Service Pack 1 (build 7601) 172.20.120.10

20141017 09:11:55 NAC Gordon-PC 11F76E902611484A942E31439E428C5C Microsoft Windows 7 , 64-bit Service Pack 1 (build 7601) 172.20.120.10

20141017 07:27:11 NAC Desktop11 Richie 9451C0B8EE3740AEB7019E920BB3761B Microsoft Windows 7, 64-bit Service Pack 1 (build 7601) 172.20.120.20

Cluster members list

To display the cluster members list, go to System > Config > HA.

The cluster members list displays illustrations of the front panels of the cluster units. If the network jack for an interface is shaded green, the interface is connected. Hover the mouse pointer over each illustration to view the cluster unit host name, serial number, and how long the unit has been operating (up time). The list of monitored interfaces is also displayed.

From the cluster members list you can:

- View HA statistics.

- Use the up and down arrows to change the order in which cluster units are listed.

- See the host name of each cluster unit. To change the primary unit host name, go to the system dashboard and select Change beside the current host name in the System Information widget. To view and change a subordinate unit host name, from the cluster members list select the edit icon for a subordinate unit.

- View the status or role of each cluster unit.

- View and optionally change the HA configuration of the operating cluster.

- View and optionally change the host name and device priority of a subordinate unit.

- Disconnect a cluster unit from a cluster.

- Download the Debug log for any cluster unit. You can send this debug log file to Fortinet Technical Support to help diagnose problems with the cluster or with individual cluster units.

Virtual cluster members list

If virtual domains are enabled, you can display the cluster members list to view the status of the operating virtual clusters. The virtual cluster members list shows the status of both virtual clusters including the virtual domains added to each virtual cluster.

To display the virtual cluster members list for an operating cluster, log in as the admin administrator, select Global Configuration and go to System > Config > HA.

The functions of the virtual cluster members list are the same as the functions of the Cluster Members list with the following exceptions.

- When you select the edit icon for a primary unit in a virtual cluster, you can change the virtual cluster 1 and virtual cluster 2 device priority of this cluster unit and you can edit the VDOM partitioning configuration of the cluster.

- When you select the edit icon for a subordinate unit in a virtual cluster, you can change the device priority for the subordinate unit for the selected virtual cluster.

Also, the HA cluster members list changes depending on the cluster unit that you connect to.

Viewing HA statistics

From the cluster members list you can select View HA Statistics to display the serial number, status, and monitor information for each cluster unit. To view HA statistics, go to System > Config > HA and select View HA Statistics. Note the following about the HA statistics display:

- Use the serial number ID to identify each FortiGate unit in the cluster. The cluster ID matches the FortiGate unit serial number.

- Status indicates the status of each cluster unit. A green check mark indicates that the cluster unit is operating normally. A red X indicates that the cluster unit cannot communicate with the primary unit.

- The up time is the time in days, hours, minutes, and seconds since the cluster unit was last started.

- The web-based manager displays CPU usage for core processes only. CPU usage for management processes (for example, for HTTPS connections to the web-based manager) is excluded.

- The web-based manager displays memory usage for core processes only. Memory usage for management processes (for example, for HTTPS connections to the web-based manager) is excluded.

Changing the HA configuration of an operating cluster

To change the configuration settings of an operating cluster, go to System > Config > HA to display the cluster members list. Select Edit for the master (or primary) unit in the cluster members list to display the HA configuration page for the cluster.

You can use the HA configuration page to check and fine tune the configuration of the cluster after the cluster is up and running. For example, if you connect or disconnect cluster interfaces you may want to change the Port Monitor configuration.

Any changes you make on this page, with the exception of changes to the device priority, are first made to the primary unit configuration and then synchronized to the subordinate units. Changing the device priority only affects the primary unit.

Changing the HA configuration of an operating virtual cluster

To change the configuration settings of the primary unit in a functioning cluster with virtual domains enabled, log in as the admin administrator, select Global Configuration and go to System > Config > HA to display the cluster members list. Select Edit for the master (or primary) unit in virtual cluster 1 or virtual cluster 2 to display the HA configuration page for the virtual cluster.

You can use the virtual cluster HA configuration page to check and fine tune the configuration of both virtual clusters after the cluster is up and running. For example, you may want to change the Port Monitor configuration for virtual cluster 1 and virtual cluster 2 so that each virtual cluster monitors its own interfaces.

You can also use this configuration page to move virtual domains between virtual cluster 1 and virtual cluster 2. Usually you would distribute virtual domains between the two virtual clusters to balance the amount of traffic being processed by each virtual cluster.

Any changes you make on this page, with the exception of changes to the device priorities, are first made to the primary unit configuration and then synchronized to the subordinate unit.

You can also adjust device priorities to configure the role of this cluster unit in the virtual cluster. For example, to distribute traffic to both cluster units in the virtual cluster configuration, you would want one cluster unit to be the primary unit for virtual cluster 1 and the other cluster unit to be the primary unit for virtual cluster 2. You can create this configuration by setting the device priorities. The cluster unit with the highest device priority in virtual cluster 1 becomes the primary unit for virtual cluster 1. The cluster unit with the highest device priority in virtual cluster 2 becomes the primary unit in virtual cluster 2.
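As a rough illustration of the priority rule described above, the following Python sketch picks the primary unit for each virtual cluster by comparing device priorities only. The function name and priority values are hypothetical, and the sketch ignores the other factors the cluster considers during negotiation (see An introduction to the FGCP).

```python
def primary_unit(priorities):
    """Return the unit with the highest device priority (valid range 0 to 255)."""
    for unit, value in priorities.items():
        if not 0 <= value <= 255:
            raise ValueError(f"{unit}: device priority {value} is outside 0-255")
    return max(priorities, key=priorities.get)

# Hypothetical priorities: each unit is favored in one virtual cluster.
vcluster1 = {"FGT_ha_1": 200, "FGT_ha_2": 100}
vcluster2 = {"FGT_ha_1": 100, "FGT_ha_2": 200}

print("virtual cluster 1 primary:", primary_unit(vcluster1))
print("virtual cluster 2 primary:", primary_unit(vcluster2))
```

With these values each cluster unit is the primary unit for one virtual cluster, which is the traffic distribution described above.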

Changing the subordinate unit host name and device priority

To change the host name and device priority of a subordinate unit in an operating cluster, go to System > Config > HA to display the cluster members list. Select Edit for any slave (subordinate) unit in the cluster members list.

To change the host name and device priority of a subordinate unit in an operating cluster with virtual domains enabled, log in as the admin administrator, select Global Configuration and go to System > Config > HA to display the cluster members list. Select Edit for any slave (subordinate) unit in the cluster members list.

You can change the host name (Peer) and device priority (Priority) of this subordinate unit. These changes only affect the configuration of the subordinate unit.

The device priority is not synchronized among cluster members. In a functioning cluster you can change device priority to change the priority of any unit in the cluster. The next time the cluster negotiates, the cluster unit with the highest device priority becomes the primary unit.

The device priority range is 0 to 255. The default device priority is 128.

Upgrading cluster firmware

You can upgrade the FortiOS firmware running on an HA cluster in the same manner as upgrading the firmware running on a standalone FortiGate unit. During a normal firmware upgrade, the cluster upgrades the primary unit and all subordinate units to run the new firmware image. The firmware upgrade takes place without interrupting communication through the cluster.

Upgrading cluster firmware to a new major release (for example upgrading from 5.0 MRx to 5.2.2) is supported for clusters. Make sure you are taking an upgrade path described in the release notes. Even so you should back up your configuration and only perform such a firmware upgrade during a maintenance window.

To upgrade the firmware without interrupting communication through the cluster, the cluster goes through a series of steps that involve first upgrading the firmware running on the subordinate units, then making one of the subordinate units the primary unit, and finally upgrading the firmware on the former primary unit. These steps are transparent to the user and the network, but depending upon your HA configuration may result in the cluster selecting a new primary unit.

The following sequence describes in detail the steps the cluster goes through during a firmware upgrade and how different HA configuration settings may affect the outcome.

- The administrator uploads a new firmware image from the web-based manager or CLI.

- If the cluster is operating in active-active mode, load balancing is turned off.

- The cluster upgrades the firmware running on all of the subordinate units.

- Once the subordinate units have been upgraded, a new primary unit is selected.

This primary unit will be running the new upgraded firmware.

- The cluster now upgrades the firmware of the former primary unit.

If the age of the new primary unit is more than 300 seconds (5 minutes) greater than the age of all other cluster units, the new primary unit continues to operate as the primary unit.

This is the intended behavior but does not usually occur because the age difference of the cluster units is usually less than the cluster age difference margin of 300 seconds. So instead, the cluster negotiates again to select a primary unit as described in An introduction to the FGCP.

You can keep the cluster from negotiating again by reducing the cluster age difference margin using the ha-uptime-diff-margin option. However, you should be cautious when reducing the margin or other problems may occur. For information about the cluster age difference margin and how to change it, see An introduction to the FGCP.

- If the cluster is operating in active-active mode, load balancing is turned back on.
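The age comparison described in the sequence above can be expressed as a small check. The following Python sketch is only a model of that one rule: the new primary unit keeps its role when its age exceeds the age of every other cluster unit by more than the margin. The function name and the sample ages are hypothetical; the 300 second default is the cluster age difference margin stated above.

```python
AGE_DIFF_MARGIN = 300  # seconds, the default cluster age difference margin

def keeps_primary_role(new_primary_age, other_ages, margin=AGE_DIFF_MARGIN):
    """True if the new primary unit is older than all other units by more than margin."""
    return all(new_primary_age - age > margin for age in other_ages)

# Hypothetical ages in seconds.
print(keeps_primary_role(1000, [600, 650]))  # differences of 400 and 350 seconds
print(keeps_primary_role(1000, [800]))       # difference of 200 seconds
```

When the check is false, the cluster negotiates again to select a primary unit, as described above.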

Changing how the cluster processes firmware upgrades

By default, cluster firmware upgrades proceed as uninterruptible upgrades that do not interrupt traffic flow. If required, you can use the following CLI command to change how the cluster handles firmware upgrades. You might want to change this setting if you find that uninterruptible upgrades take too much time.

config system ha

set uninterruptible-upgrade disable

end

uninterruptible-upgrade is enabled by default. If you disable uninterruptible-upgrade, the cluster still upgrades the firmware on all cluster units, but all cluster units are upgraded at once, which takes less time but interrupts communication through the cluster.
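The difference between the two settings can be pictured as the order in which units are upgraded. The following Python sketch is a simplified model under that reading, not a description of the internal upgrade logic: with the option enabled the subordinate units are upgraded first and the former primary unit last, and with the option disabled every unit is upgraded in a single batch. The function name and unit names are hypothetical.

```python
def upgrade_batches(primary, subordinates, uninterruptible=True):
    """Return the batches of units in the order they are upgraded."""
    if uninterruptible:
        # Subordinate units first, then the former primary unit.
        return [list(subordinates), [primary]]
    # All cluster units at once; traffic is interrupted.
    return [[primary, *subordinates]]

print(upgrade_batches("FGT_ha_1", ["FGT_ha_2", "FGT_ha_3"]))
print(upgrade_batches("FGT_ha_1", ["FGT_ha_2", "FGT_ha_3"], uninterruptible=False))
```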

Synchronizing the firmware build running on a new cluster unit

If the firmware build running on a FortiGate unit that you add to a cluster is older than the cluster firmware build, you may be able to use the following steps to synchronize the firmware running on the new cluster unit.

This procedure describes re-installing the same firmware build on a cluster to force the cluster to upgrade all cluster units to the same firmware build.

Due to firmware upgrade and synchronization issues, in some cases this procedure may not work. The approach that works in all cases is to install the firmware build that the cluster is running on the new unit before adding the new unit to the cluster.

To synchronize the firmware build running on a new cluster unit

- Obtain a firmware image that is the same as the build already running on the cluster.

- Connect to the cluster using the web-based manager.

- Go to the System Information dashboard widget.

- Select Update beside Firmware Version.

You can also install a newer firmware build.

- Select OK.

After the firmware image is uploaded to the cluster, the primary unit upgrades all cluster units to this firmware build.

Downgrading cluster firmware

For various reasons you may need to downgrade the firmware that a cluster is running. You can use the information in this section to downgrade the firmware version running on a cluster.

In most cases you can downgrade the firmware on an operating cluster using the same steps as for a firmware upgrade. A warning message appears during the downgrade but the downgrade usually works and after the downgrade the cluster continues operating normally with the older firmware image.

Downgrading between some firmware versions, especially if features have changed between the two versions, may require you to fix configuration issues after the downgrade.

Only perform firmware downgrades during maintenance windows and make sure you back up your cluster configuration before the downgrade.

If the firmware downgrade that you are planning may not work without configuration loss or other problems, you can use the following downgrade procedure to make sure your configuration is not lost after the downgrade.

To downgrade cluster firmware

This example shows how to downgrade the cluster shown in Example NAT/Route mode HA network topology. The cluster consists of two cluster units (FGT_ha_1 and FGT_ha_2). The port1 and port2 interfaces are connected to networks and the port3 and port4 interfaces are connected together for the HA heartbeat.

This example describes separating each unit from the cluster and downgrading the firmware for the standalone FortiGate units. There are several ways you could disconnect units from the cluster. This example describes using the disconnect from cluster function on the cluster members list GUI page.

- Go to the System Information dashboard widget and back up the cluster configuration.

From the CLI use execute backup config.

- Go to System > Config > HA and for FGT_ha_1 select the Disconnect from cluster icon.

- Select the port2 interface and enter an IP address and netmask of 10.11.101.101/24 and select OK.

From the CLI you can enter the following command (FG600B3908600705 is the serial number of the cluster unit) to be able to manage the standalone FortiGate unit by connecting to the port2 interface with IP address and netmask 10.11.101.101/24.

execute ha disconnect FG600B3908600705 port2 10.11.101.101/24

After FGT_ha_1 is disconnected, FGT_ha_2 continues processing traffic.

- Connect to the FGT_ha_1 web-based manager or CLI using IP address 10.11.101.101/24 and follow normal procedures to downgrade standalone FortiGate unit firmware.

- When the downgrade is complete, confirm that the configuration of FGT_ha_1 is correct.

- Set the HA mode of FGT_ha_2 to Standalone and follow normal procedures to downgrade standalone FortiGate unit firmware.

Network communication will be interrupted for a short time during the downgrade.

- When the downgrade is complete, confirm that the configuration of FGT_ha_2 is correct.

- Set the HA mode of FGT_ha_2 to Active-Passive or the required HA mode.

- Set the HA mode of FGT_ha_1 to the same mode as FGT_ha_2.

If you have not otherwise changed the HA settings of the cluster units, and if the firmware downgrades have not affected the configurations, the units should negotiate and form a cluster running the downgraded firmware.

Backing up and restoring the cluster configuration

You can back up the configuration of the primary unit by logging into the web-based manager or CLI and following normal configuration backup procedures.

The following configuration settings are not synchronized to all cluster units:

- HA override and priority

- The interface configuration of the HA reserved management interface (config system interface)

- The HA reserved management interface default IPv4 route (ha-mgmt-interface-gateway)

- The HA reserved management interface default IPv6 route (ha-mgmt-interface-gateway6)

- The FortiGate unit host name.

To back up these configuration settings for each cluster unit, you must log into each cluster unit and back up its configuration.

If you need to restore the configuration of the cluster including the configuration settings that are not synchronized you should first restore the configuration of the primary unit and then restore the configuration of each cluster unit. Alternatively you could log into each cluster unit and manually add the configuration settings that were not restored.

Monitoring cluster units for failover

If the primary unit in the cluster fails, the units in the cluster renegotiate to select a new primary unit. Failure of the primary unit results in the following:

- If SNMP is enabled, the new primary unit sends HA trap messages. The messages indicate a cluster status change, HA heartbeat failure, and HA member down.

- If event logging is enabled and HA activity event is selected, the new primary unit records log messages that show that the unit has become the primary unit.

- If alert email is configured to send email for HA activity events, the new primary unit sends an alert email containing the log message recorded by the event log.

- The cluster contains fewer FortiGate units. The failed primary unit no longer appears on the Cluster Members list.

- The host name and serial number of the primary unit changes. You can see these changes when you log into the web-based manager or CLI.

- The cluster info displayed on the dashboard, cluster members list or from the get system ha status command changes.

If a subordinate unit fails, the cluster continues to function normally. Failure of a subordinate unit results in the following:

- If event logging is enabled and HA activity event is selected, the primary unit records log messages that show that a subordinate has been removed from the cluster.

- If alert email is configured to send email for HA activity events, the primary unit sends an alert email containing the log message recorded by the event log.

- The cluster contains fewer FortiGate units. The failed unit no longer appears on the Cluster Members list.

Viewing cluster status from the CLI

Use the get system ha status command to display information about an HA cluster. The command displays general HA configuration settings. The command also displays information about how the cluster unit that you have logged into is operating in the cluster.

Usually you would log into the primary unit CLI using SSH or telnet. In this case the get system ha status command displays information about the primary unit first, and also displays the HA state of the primary unit (the primary unit operates in the work state). However, if you log into the primary unit and then use the execute ha manage command to log into a subordinate unit (or if you use a console connection to log into a subordinate unit), the get system ha status command displays information about this subordinate unit first, and also displays the HA state of this subordinate unit. The state of a subordinate unit is work for an active-active cluster and standby for an active-passive cluster.

For a virtual cluster configuration, the get system ha status command displays information about how the cluster unit that you have logged into is operating in virtual cluster 1 and virtual cluster 2. For example, if you connect to the cluster unit that is the primary unit for virtual cluster 1 and the subordinate unit for virtual cluster 2, the output of the get system ha status command shows virtual cluster 1 in the work state and virtual cluster 2 in the standby state. The get system ha status command also displays additional information about virtual cluster 1 and virtual cluster 2.
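The HA states described above reduce to a simple rule, shown here as a Python sketch that can help when checking command output against expectations. The function name is hypothetical, the hello state is not modeled, and the virtual cluster example assumes active-passive mode.

```python
def expected_ha_state(role, mode):
    """Return the expected HA state for a unit.

    role is 'primary' or 'subordinate'; mode is 'a-a' or 'a-p'.
    """
    if role not in ("primary", "subordinate"):
        raise ValueError(f"unknown role: {role}")
    if mode not in ("a-a", "a-p"):
        raise ValueError(f"unknown mode: {mode}")
    if role == "primary" or mode == "a-a":
        return "work"
    return "standby"

# A unit that is primary for virtual cluster 1 and subordinate for virtual cluster 2.
print("vcluster 1:", expected_ha_state("primary", "a-p"))
print("vcluster 2:", expected_ha_state("subordinate", "a-p"))
```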

The command display includes the following fields.

| Field | Description |
|---|---|
| Model | The FortiGate model number. |
| Mode | The HA mode of the cluster: a-a or a-p. |
| Group | The group ID of the cluster. |
| Debug | The debug status of the cluster. |
| ses_pickup | The status of session pickup: enable or disable. |
| load_balance | The status of the load-balance-all keyword: enable or disable. Relevant to active-active clusters only. |
| schedule | The active-active load balancing schedule. Relevant to active-active clusters only. |
| Master, Slave | Master displays the device priority, host name, serial number, and cluster index of the primary (or master) unit. Slave displays the device priority, host name, serial number, and cluster index of the subordinate (or slave, or backup) unit or units. The list of cluster units changes depending on how you log into the CLI. Usually you would use SSH or telnet to log into the primary unit CLI. In this case the primary unit would be at the top of the list followed by the other cluster units. If you use execute ha manage or a console connection to log into a subordinate unit CLI, and then enter get system ha status, the subordinate unit that you have logged into appears at the top of the list of cluster units. |
| number of vcluster | The number of virtual clusters. If virtual domains are not enabled, the cluster has one virtual cluster. If virtual domains are enabled, the cluster has two virtual clusters. |
| vcluster 1 (Master, Slave) | The HA state (hello, work, or standby) and HA heartbeat IP address of the cluster unit that you have logged into in virtual cluster 1. If virtual domains are not enabled, vcluster 1 displays information for the cluster. If virtual domains are enabled, vcluster 1 displays information for virtual cluster 1. The HA heartbeat IP address is 169.254.0.2 if you are logged into the primary unit of virtual cluster 1 and 169.254.0.1 if you are logged into a subordinate unit of virtual cluster 1. vcluster 1 also lists the primary unit (master) and subordinate units (slave) in virtual cluster 1. The list includes the cluster index and serial number of each cluster unit in virtual cluster 1. The cluster unit that you have logged into is at the top of the list. If virtual domains are not enabled and you connect to the primary unit CLI, the HA state of the cluster unit in virtual cluster 1 is work. The display lists the cluster units starting with the primary unit. If virtual domains are not enabled and you connect to a subordinate unit CLI, the HA state of the cluster unit in virtual cluster 1 is standby. The display lists the cluster units starting with the subordinate unit that you have logged into. If virtual domains are enabled and you connect to the virtual cluster 1 primary unit CLI, the HA state of the cluster unit in virtual cluster 1 is work. The display lists the cluster units starting with the virtual cluster 1 primary unit. If virtual domains are enabled and you connect to the virtual cluster 1 subordinate unit CLI, the HA state of the cluster unit in virtual cluster 1 is standby. The display lists the cluster units starting with the subordinate unit that you are logged into. |
| vcluster 2 (Master, Slave) | vcluster 2 only appears if virtual domains are enabled. vcluster 2 displays the HA state (hello, work, or standby) and HA heartbeat IP address of the cluster unit that you have logged into in virtual cluster 2. The HA heartbeat IP address is 169.254.0.2 if you are logged into the primary unit of virtual cluster 2 and 169.254.0.1 if you are logged into a subordinate unit of virtual cluster 2. vcluster 2 also lists the primary unit (master) and subordinate units (slave) in virtual cluster 2. The list includes the cluster index and serial number of each cluster unit in virtual cluster 2. The cluster unit that you have logged into is at the top of the list. If you connect to the virtual cluster 2 primary unit CLI, the HA state of the cluster unit in virtual cluster 2 is work. The display lists the cluster units starting with the virtual cluster 2 primary unit. If you connect to the virtual cluster 2 subordinate unit CLI, the HA state of the cluster unit in virtual cluster 2 is standby. The display lists the cluster units starting with the subordinate unit that you are logged into. |

Examples

The following example shows get system ha status output for a cluster of two FortiGate-5001SX units operating in active-active mode. The cluster group ID, session pickup, load balance all, and the load balancing schedule are all set to the default values. The device priority of the primary unit is also set to the default value. The device priority of the subordinate unit has been reduced to 100. The host name of the primary unit is 5001_Slot_4. The host name of the subordinate unit is 5001_Slot_3.

The command output was produced by connecting to the primary unit CLI (host name 5001_Slot_4).

Model: 5000

Mode: a-a

Group: 0

Debug: 0

ses_pickup: disable

load_balance: disable

schedule: round robin

Master:128 5001_Slot_4 FG50012204400045 1

Slave :100 5001_Slot_3 FG50012205400050 0

number of vcluster: 1

vcluster 1: work 169.254.0.2

Master:0 FG50012204400045

Slave :1 FG50012205400050
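If you collect this output in a script, the top part of the display can be turned into structured data. The following Python sketch assumes the output has exactly the layout shown in the example above. The function name `parse_ha_status` and the dictionary keys are hypothetical and are not part of the FortiGate CLI.

```python
import re

# Matches lines such as "Master:128 5001_Slot_4 FG50012204400045 1"
# (role, device priority, host name, serial number, cluster index).
MEMBER_RE = re.compile(r"^(Master|Slave)\s*:\s*(\d+)\s+(\S+)\s+(\S+)\s+(\d+)$")

SAMPLE = """\
Model: 5000
Mode: a-a
Group: 0
Debug: 0
ses_pickup: disable
load_balance: disable
schedule: round robin
Master:128 5001_Slot_4 FG50012204400045 1
Slave :100 5001_Slot_3 FG50012205400050 0
number of vcluster: 1
vcluster 1: work 169.254.0.2
Master:0 FG50012204400045
Slave :1 FG50012205400050
"""


def parse_ha_status(text: str) -> dict:
    """Parse the header fields and the cluster unit list.

    Parsing stops at the "number of vcluster" line, so the per-vcluster
    lines that follow it are ignored by this sketch.
    """
    fields = {}
    members = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = MEMBER_RE.match(line)
        if match:
            role, priority, host, serial, index = match.groups()
            members.append({
                "role": role,
                "priority": int(priority),
                "hostname": host,
                "serial": serial,
                "cluster_index": int(index),
            })
            continue
        key, sep, value = line.partition(":")
        if not sep:
            continue
        fields[key.strip()] = value.strip()
        if key.strip() == "number of vcluster":
            break
    return {"fields": fields, "members": members}


if __name__ == "__main__":
    status = parse_ha_status(SAMPLE)
    print(status["fields"]["Mode"])
    for unit in status["members"]:
        print(unit["role"], unit["hostname"], unit["cluster_index"])
```

The first entry in `members` is the unit that you are logged into, because the display always lists that unit first.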

The following command output was produced by using execute ha manage 0 to log into the subordinate unit CLI of the cluster shown in the previous example. The host name of the subordinate unit is 5001_Slot_3.

Model: 5000

Mode: a-a

Group: 0

Debug: 0

ses_pickup: disable

load_balance: disable

schedule: round robin

Slave :100 5001_Slot_3 FG50012205400050 0

Master:128 5001_Slot_4 FG50012204400045 1

number of vcluster: 1

vcluster 1: work 169.254.0.2

Slave :1 FG50012205400050

Master:0 FG50012204400045

The following example shows get system ha status output for a cluster of three FortiGate-5001 units operating in active-passive mode. The cluster group ID is set to 20 and session pickup is enabled. Load balance all and the load balancing schedule are set to the default values. The device priority of the primary unit is set to 200. The host name of the primary unit is 5001_Slot_5. The subordinate units have host names 5001_Slot_3 and 5001_Slot_4 and device priorities of 100 and 128, respectively.

Model: 5000

Mode: a-p

Group: 20

Debug: 0

ses_pickup: enable

load_balance: disable

schedule: round robin

Master:200 5001_Slot_5 FG50012206400112 0

Slave :100 5001_Slot_3 FG50012205400050 1

Slave :128 5001_Slot_4 FG50012204400045 2

number of vcluster: 1

vcluster 1: work 169.254.0.1

Master:0 FG50012206400112

Slave :1 FG50012204400045

Slave :2 FG50012205400050

The following example shows get system ha status output for a cluster of two FortiGate-5001 units with virtual clustering enabled. This command output was produced by logging into the primary unit for virtual cluster 1 (host name 5001_Slot_4, serial number FG50012204400045).

The virtual clustering output shows that the cluster unit with host name 5001_Slot_4 and serial number FG50012204400045 is operating as the primary unit for virtual cluster 1 and the subordinate unit for virtual cluster 2.

For virtual cluster 1 the cluster unit that you have logged into is operating in the work state and the serial number of the primary unit for virtual cluster 1 is FG50012204400045. For virtual cluster 2 the cluster unit that you have logged into is operating in the standby state and the serial number of the primary unit for virtual cluster 2 is FG50012205400050.

Model: 5000

Mode: a-p

Group: 20

Debug: 0

ses_pickup: enable

load_balance: disable

schedule: round robin

Master:128 5001_Slot_4 FG50012204400045 1

Slave :100 5001_Slot_3 FG50012205400050 0

number of vcluster: 2

vcluster 1: work 169.254.0.2

Master:0 FG50012204400045

Slave :1 FG50012205400050

vcluster 2: standby 169.254.0.1

Slave :1 FG50012204400045

Master:0 FG50012205400050
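To check from a script which unit is the primary unit in each virtual cluster, you can parse the vcluster lines of the display. The following Python sketch assumes the layout shown in the example above. The names `parse_vclusters` and `primary_serials` are hypothetical helpers, not CLI features.

```python
import re

# "vcluster 1: work 169.254.0.2" -> vcluster number, HA state, heartbeat IP
VCLUSTER_RE = re.compile(r"^vcluster\s+(\d+)\s*:\s*(\w+)\s+(\S+)$")
# "Master:0 FG50012204400045" -> role, index shown on the line, serial number
UNIT_RE = re.compile(r"^(Master|Slave)\s*:\s*(\d+)\s+(\S+)$")

SAMPLE = """\
number of vcluster: 2
vcluster 1: work 169.254.0.2
Master:0 FG50012204400045
Slave :1 FG50012205400050
vcluster 2: standby 169.254.0.1
Slave :1 FG50012204400045
Master:0 FG50012205400050
"""


def parse_vclusters(text: str) -> dict:
    """Return {vcluster number: {"state", "heartbeat_ip", "units"}}."""
    vclusters = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        match = VCLUSTER_RE.match(line)
        if match:
            current = {
                "state": match.group(2),
                "heartbeat_ip": match.group(3),
                "units": [],
            }
            vclusters[int(match.group(1))] = current
            continue
        match = UNIT_RE.match(line)
        # Unit lines only count once a "vcluster N:" line has been seen.
        if match and current is not None:
            current["units"].append({
                "role": match.group(1),
                "index": int(match.group(2)),
                "serial": match.group(3),
            })
    return vclusters


def primary_serials(vclusters: dict) -> dict:
    """Map each vcluster number to the serial number on its Master line."""
    return {
        number: next(u["serial"] for u in vc["units"] if u["role"] == "Master")
        for number, vc in vclusters.items()
    }


if __name__ == "__main__":
    parsed = parse_vclusters(SAMPLE)
    for number, vc in parsed.items():
        print(number, vc["state"], vc["heartbeat_ip"])
    print(primary_serials(parsed))
```

For the example above, the sketch reports the work state for virtual cluster 1 and the standby state for virtual cluster 2, and a different primary unit serial number for each virtual cluster.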

The following example shows get system ha status output for the same cluster as shown in the previous example after using execute ha manage 0 to log into the primary unit for virtual cluster 2 (host name 5001_Slot_3, serial number FG50012205400050).

Model: 5000

Mode: a-p

Group: 20

Debug: 0

ses_pickup: enable

load_balance: disable

schedule: round robin

Slave :100 5001_Slot_3 FG50012205400050 0

Master:128 5001_Slot_4 FG50012204400045 1

number of vcluster: 2

vcluster 1: standby 169.254.0.2

Slave :1 FG50012205400050

Master:0 FG50012204400045

vcluster 2: work 169.254.0.1

Master:0 FG50012205400050

Slave :1 FG50012204400045

The following example shows get system ha status output for a virtual cluster configuration where the cluster unit with host name 5001_Slot_4 and serial number FG50012204400045 is the primary unit for both virtual clusters. This command output was produced by logging into the cluster unit with host name 5001_Slot_4 and serial number FG50012204400045.

Model: 5000

Mode: a-p

Group: 20

Debug: 0

ses_pickup: enable

load_balance: disable

schedule: round robin

Master:128 5001_Slot_4 FG50012204400045 1

Slave :100 5001_Slot_3 FG50012205400050 0

number of vcluster: 2

vcluster 1: work 169.254.0.2

Master:0 FG50012204400045

Slave :1 FG50012205400050

vcluster 2: work 169.254.0.2

Master:0 FG50012204400045

Slave :1 FG50012205400050

About the HA cluster index and the execute ha manage command

When a cluster starts up, the FortiGate Cluster Protocol (FGCP) assigns a cluster index and an HA heartbeat IP address to each cluster unit based on the serial number of the cluster unit. The FGCP selects the cluster unit with the highest serial number to become the primary unit. The FGCP assigns a cluster index of 0 and an HA heartbeat IP address of 169.254.0.1 to this unit. The FGCP assigns a cluster index of 1 and an HA heartbeat IP address of 169.254.0.2 to the cluster unit with the second highest serial number. If the cluster contains more units, the cluster unit with the third highest serial number is assigned a cluster index of 2 and an HA heartbeat IP address of 169.254.0.3, and so on. You can display the cluster index assigned to each cluster unit using the get system ha status command. Also, when you use the execute ha manage command, you select a cluster unit to log into by entering its cluster index.

The cluster index and HA heartbeat IP address only change if a unit leaves the cluster or if a new unit joins the cluster. When one of these events happens, the FGCP resets the cluster index and HA heartbeat IP address of each cluster unit according to serial number in the same way as when the cluster first starts up.
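The assignment rule described above, and the renumbering that follows a membership change, can be sketched in Python. This is an illustration rather than the FGCP implementation: the function name `assign_cluster_indexes` is hypothetical, and comparing serial numbers as plain strings is an assumption, because the text does not say how the FGCP compares them.

```python
from ipaddress import IPv4Address

# Heartbeat address given to the unit with cluster index 0.
HEARTBEAT_BASE = IPv4Address("169.254.0.1")


def assign_cluster_indexes(serials):
    """Map each serial number to (cluster index, HA heartbeat IP address).

    Assumption: serial numbers are compared as strings, highest first.
    """
    ordered = sorted(serials, reverse=True)
    return {
        serial: (index, str(HEARTBEAT_BASE + index))
        for index, serial in enumerate(ordered)
    }


if __name__ == "__main__":
    cluster = ["FG50012204400045", "FG50012206400112", "FG50012205400050"]
    print(assign_cluster_indexes(cluster))

    # A unit leaves the cluster, so every remaining unit is renumbered.
    cluster.remove("FG50012206400112")
    print(assign_cluster_indexes(cluster))
```

In the second call, the unit with serial number FG50012205400050 moves from cluster index 1 to cluster index 0 because it now has the highest serial number in the cluster.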

Each cluster unit keeps its assigned cluster index and HA heartbeat IP address even as the units take on different roles in the cluster. After the initial cluster index and HA heartbeat IP addresses are set according to serial number, the FGCP checks other primary unit selection criteria such as device priority and monitored interfaces. Checking these criteria could result in selecting a cluster unit without the highest serial number to operate as the primary unit.

Even if a cluster unit without the highest serial number becomes the primary unit, the cluster indexes and HA heartbeat IP addresses assigned to the individual cluster units do not change. Instead, the FGCP assigns a second cluster index, which could be called the operating cluster index, to reflect this role change. The operating cluster index is 0 for the primary unit and 1 and higher for the other units in the cluster. By default, both sets of cluster indexes are the same. However, if primary unit selection chooses a cluster unit that does not have the highest serial number, that cluster unit is assigned an operating cluster index of 0. The operating cluster index is used by the FGCP only. You can display the operating cluster index assigned to each cluster unit using the get system ha status command. There are no CLI commands that reference the operating cluster index.

Even though there are two cluster indexes, there is only one HA heartbeat IP address, and the HA heartbeat address is not affected by a change in the operating cluster index.
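The relationship between the two sets of indexes can be illustrated with a short Python sketch. The function name `operating_indexes` is hypothetical. The text only states that the primary unit gets operating cluster index 0 and the other units get 1 and higher, so numbering the remaining units in cluster index order is an assumption made for this sketch.

```python
def operating_indexes(cluster_index_by_serial: dict, primary_serial: str) -> dict:
    """Return {serial number: operating cluster index}.

    The primary unit gets 0. Assumption: the other units are numbered
    from 1 upward in the order of their cluster index.
    """
    if primary_serial not in cluster_index_by_serial:
        raise KeyError(f"{primary_serial} is not a cluster member")
    others = sorted(
        (s for s in cluster_index_by_serial if s != primary_serial),
        key=cluster_index_by_serial.get,
    )
    result = {primary_serial: 0}
    for op_index, serial in enumerate(others, start=1):
        result[serial] = op_index
    return result


if __name__ == "__main__":
    # Cluster indexes assigned by serial number (highest serial number is 0).
    cluster_indexes = {"FG50012205400050": 0, "FG50012204400045": 1}

    # Default case: the unit with the highest serial number is the primary.
    print(operating_indexes(cluster_indexes, "FG50012205400050"))

    # The other unit wins primary unit selection, for example because it
    # has a higher device priority. The cluster indexes stay the same.
    print(operating_indexes(cluster_indexes, "FG50012204400045"))
```

In the second case the unit with serial number FG50012204400045 keeps cluster index 1 but has operating cluster index 0.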

Using the execute ha manage command

When you use the CLI command execute ha manage <index_integer> to connect to the CLI of another cluster unit, the <index_integer> that you enter is the cluster index of the unit that you want to connect to.

Using get system ha status to display cluster indexes

You can display the cluster index assigned to each cluster unit using the CLI command get system ha status. The following example shows the information displayed by the get system ha status command for a cluster consisting of two FortiGate-5001SX units operating in active-passive HA mode with virtual domains not enabled and without virtual clustering.

get system ha status

Model: 5000

Mode: a-p

Group: 0

Debug: 0

ses_pickup: disable

Master:128 5001_slot_7 FG50012205400050 0

Slave :128 5001_slot_11 FG50012204400045 1

number of vcluster: 1

vcluster 1: work 169.254.0.1

Master:0 FG50012205400050

Slave :1 FG50012204400045

In this example, the cluster unit with serial number FG50012205400050 has the highest serial number and so has a cluster index of 0 and the cluster unit with serial number FG50012204400045 has a cluster index of 1. From the CLI of the primary (or master) unit of this cluster you can connect to the CLI of the subordinate (or slave) unit using the following command:

execute ha manage 1

This works because the cluster unit with serial number FG50012204400045 has a cluster index of 1.
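When scripting this step, you can look up the cluster index by serial number in the Master and Slave lines before building the command. The following Python sketch uses the values from the example above. The function name `ha_manage_command` and the `MEMBERS` structure are hypothetical, and the sketch only builds the command string without sending it to a FortiGate unit.

```python
# Values taken from the Master and Slave lines of the example output.
MEMBERS = [
    {"role": "Master", "hostname": "5001_slot_7",
     "serial": "FG50012205400050", "cluster_index": 0},
    {"role": "Slave", "hostname": "5001_slot_11",
     "serial": "FG50012204400045", "cluster_index": 1},
]


def ha_manage_command(members, serial: str) -> str:
    """Build the execute ha manage command for the unit with this serial number."""
    for unit in members:
        if unit["serial"] == serial:
            # execute ha manage takes the cluster index, not the
            # operating cluster index.
            return f"execute ha manage {unit['cluster_index']}"
    raise LookupError(f"no cluster unit with serial number {serial}")


if __name__ == "__main__":
    print(ha_manage_command(MEMBERS, "FG50012204400045"))
```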