HA and failover protection

In FortiGate active-passive HA, the FortiGate Clustering Protocol (FGCP) provides failover protection. This means that an active-passive cluster can provide FortiGate services even when one of the cluster units encounters a problem that would result in complete loss of connectivity for a standalone FortiGate unit. This failover protection provides a backup mechanism that can be used to reduce the risk of unexpected downtime, especially in a mission-critical environment.

The FGCP supports three kinds of failover protection. Device failover automatically replaces a failed device and restarts traffic flow with minimal impact on the network. Link failover maintains traffic flow if a link fails. Session failover resumes communication sessions with minimal loss of data if a device or link failover occurs.

This chapter describes how FGCP failover protection works and provides detailed NAT/Route and Transparent mode packet flow descriptions.

About active-passive failover

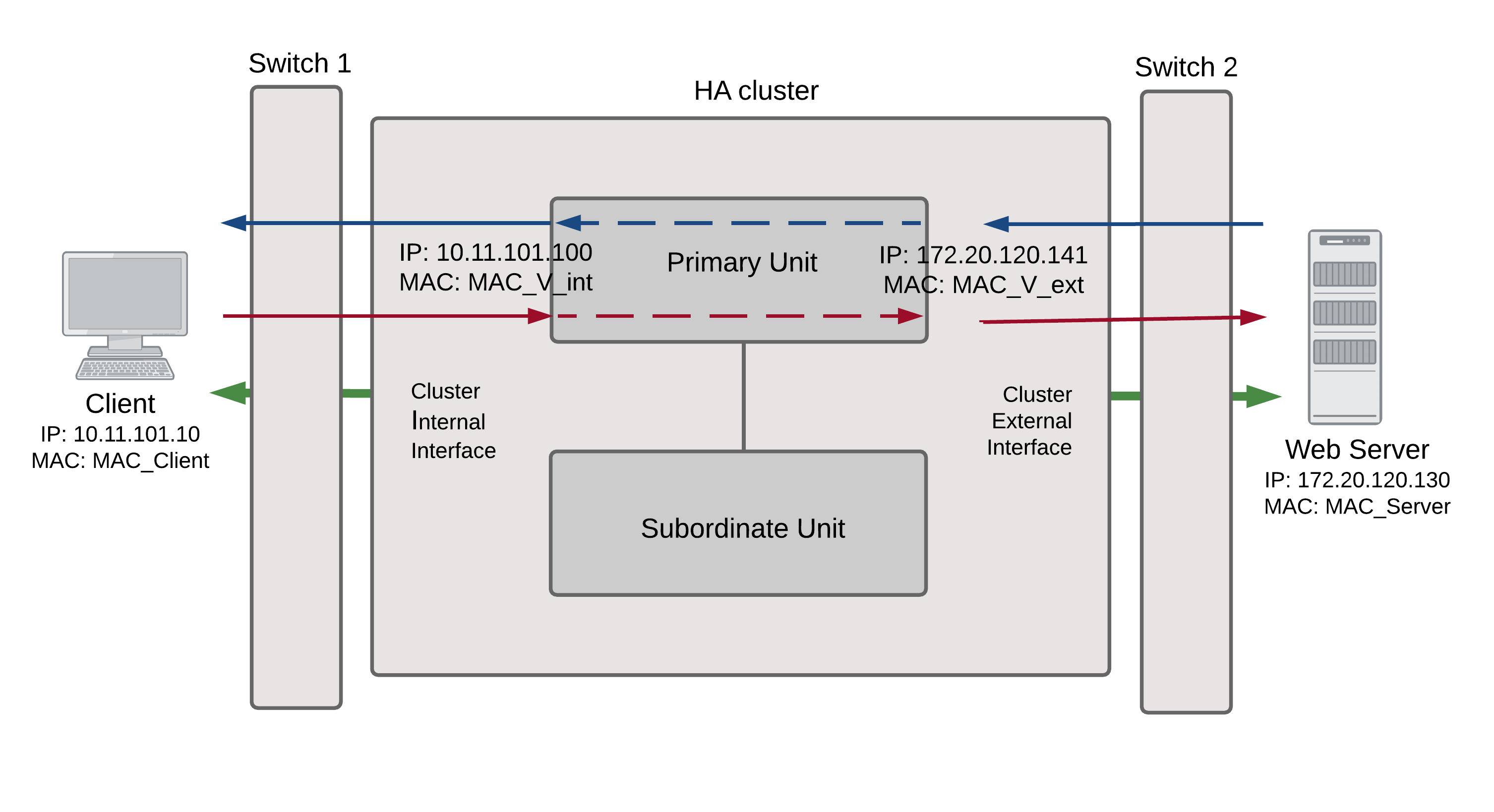

To achieve failover protection in an active-passive cluster, one of the cluster units functions as the primary unit, while the rest of the cluster units are subordinate units, operating in an active stand-by mode. The cluster IP addresses and HA virtual MAC addresses are associated with the cluster interfaces of the primary unit. All traffic directed at the cluster is actually sent to and processed by the primary unit.

While the cluster is functioning, the primary unit functions as the FortiGate network security device for the networks that it is connected to. In addition, the primary unit and subordinate units use the HA heartbeat to keep in constant communication. The subordinate units report their status to the primary unit and receive and store connection and state table updates.

Device failure

If the primary unit encounters a problem that is severe enough to cause it to fail, the remaining cluster units negotiate to select a new primary unit. This occurs because all of the subordinate units are constantly waiting to negotiate to become primary units. Only the heartbeat packets sent by the primary unit keep the subordinate units from becoming primary units. Each received heartbeat packet resets the negotiation timers in the subordinate units. If these timers run out because the subordinate units do not receive heartbeat packets from the primary unit, the subordinate units assume that the primary unit has failed, and negotiate to become primary units themselves.

Using the same FGCP negotiation process that occurs when the cluster starts up, after they determine that the primary unit has failed, the subordinate units negotiate amongst themselves to select a new primary unit. The subordinate unit that wins the negotiation becomes the new primary unit with the same MAC and IP addresses as the former primary unit. The new primary unit then sends gratuitous ARP packets out all of its interfaces to inform attached switches to send traffic to the new primary unit. Sessions then resume with the new primary unit.
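The timer behavior described above can be sketched as a small model. This is an illustrative sketch only, not FGCP code: the class name, method names, and the use of a single millisecond timer are assumptions made for the example, and the 1200 ms timeout is simply the default detection time (6 heartbeats at 200 ms) described later in this chapter.

```python
class SubordinateUnit:
    """Toy model of a subordinate unit's negotiation timer (times in ms).

    Hypothetical names; this does not reflect FGCP internals.
    """

    def __init__(self, timeout_ms: int) -> None:
        self.timeout_ms = timeout_ms    # assumed time allowed without a heartbeat
        self.last_heartbeat_ms = 0      # arrival time of the most recent heartbeat

    def receive_heartbeat(self, now_ms: int) -> None:
        # Each heartbeat received from the primary unit resets the timer.
        self.last_heartbeat_ms = now_ms

    def should_negotiate(self, now_ms: int) -> bool:
        # If the timer has run out, assume the primary unit has failed.
        return now_ms - self.last_heartbeat_ms >= self.timeout_ms


unit = SubordinateUnit(timeout_ms=1200)
for t in range(0, 1000, 200):           # heartbeats arrive at 0, 200, ..., 800 ms
    unit.receive_heartbeat(t)

print(unit.should_negotiate(1000))      # False: last heartbeat was 200 ms ago
print(unit.should_negotiate(2000))      # True: 1200 ms have passed with no heartbeat
```

As long as heartbeats keep arriving, the timer never expires and the unit stays subordinate, which is the behavior the text describes.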

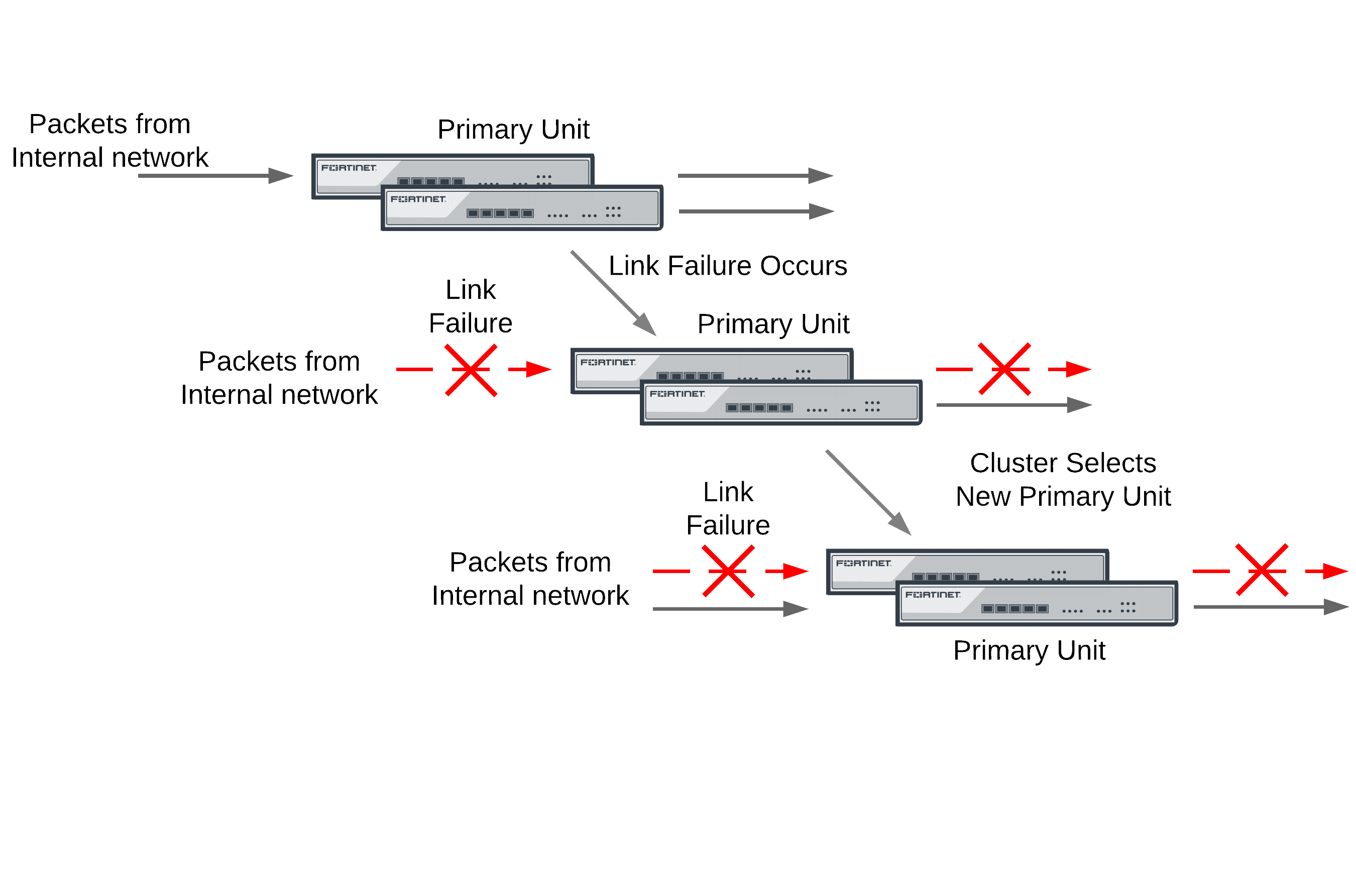

Link failure

If a primary unit interface fails or is disconnected while a cluster is operating, a link failure occurs. When a link failure occurs, the cluster units negotiate to select a new primary unit. Since the primary unit has not stopped operating, it participates in the negotiation. The link failure means that a new primary unit must be selected and the cluster unit with the link failure joins the cluster as a subordinate unit.

Just as for a device failover, the new primary unit sends gratuitous ARP packets out all of its interfaces to inform attached switches to send traffic to it. Sessions then resume with the new primary unit.

If a subordinate unit experiences a link failure, its status in the cluster does not change. However, in future negotiations a cluster unit with a link failure is unlikely to become the primary unit.

Session failover

If you enable session failover (also called session pickup) for the cluster, during cluster operation the primary unit informs the subordinate units of changes to the primary unit connection and state tables, keeping the subordinate units up-to-date with the traffic currently being processed by the cluster.

After a failover the new primary unit recognizes open sessions that were being handled by the cluster. The sessions continue to be processed by the new primary unit and are handled according to their last known state.

If you leave session pickup disabled, the cluster does not keep track of sessions and after a failover, active sessions have to be restarted or resumed.

Primary unit recovery

If a primary unit recovers after a device or link failure, it will operate as a subordinate unit, unless the override CLI keyword is enabled and its device priority is set higher than the device priority of the other cluster units (see HA override).

About active-active failover

HA failover in a cluster running in active-active mode is similar to active-passive failover described above. Active-active subordinate units are constantly waiting to negotiate to become primary units and, if session failover is enabled, continuously receive connection state information from the primary unit. If the primary unit fails, or one of the primary unit interfaces fails, the cluster units use the same mechanisms to detect the failure and to negotiate to select a new primary unit. If session failover is enabled, the new primary unit also maintains communication sessions through the cluster using the shared connection state table.

Active-active HA load balances sessions among all cluster units. For session failover, the cluster must maintain all of these sessions. To load balance sessions, the functioning cluster uses a load balancing schedule to distribute sessions to all cluster units. The shared connection state table tracks the communication sessions being processed by all cluster units (not just the primary unit). After a failover, the new primary unit uses the load balancing schedule to re-distribute all of the communication sessions recorded in the shared connection state table among all of the remaining cluster units. The connections continue to be processed by the cluster, but possibly by a different cluster unit, and are handled according to their last known state.

Device failover

The FGCP provides transparent device failover. Device failover is a basic requirement of any highly available system. Device failover means that if a device fails, a replacement device automatically takes the place of the failed device and continues operating in the same manner as the failed device.

In the case of FortiOS HA, the device is the primary unit. If the primary unit fails, device failover ensures that one of the subordinate units in the cluster automatically takes the place of the primary unit and can continue processing network traffic in the same way as the failed primary unit.

Device failover does not maintain communication sessions. After a device failover, communication sessions have to be restarted. To maintain communication sessions, you must enable session failover. See Session failover (session pick-up).

FortiGate HA device failover is supported by the HA heartbeat, virtual MAC addresses, configuration synchronization, route synchronization and IPsec VPN SA synchronization.

The HA heartbeat makes sure that the subordinate units detect a primary unit failure. If the primary unit fails to respond on time to HA heartbeat packets the subordinate units assume that the primary unit has failed and negotiate to select a new primary unit.

The new primary unit takes the place of the failed primary unit and continues functioning in the same way as the failed primary unit. For the new primary unit to continue functioning like the failed primary unit, the new primary unit must be able to reconnect to network devices and the new primary unit must have the same configuration as the failed primary unit.

FortiGate HA uses virtual MAC addresses to reconnect the new primary unit to network devices. The FGCP causes the new primary unit interfaces to acquire the same virtual MAC addresses as the failed primary unit. As a result, the new primary unit has the same network identity as the failed primary unit.

The new primary unit interfaces have different physical connections than the failed primary unit. Both the failed and the new primary unit interfaces are connected to the same switches, but the new primary unit interfaces are connected to different ports on these switches. To make sure that the switches send packets to the new primary unit, the new primary unit interfaces send gratuitous ARP packets to the connected switches. These gratuitous ARP packets notify the switches that the primary unit MAC and IP addresses are on different switch ports and cause the switches to send packets to the ports connected to the new primary unit. In this way, the new primary unit continues to receive packets that would otherwise have been sent to the failed primary unit.

Configuration synchronization means that the new primary unit always has the same configuration as the failed primary unit. As a result the new primary unit operates in exactly the same way as the failed primary unit. If configuration synchronization were not available the new primary unit may not process network traffic in the same way as the failed primary unit.

Kernel routing table synchronization synchronizes the primary unit kernel routing table to all subordinate units so that after a failover the new primary unit does not have to form a completely new routing table. IPsec VPN SA synchronization synchronizes IPsec VPN security associations (SAs) and other IPsec session data so that after a failover the new primary unit can resume IPsec tunnels without having to establish new SAs.

HA heartbeat and communication between cluster units

The HA heartbeat keeps cluster units communicating with each other. The heartbeat consists of hello packets that are sent at regular intervals by the heartbeat interface of all cluster units. These hello packets describe the state of the cluster unit and are used by other cluster units to keep all cluster units synchronized.

HA heartbeat packets are non-TCP packets that use Ethertype values 0x8890, 0x8891, and 0x8893. The default time interval between HA heartbeats is 200 ms. The FGCP uses link-local IPv4 addresses in the 169.254.0.x range for HA heartbeat interface IP addresses.

For best results, isolate the heartbeat devices from your user networks by connecting the heartbeat devices to a separate switch that is not connected to any network. If the cluster consists of two FortiGate units you can connect the heartbeat device interfaces directly using a crossover cable. Heartbeat packets contain sensitive information about the cluster configuration. Heartbeat packets may also use a considerable amount of network bandwidth. For these reasons, it is preferable to isolate heartbeat packets from your user networks.

On startup, a FortiGate unit configured for HA operation broadcasts HA heartbeat hello packets from its HA heartbeat interface to find other FortiGate units configured to operate in HA mode. If two or more FortiGate units operating in HA mode connect with each other, they compare HA configurations (HA mode, HA password, and HA group ID). If the HA configurations match, the units negotiate to form a cluster.

While the cluster is operating, the HA heartbeat confirms that all cluster units are functioning normally. The heartbeat also reports the state of all cluster units, including the communication sessions that they are processing.

Heartbeat interfaces

A heartbeat interface is an Ethernet network interface in a cluster that is used by the FGCP for HA heartbeat communications between cluster units.

To change the HA heartbeat configuration go to System > Config > HA and select the FortiGate interfaces to use as HA heartbeat interfaces.

Do not use a switch port for the HA heartbeat traffic. This configuration is not supported.

From the CLI enter the following command to make port4 and port5 HA heartbeat interfaces and give both interfaces a heartbeat priority of 150:

config system ha

set hbdev port4 150 port5 150

end

The following example shows how to change the default heartbeat interface configuration so that the port4 and port1 interfaces can be used for HA heartbeat communication and to give the port4 interface the highest heartbeat priority so that port4 is the preferred HA heartbeat interface.

config system ha

set hbdev port4 100 port1 50

end

By default, for most FortiGate models two interfaces are configured to be heartbeat interfaces. You can change the heartbeat interface configuration as required. For example you can select additional or different heartbeat interfaces. You can also select only one heartbeat interface.

In addition to selecting the heartbeat interfaces, you also set the Priority for each heartbeat interface. In all cases, the heartbeat interface with the highest priority is used for all HA heartbeat communication. If the interface fails or becomes disconnected, the selected heartbeat interface that has the next highest priority handles all heartbeat communication.

If more than one heartbeat interface has the same priority, the heartbeat interface with the highest priority that is also highest in the heartbeat interface list is used for all HA heartbeat communication. If this interface fails or becomes disconnected, the selected heartbeat interface with the highest priority that is next highest in the list handles all heartbeat communication.

The default heartbeat interface configuration sets the priority of two heartbeat interfaces to 50. You can accept the default heartbeat interface configuration if one or both of the default heartbeat interfaces are connected. You can select different heartbeat interfaces, select more heartbeat interfaces and change heartbeat priorities according to your requirements.

For the HA cluster to function correctly, you must select at least one heartbeat interface, and this interface must be connected together on all of the cluster units. If heartbeat communication is interrupted and cannot fail over to a second heartbeat interface, the cluster units will not be able to communicate with each other and more than one cluster unit may become a primary unit. As a result, the cluster stops functioning normally because multiple devices on the network may be operating as primary units with the same IP and MAC addresses, creating a kind of split-brain scenario.

The heartbeat interface priority range is 0 to 512. The default priority when you select a new heartbeat interface is 0. The higher the number the higher the priority.

In most cases you can maintain the default heartbeat interface configuration as long as you can connect the heartbeat interfaces together. Configuring HA heartbeat interfaces is the same for virtual clustering and for standard HA clustering.

You can enable heartbeat communications for physical interfaces, but not for VLAN subinterfaces, IPsec VPN interfaces, redundant interfaces, or for 802.3ad aggregate interfaces. You cannot select these types of interfaces in the heartbeat interface list.

Selecting more heartbeat interfaces increases reliability. If a heartbeat interface fails or is disconnected, the HA heartbeat fails over to the next heartbeat interface.

You can select up to 8 heartbeat interfaces. This limit only applies to FortiGate units with more than 8 physical interfaces.

HA heartbeat traffic can use a considerable amount of network bandwidth. If possible, enable HA heartbeat traffic on interfaces used only for HA heartbeat traffic or on interfaces connected to less busy networks.

Connecting HA heartbeat interfaces

For most FortiGate models if you do not change the heartbeat interface configuration, you can isolate the default heartbeat interfaces of all of the cluster units by connecting them all to the same switch. Use one switch per heartbeat interface. If the cluster consists of two units you can connect the heartbeat interfaces together using crossover cables.

HA heartbeat and data traffic are supported on the same cluster interface. In NAT/Route mode, if you decide to use heartbeat interfaces for processing network traffic or for a management connection, you can assign the interface any IP address. This IP address does not affect HA heartbeat traffic.

In Transparent mode, you can connect the heartbeat interface to your network and enable management access. You would then establish a management connection to the interface using the Transparent mode management IP address. This configuration does not affect HA heartbeat traffic.

Heartbeat packets and heartbeat interface selection

HA heartbeat hello packets are constantly sent by all of the enabled heartbeat interfaces. Using these hello packets, each cluster unit confirms that the other cluster units are still operating. The FGCP selects one of the heartbeat interfaces to be used for communication between the cluster units. The FGCP selects the heartbeat interface for heartbeat communication based on the linkfail states of the heartbeat interfaces, on the priority of the heartbeat interfaces, and on the interface index.

The FGCP checks the linkfail state of all heartbeat interfaces to determine which ones are connected. The FGCP selects one of these connected heartbeat interfaces to be the one used for heartbeat communication. The FGCP selects the connected heartbeat interface with the highest priority for heartbeat communication.

If more than one connected heartbeat interface has the highest priority the FGCP selects the heartbeat interface with the lowest interface index. The web-based manager lists the FortiGate unit interfaces in alphabetical order. This order corresponds to the interface index order with lowest index at the top and highest at the bottom. If more than one heartbeat interface has the highest priority, the FGCP selects the interface that is highest in the heartbeat interface list (or first in alphabetical order) for heartbeat communication.

If the interface that is processing heartbeat traffic fails or becomes disconnected, the FGCP uses the same criteria to select another heartbeat interface for heartbeat communication. If the original heartbeat interface is fixed or reconnected, the FGCP again selects this interface for heartbeat communication.
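The selection rules above (connected interfaces only, then highest priority, then lowest interface index) can be sketched as follows. This is a minimal illustration of the criteria as stated in the text, not the FGCP implementation; the `HeartbeatInterface` type, the `select_heartbeat_interface` function, and the index values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HeartbeatInterface:
    name: str
    priority: int    # higher priority is preferred
    index: int       # interface index (assumed values); lower wins a priority tie
    link_up: bool    # False models a linkfail state


def select_heartbeat_interface(
    interfaces: List[HeartbeatInterface],
) -> Optional[HeartbeatInterface]:
    # Only connected interfaces are candidates.
    connected = [i for i in interfaces if i.link_up]
    if not connected:
        return None
    # Highest priority first; on a tie, the lowest interface index.
    return min(connected, key=lambda i: (-i.priority, i.index))


port4 = HeartbeatInterface("port4", priority=100, index=4, link_up=True)
port1 = HeartbeatInterface("port1", priority=50, index=1, link_up=True)

print(select_heartbeat_interface([port4, port1]).name)   # port4

port4.link_up = False                                     # port4 is disconnected
print(select_heartbeat_interface([port4, port1]).name)   # port1

port4.link_up = True                                      # port4 is reconnected
print(select_heartbeat_interface([port4, port1]).name)   # port4
```

Because the same function is re-evaluated whenever link state changes, a repaired higher-priority interface is selected again, matching the behavior described above.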

The HA heartbeat communicates cluster session information, synchronizes the cluster configuration, synchronizes the cluster kernel routing table, and reports individual cluster member status. The HA heartbeat constantly communicates HA status information to make sure that the cluster is operating properly.

Interface index and display order

The web-based manager and CLI display interface names in alphanumeric order. For example, the sort order for a FortiGate unit with 10 interfaces (named port1 through port10) places port10 at the bottom of the list:

- port1

- port2 through port9

- port10

However, interfaces are indexed in hash map order, rather than purely in alphabetical order or purely by comparing interface numbers. As a result, the list is sorted primarily alphabetically by interface name (for example, base1 is before port1), then secondarily by index number:

- port1

- port10

- port2 through port9
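The two orderings listed above can be reproduced with a short sketch. It only illustrates the difference between a number-aware sort and a plain string sort; it makes no claim about how FortiOS computes interface indexes, and the `natural_key` helper is a name introduced for this example.

```python
import re

names = [f"port{n}" for n in range(1, 11)]   # port1 through port10


def natural_key(name: str):
    # Split into text and digit runs so that "port10" sorts after "port9".
    return [int(part) if part.isdigit() else part
            for part in re.split(r"(\d+)", name)]


display_order = sorted(names, key=natural_key)   # number-aware ordering
string_order = sorted(names)                     # plain character-by-character ordering

print(display_order[-1])    # port10
print(string_order[:3])     # ['port1', 'port10', 'port2']
```

In the plain string ordering, port10 lands immediately after port1, which matches the second list above.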

HA heartbeat interface IP addresses

The FGCP uses link-local IPv4 addresses (RFC 3927) in the 169.254.0.x range for HA heartbeat interface IP addresses and for inter-VDOM link interface IP addresses. When a cluster initially starts up, the primary unit heartbeat interface IP address is 169.254.0.1. Subordinate units are assigned heartbeat interface IP addresses in the range 169.254.0.2 to 169.254.0.63. HA inter-VDOM link interfaces on the primary unit are assigned IP addresses 169.254.0.65 and 169.254.0.66.

The ninth line of the following CLI command output shows the HA heartbeat interface IP address of the primary unit.

get system ha status

Model: 620

Mode: a-p

Group: 0

Debug: 0

ses_pickup: disable

Master:150 head_office_upper FG600B3908600825 1

Slave :150 head_office_lower FG600B3908600705 0

number of vcluster: 1

vcluster 1: work 169.254.0.1

Master:0 FG600B3908600825

Slave :1 FG600B3908600705

You can also use the execute traceroute command from the subordinate unit CLI to display HA heartbeat IP addresses and the HA inter-VDOM link IP addresses. For example, use execute ha manage 1 to connect to the subordinate unit CLI and then enter the following command to trace the route to an IP address on your network:

execute traceroute 172.20.20.10

traceroute to 172.20.20.10 (172.20.20.10), 32 hops max, 72 byte packets

1 169.254.0.1 0 ms 0 ms 0 ms

2 169.254.0.66 0 ms 0 ms 0 ms

3 172.20.20.10 0 ms 0 ms 0 ms
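When reading output like the traceroute above, it can help to map each 169.254.0.x hop to its role. The following sketch encodes only the address ranges stated in this section; the function name and the returned labels are assumptions made for illustration.

```python
import ipaddress

FGCP_NET = ipaddress.ip_network("169.254.0.0/24")


def classify_ha_address(addr: str) -> str:
    """Label an address using the ranges described in this section."""
    ip = ipaddress.ip_address(addr)
    if ip not in FGCP_NET:
        return "not in the 169.254.0.x range"
    host = int(ip) & 0xFF                 # last octet of the address
    if host == 1:
        return "primary unit heartbeat interface (at cluster startup)"
    if 2 <= host <= 63:
        return "subordinate unit heartbeat interface"
    if host in (65, 66):
        return "HA inter-VDOM link interface"
    return "169.254.0.x address with no role described here"


for hop in ("169.254.0.1", "169.254.0.66", "172.20.20.10"):
    print(hop, "->", classify_ha_address(hop))
```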

Both HA heartbeat and data traffic are supported on the same FortiGate interface. All heartbeat communication takes place on a separate VDOM called vsys_ha. Heartbeat traffic uses a virtual interface called port_ha in the vsys_ha VDOM. Data and heartbeat traffic use the same physical interface, but they’re logically separated into separate VDOMs.

Heartbeat packet Ethertypes

Normal IP packets are 802.3 packets that have an Ethernet type (Ethertype) field value of 0x0800. Ethertype values other than 0x0800 are understood as layer-2 frames rather than IP packets.

By default, HA heartbeat packets use the following Ethertypes:

- HA heartbeat packets for NAT/Route mode clusters use Ethertype 0x8890. These packets are used by cluster units to find other cluster units and to verify the status of other cluster units while the cluster is operating. You can change the Ethertype of these packets using the ha-eth-type option of the config system ha command.

- HA heartbeat packets for Transparent mode clusters use Ethertype 0x8891. These packets are used by cluster units to find other cluster units and to verify the status of other cluster units while the cluster is operating. You can change the Ethertype of these packets using the hc-eth-type option of the config system ha command.

- HA telnet sessions between cluster units over HA heartbeat links use Ethertype 0x8893. The telnet sessions are used to synchronize the cluster configurations. Telnet sessions are also used when an administrator uses the execute ha manage command to connect from one cluster unit CLI to another. You can change the Ethertype of these packets using the l2ep-eth-type option of the config system ha command.

Because heartbeat packets are recognized as layer-2 frames, the switches and routers on your heartbeat network that connect to heartbeat interfaces must be configured to allow them. If layer-2 frames are dropped by these network devices, heartbeat traffic will not be allowed between the cluster units.

Some third-party network equipment may use packets with these Ethertypes for other purposes. For example, Cisco N5K/Nexus switches use Ethertype 0x8890 for some functions. When one of these switches receives Ethertype 0x8890 packets from an attached cluster unit, the switch generates CRC errors and the packets are not forwarded. As a result, FortiGate units connected with these switches cannot form a cluster.

In some cases, if the heartbeat interfaces are connected and configured so that regular traffic flows but heartbeat traffic is not forwarded, you can change the configuration of the switch that connects the HA heartbeat interfaces to allow layer-2 frames with Ethertypes 0x8890, 0x8891, and 0x8893 to pass.

Alternatively, you can use the following CLI options to change the Ethertypes of the HA heartbeat packets:

config system ha

set ha-eth-type <ha_ethertype_4-digit_hex>

set hc-eth-type <hc_ethertype_4-digit_hex>

set l2ep-eth-type <l2ep_ethertype_4-digit_hex>

end

For example, use the following command to change the Ethertype of the HA heartbeat packets from 0x8890 to 0x8895 and to change the Ethertype of HA Telnet session packets from 0x8893 to 0x889f:

config system ha

set ha-eth-type 8895

set l2ep-eth-type 889f

end
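When troubleshooting a switch that drops heartbeat traffic, it can be useful to check which Ethertype a captured frame carries. The sketch below reads the Ethertype field from a raw Ethernet header and labels it using the default values listed in this section. It assumes untagged Ethernet II frames (no 802.1Q VLAN tag) and default Ethertype settings; the function name and labels are introduced here for illustration.

```python
import struct

# Default Ethertypes as listed in this section; they change if
# ha-eth-type, hc-eth-type, or l2ep-eth-type have been reconfigured.
HA_ETHERTYPES = {
    0x8890: "HA heartbeat (NAT/Route mode)",
    0x8891: "HA heartbeat (Transparent mode)",
    0x8893: "HA telnet session",
}


def classify_frame(frame: bytes) -> str:
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")
    # Ethernet header: 6-byte destination MAC, 6-byte source MAC, 2-byte Ethertype.
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype == 0x0800:
        return "IPv4"
    return HA_ETHERTYPES.get(ethertype, f"other (0x{ethertype:04x})")


# Synthetic frames: zeroed MAC addresses followed by the Ethertype.
print(classify_frame(bytes(12) + bytes.fromhex("8890")))   # HA heartbeat (NAT/Route mode)
print(classify_frame(bytes(12) + bytes.fromhex("0800")))   # IPv4
```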

Modifying heartbeat timing

In an HA cluster, if a cluster unit CPU becomes very busy, the cluster unit may not be able to send heartbeat packets on time. If heartbeat packets are not sent on time other units in the cluster may think that the cluster unit has failed and the cluster will experience a failover.

A cluster unit CPU may become very busy if the cluster is subject to a syn flood attack, if network traffic is very heavy, or for other similar reasons. You can use the following CLI commands to configure how the cluster times HA heartbeat packets:

config system ha

set hb-interval <interval_integer>

set hb-lost-threshold <threshold_integer>

set helo-holddown <holddown_integer>

end

Changing the lost heartbeat threshold

The lost heartbeat threshold is the number of consecutive heartbeat packets that are not received from another cluster unit before assuming that the cluster unit has failed. The default value is 6, meaning that if 6 consecutive heartbeat packets are not received from a cluster unit, that cluster unit is considered to have failed. The range is 1 to 60 packets.

If the primary unit does not receive a heartbeat packet from a subordinate unit before the heartbeat threshold expires, the primary unit assumes that the subordinate unit has failed.

If a subordinate unit does not receive a heartbeat packet from the primary unit before the heartbeat threshold expires, the subordinate unit assumes that the primary unit has failed. The subordinate unit then begins negotiating to become the new primary unit.

The lower the hb-lost-threshold the faster a cluster responds when a unit fails. However, sometimes heartbeat packets may not be sent because a cluster unit is very busy. This can lead to a false positive failure detection. To reduce these false positives you can increase the hb-lost-threshold.

Use the following CLI command to increase the lost heartbeat threshold to 12:

config system ha

set hb-lost-threshold 12

end

Changing the heartbeat interval

The heartbeat interval is the time between sending HA heartbeat packets. The interval is set in units of 100 ms and the range is 1 to 20. The default heartbeat interval is 2 (200 ms).

A heartbeat interval of 2 means the time between heartbeat packets is 200 ms. Changing the heartbeat interval to 5 changes the time between heartbeat packets to 500 ms (5 * 100ms = 500ms).

The HA heartbeat packets consume more bandwidth if the heartbeat interval is short. But if the heartbeat interval is very long, the cluster is not as sensitive to topology and other network changes.

Use the following CLI command to increase the heartbeat interval to 10:

config system ha

set hb-interval 10

end

The heartbeat interval combines with the lost heartbeat threshold to set how long a cluster unit waits before assuming that another cluster unit has failed and is no longer sending heartbeat packets. By default, if a cluster unit does not receive a heartbeat packet from a cluster unit for 6 * 200 = 1200 milliseconds or 1.2 seconds the cluster unit assumes that the other cluster unit has failed.

You can increase both the heartbeat interval and the lost heartbeat threshold to reduce false positives. For example, increasing the heartbeat interval to 20 and the lost heartbeat threshold to 30 means a failure will be assumed if no heartbeat packets are received after 30 * 2000 milliseconds = 60,000 milliseconds, or 60 seconds.

Use the following CLI command to increase the heartbeat interval to 20 and the lost heartbeat threshold to 30:

config system ha

set hb-lost-threshold 30

set hb-interval 20

end
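The arithmetic in this section can be checked with a small helper. This is a sketch of the calculation as described in the text (threshold multiplied by the interval in units of 100 ms); the function name is hypothetical, and the range checks use the ranges stated above.

```python
def failure_detection_ms(hb_interval: int = 2, hb_lost_threshold: int = 6) -> int:
    """Time without heartbeats, in ms, before a unit is assumed to have failed."""
    if not 1 <= hb_interval <= 20:
        raise ValueError("hb-interval range is 1 to 20 (units of 100 ms)")
    if not 1 <= hb_lost_threshold <= 60:
        raise ValueError("hb-lost-threshold range is 1 to 60 packets")
    return hb_lost_threshold * hb_interval * 100


print(failure_detection_ms())          # 1200  (defaults: 6 x 200 ms)
print(failure_detection_ms(2, 12))     # 2400  (threshold raised to 12)
print(failure_detection_ms(20, 30))    # 60000 (30 x 2000 ms = 60 seconds)
```

Raising either value lengthens the detection time, which reduces false positives at the cost of a slower response to a real failure.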

Changing the time to wait in the hello state

The hello state hold-down time is the number of seconds that a cluster unit waits before changing from hello state to work state. After a failure or when starting up, cluster units operate in the hello state to send and receive heartbeat packets so that all the cluster units can find each other and form a cluster. A cluster unit should change from the hello state to work state after it finds all of the other FortiGate units to form a cluster with. If for some reason all cluster units cannot find each other during the hello state then some cluster units may be joining the cluster after it has formed. This can cause disruptions to the cluster and affect how it operates.

One reason for a delay in all of the cluster units joining the cluster could be that the cluster units are located at different sites, or that communication between the heartbeat interfaces is delayed for some other reason.

If cluster units are joining your cluster after it has started up, or if it takes a while for units to join the cluster, you can increase the time that the cluster units wait in the hello state. The hello state hold-down time range is 5 to 300 seconds. The hello state hold-down time default is 20 seconds.

Use the following CLI command to increase the time to wait in the hello state to 1 minute (60 seconds):

config system ha

set helo-holddown 60

end

Enabling or disabling HA heartbeat encryption and authentication

You can enable HA heartbeat encryption and authentication to encrypt and authenticate HA heartbeat packets. HA heartbeat packets should be encrypted and authenticated if the cluster interfaces that send HA heartbeat packets are also connected to your networks.

If HA heartbeat packets are not encrypted the cluster password and changes to the cluster configuration could be exposed and an attacker may be able to sniff HA packets to get cluster information. Enabling HA heartbeat message authentication prevents an attacker from creating false HA heartbeat messages. False HA heartbeat messages could affect the stability of the cluster.

HA heartbeat encryption and authentication are disabled by default. Enabling HA encryption and authentication could reduce cluster performance. Use the following CLI command to enable HA heartbeat encryption and authentication.

config system ha

set authentication enable

set encryption enable

end

HA authentication and encryption use AES-128 for encryption and SHA1 for authentication.

Cluster virtual MAC addresses

When a cluster is operating, the FGCP assigns virtual MAC addresses to each primary unit interface. HA uses virtual MAC addresses so that if a failover occurs, the new primary unit interfaces will have the same virtual MAC addresses and IP addresses as the failed primary unit. As a result, most network equipment would identify the new primary unit as the exact same device as the failed primary unit.

If the MAC addresses changed after a failover, the network would take longer to recover because all attached network devices would have to learn the new MAC addresses before they could communicate with the cluster.

If a cluster is operating in NAT/Route mode, the FGCP assigns a different virtual MAC address to each primary unit interface. VLAN subinterfaces are assigned the same virtual MAC address as the physical interface that the VLAN subinterface is added to. Redundant interfaces or 802.3ad aggregate interfaces are assigned the virtual MAC address of the first interface in the redundant or aggregate list.

If a cluster is operating in Transparent mode, the FGCP assigns a virtual MAC address for the primary unit management IP address. Since you can connect to the management IP address from any interface, all of the FortiGate interfaces appear to have the same virtual MAC address.

|

|

A MAC address conflict can occur if two clusters are operating on the same network. See Diagnosing packet loss with two FortiGate HA clusters in the same broadcast domain for more information. |

|

|

Subordinate unit MAC addresses do not change. You can verify this by connecting to the subordinate unit CLI and using the get hardware interface nic command to display the MAC addresses of each FortiGate interface. |

The MAC address of a reserved management interface is not changed to a virtual MAC address. Instead, the reserved management interface keeps its original MAC address.

When the new primary unit is selected after a failover, the primary unit sends gratuitous ARP packets to update the devices connected to the cluster interfaces (usually layer-2 switches) with the virtual MAC address. Gratuitous ARP packets configure connected network devices to associate the cluster virtual MAC addresses and cluster IP address with primary unit physical interfaces and with the layer-2 switch physical interfaces. Using gratuitous ARP packets (sometimes called GARP packets) in this way is sometimes described as training the network. The gratuitous ARP packets sent from the primary unit are intended to make sure that the layer-2 switch forwarding databases (FDBs) are updated as quickly as possible.

Sending gratuitous ARP packets is not required for routers and hosts on the network because the new primary unit will have the same MAC and IP addresses as the failed primary unit. However, since the new primary unit interfaces are connected to different switch interfaces than the failed primary unit, many network switches will update their FDBs more quickly after a failover if the new primary unit sends gratuitous ARP packets.
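To make the idea of a gratuitous ARP packet concrete, the following Python sketch builds the bytes of one such frame. It is an illustration only: the function name gratuitous_arp_frame and the sample addresses are hypothetical, and the sketch assumes the common request form (opcode 1) in which the sender and target IP addresses are both the announced address. This section does not state which form the FGCP actually sends.

```python
import socket
import struct


def gratuitous_arp_frame(mac: str, ip: str) -> bytes:
    """Build a broadcast gratuitous ARP request announcing `ip` at `mac`.

    Assumption: request form (opcode 1); the form used by the FGCP is not
    documented in this section.
    """
    hw = bytes.fromhex(mac.replace("-", "").replace(":", ""))
    if len(hw) != 6:
        raise ValueError("MAC address must be 6 bytes")
    addr = socket.inet_aton(ip)

    # Ethernet header: broadcast destination, source MAC, EtherType 0x0806 (ARP)
    ethernet = b"\xff" * 6 + hw + struct.pack("!H", 0x0806)

    # ARP header: hardware type 1 (Ethernet), protocol type 0x0800 (IPv4),
    # hardware length 6, protocol length 4, opcode 1 (request)
    arp = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 1)

    # Sender MAC and IP, then an all-zero target MAC and the same IP as target
    arp += hw + addr + b"\x00" * 6 + addr
    return ethernet + arp


# Hypothetical cluster virtual MAC address and cluster IP address
frame = gratuitous_arp_frame("00-09-0f-09-00-02", "192.168.1.99")
print(len(frame), frame.hex())
```

A switch that receives this broadcast learns the source MAC address on the receiving port, which is the FDB update that the text above describes.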

Changing how the primary unit sends gratuitous ARP packets after a failover

When a failover occurs it is important that the devices connected to the primary unit update their FDBs as quickly as possible to reestablish traffic forwarding.

Depending on your network configuration, you may be able to change the number of gratuitous ARP packets and the time interval between ARP packets to reduce the cluster failover time.

You can use the following command to change the number of gratuitous ARP packets that the primary unit sends after a failover. For example, enter the following command to send 20 gratuitous ARP packets:

config system ha

set arps 20

end

You can use this command to configure the primary unit to send from 1 to 60 ARP packets. Usually you would not change the default setting of 5. In some cases, however, you might want to reduce the number of gratuitous ARP packets. For example, if your cluster has a large number of VLAN interfaces and virtual domains, sending a higher number of gratuitous ARP packets may generate a lot of network traffic because gratuitous ARP packets are broadcast. As long as the cluster still fails over successfully, you could reduce the number of gratuitous ARP packets that are sent to reduce the amount of traffic produced after a failover.

If failover is taking longer than expected, you may be able to reduce the failover time by increasing the number of gratuitous ARP packets sent.

You can also use the following command to change the time interval in seconds between gratuitous ARP packets. For example, enter the following command to change the time between ARP packets to 3 seconds:

config system ha

set arps-interval 3

end

The time interval can be in the range of 1 to 20 seconds. The default is 8 seconds between gratuitous ARP packets. Normally you would not need to change the time interval. However, you could decrease the interval to send more packets in less time if your cluster takes a long time to fail over.

There may also be reasons to set the interval higher. For example, if your cluster has a large number of VLAN interfaces and virtual domains, sending gratuitous ARP packets may generate a lot of network traffic because gratuitous ARP packets are broadcast. As long as the cluster still fails over successfully, you could increase the interval to reduce the amount of traffic produced after a failover.
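The two settings above interact, so it can help to check a planned combination before entering it. The following Python sketch validates values against the ranges given in this section and renders the matching CLI commands. The helper names are hypothetical, and the burst duration estimate assumes that the first packet is sent immediately and the rest are evenly spaced, which this section does not confirm.

```python
def ha_garp_settings(arps: int = 5, arps_interval: int = 8) -> str:
    """Return the config system ha commands for the given GARP settings."""
    if not 1 <= arps <= 60:
        raise ValueError("arps must be in the range 1 to 60")
    if not 1 <= arps_interval <= 20:
        raise ValueError("arps-interval must be in the range 1 to 20 seconds")
    return "\n".join([
        "config system ha",
        f"set arps {arps}",
        f"set arps-interval {arps_interval}",
        "end",
    ])


def garp_burst_seconds(arps: int, arps_interval: int) -> int:
    """Estimate the seconds between the first and last packet.

    Assumption: packets are evenly spaced and the first is sent at time zero.
    """
    return (arps - 1) * arps_interval


print(ha_garp_settings(arps=20, arps_interval=3))
print(garp_burst_seconds(5, 8))   # default settings
print(garp_burst_seconds(20, 3))  # more packets, shorter interval
```

Under that assumption, raising the packet count while lowering the interval changes how long the burst lasts as well as how much broadcast traffic it produces, so both values are worth considering together.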

For more information about gratuitous ARP packets see RFC 826 and RFC 3927.

Disabling gratuitous ARP packets after a failover

You can use the following command to turn off sending gratuitous ARP packets after a failover:

config system ha

set gratuitous-arps disable

end

Sending gratuitous ARP packets is turned on by default.

In most cases you would want to send gratuitous ARP packets because it is a reliable way for the cluster to notify the network to send traffic to the new primary unit. However, in some cases, sending gratuitous ARP packets may be less optimal. For example, if you have a cluster of FortiGate units in Transparent mode, after a failover the new primary unit will send gratuitous ARP packets to all of the addresses in its Forwarding Database (FDB). If the FDB has a large number of addresses, it may take extra time to send all the packets and the sudden burst of traffic could disrupt the network.

If you choose to disable sending gratuitous ARP packets you must first enable the link-failed-signal setting. The cluster must have some way of informing attached network devices that a failover has occurred.

For more information about the link-failed-signal setting, see Updating MAC forwarding tables when a link failover occurs.

How the virtual MAC address is determined

The virtual MAC address is determined based on the following formula:

00-09-0f-09-<group-id_hex>-(<vcluster_integer> + <idx>)

where

<group-id_hex> is the HA Group ID for the cluster converted to hexadecimal. The following table lists the hexadecimal value for a selection of group IDs.

HA group ID in integer and hexadecimal format

| Integer Group ID | Hexadecimal Group ID |
|---|---|
| 0 | 00 |
| 1 | 01 |
| 2 | 02 |
| 3 | 03 |
| 4 | 04 |
| ... | ... |
| 10 | 0a |
| 11 | 0b |
| ... | ... |
| 63 | 3f |
| ... | ... |
| 255 | ff |

<vcluster_integer> is 0 for virtual cluster 1 and 20 for virtual cluster 2. If virtual domains are not enabled, HA sets the virtual cluster to 1 and by default all interfaces are in the root virtual domain. Including virtual cluster and virtual domain factors in the virtual MAC address formula means that the same formula can be used whether or not virtual domains and virtual clustering are enabled.

<idx> is the index number of the interface. Interfaces are numbered from 0 to x (where x is one less than the number of interfaces), according to their hash map order. See Interface index and display order. The first interface has an index of 0, the second interface in the list has an index of 1, and so on.

Only the <idx> part of the virtual MAC address is different for each interface. The <vcluster_integer> would be different for different interfaces if multiple VDOMs have been added.

Between FortiOS releases interface indexing may change so the virtual MAC addresses assigned to individual FortiGate interfaces may also change.

Example virtual MAC addresses

An HA cluster with HA group ID unchanged (default=0) and virtual domains not enabled would have the following virtual MAC addresses for interfaces port1 to port12:

- port1 virtual MAC: 00-09-0f-09-00-00

- port10 virtual MAC: 00-09-0f-09-00-01

- port2 virtual MAC: 00-09-0f-09-00-02

- port3 virtual MAC: 00-09-0f-09-00-03

- port4 virtual MAC: 00-09-0f-09-00-04

- port5 virtual MAC: 00-09-0f-09-00-05

- port6 virtual MAC: 00-09-0f-09-00-06

- port7 virtual MAC: 00-09-0f-09-00-07

- port8 virtual MAC: 00-09-0f-09-00-08

- port9 virtual MAC: 00-09-0f-09-00-09

- port11 virtual MAC: 00-09-0f-09-00-0a

- port12 virtual MAC: 00-09-0f-09-00-0b

If the group ID is changed to 34 these virtual MAC addresses change to:

- port1 virtual MAC: 00-09-0f-09-22-00

- port10 virtual MAC: 00-09-0f-09-22-01

- port2 virtual MAC: 00-09-0f-09-22-02

- port3 virtual MAC: 00-09-0f-09-22-03

- port4 virtual MAC: 00-09-0f-09-22-04

- port5 virtual MAC: 00-09-0f-09-22-05

- port6 virtual MAC: 00-09-0f-09-22-06

- port7 virtual MAC: 00-09-0f-09-22-07

- port8 virtual MAC: 00-09-0f-09-22-08

- port9 virtual MAC: 00-09-0f-09-22-09

- port11 virtual MAC: 00-09-0f-09-22-0a

- port12 virtual MAC: 00-09-0f-09-22-0b

A cluster with virtual domains enabled, where the HA group ID has been changed to 23, port5 and port6 are in the root virtual domain (which is in virtual cluster 1), and port7 and port8 are in the vdom_1 virtual domain (which is in virtual cluster 2), would have the following virtual MAC addresses (group ID 23 is 17 in hexadecimal):

- port5 interface virtual MAC: 00-09-0f-09-17-05

- port6 interface virtual MAC: 00-09-0f-09-17-06

- port7 interface virtual MAC: 00-09-0f-09-17-27

- port8 interface virtual MAC: 00-09-0f-09-17-28
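The virtual MAC address formula can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the FGCP implementation: the function name virtual_mac is hypothetical, the interface index must be supplied by the caller because it depends on the FortiOS hash map order, and the value 20 for virtual cluster 2 is assumed to be hexadecimal (0x20) because the base is not stated.

```python
VMAC_PREFIX = "00-09-0f-09"

# Assumption: the "20" given for virtual cluster 2 is read as hexadecimal
# (0x20). The formula text does not state the base.
VCLUSTER_OFFSET = {1: 0x00, 2: 0x20}


def virtual_mac(group_id: int, idx: int, vcluster: int = 1) -> str:
    """Return the virtual MAC for an interface index in a given HA group."""
    if not 0 <= group_id <= 255:
        raise ValueError("group ID must be in the range 0 to 255")
    if vcluster not in VCLUSTER_OFFSET:
        raise ValueError("virtual cluster must be 1 or 2")
    last_octet = VCLUSTER_OFFSET[vcluster] + idx
    if idx < 0 or last_octet > 255:
        raise ValueError("interface index does not fit in one octet")
    # Both the group ID and the last octet are written as two hex digits
    return f"{VMAC_PREFIX}-{group_id:02x}-{last_octet:02x}"


print(virtual_mac(0, 2))    # index 2 with the default group ID: 00-09-0f-09-00-02
print(virtual_mac(34, 10))  # index 10 with group ID 34: 00-09-0f-09-22-0a
```

Because only the group ID octet distinguishes one cluster from another, two clusters that keep the same group ID on one broadcast domain produce identical addresses for interfaces with the same index.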

Displaying the virtual MAC address

Every FortiGate unit physical interface has two MAC addresses: the current hardware address and the permanent hardware address. The permanent hardware address is the actual MAC address of the interface hardware and cannot be changed. The current hardware address is the address seen by the network and can be changed. For a FortiGate unit not operating in HA, you can use the following command to change the current hardware address of the port1 interface:

config system interface

edit port1

set macaddr <mac_address>

next

end

For an operating cluster, the current hardware address of each cluster unit interface is changed to the HA virtual MAC address by the FGCP. The macaddr option is not available for a functioning cluster. You cannot change an interface MAC address and you cannot view MAC addresses from the system interface CLI command.

You can use the get hardware nic <interface_name_str> command to display both MAC addresses for any FortiGate interface. This command displays hardware information for the specified interface. Depending on their hardware configuration, this command may display different information for different interfaces. You can use this command to display the current hardware address as Current_HWaddr and the permanent hardware address as Permanent_HWaddr. For some interfaces the current hardware address is displayed as MAC. The command displays a great deal of information about the interface so you may have to scroll the output to find the hardware addresses.

You can also use the diagnose hardware deviceinfo nic <interface_str> command to display both MAC addresses for any FortiGate interface.

Before HA configuration, the current and permanent hardware addresses are the same. For example, for one of the units in Cluster_1:

FGT60B3907503171 # get hardware nic internal

.

.

.

MAC: 02:09:0f:78:18:c9

Permanent_HWaddr: 02:09:0f:78:18:c9

.

.

.

During HA operation, the current hardware address becomes the HA virtual MAC address. For example, for the units in Cluster_1:

FGT60B3907503171 # get hardware nic internal

.

.

.

MAC: 00:09:0f:09:00:02

Permanent_HWaddr: 02:09:0f:78:18:c9

.

.

.

The following command output for Cluster_2 shows the same current hardware address for port1 as for the internal interface of Cluster_1, indicating a MAC address conflict.

FG300A2904500238 # get hardware nic port1

.

.

.

MAC: 00:09:0f:09:00:02

Permanent_HWaddr: 00:09:0F:85:40:FD

.

.

.

Diagnosing packet loss with two FortiGate HA clusters in the same broadcast domain

A network may experience packet loss when two FortiGate HA clusters have been deployed in the same broadcast domain. Deploying two HA clusters in the same broadcast domain can result in packet loss because of MAC address conflicts. The packet loss can be diagnosed by pinging from one cluster to the other or by pinging both of the clusters from a device within the broadcast domain. You can resolve the MAC address conflict by changing the HA Group ID configuration of the two clusters. The HA Group ID is sometimes also called the Cluster ID.

This section describes a topology that can result in packet loss, how to determine if packets are being lost, and how to correct the problem by changing the HA Group ID.

Packet loss on a network can also be caused by IP address conflicts. Finding and fixing IP address conflicts can be difficult. However, if you are experiencing packet loss and your network contains two FortiGate HA clusters, you can use the information in this section to eliminate one possible source of packet loss.

Changing the HA group ID to avoid MAC address conflicts

Change the Group ID to change the virtual MAC address of all cluster interfaces. You can change the Group ID from the FortiGate CLI using the following command:

config system ha

set group-id <id_integer>

end

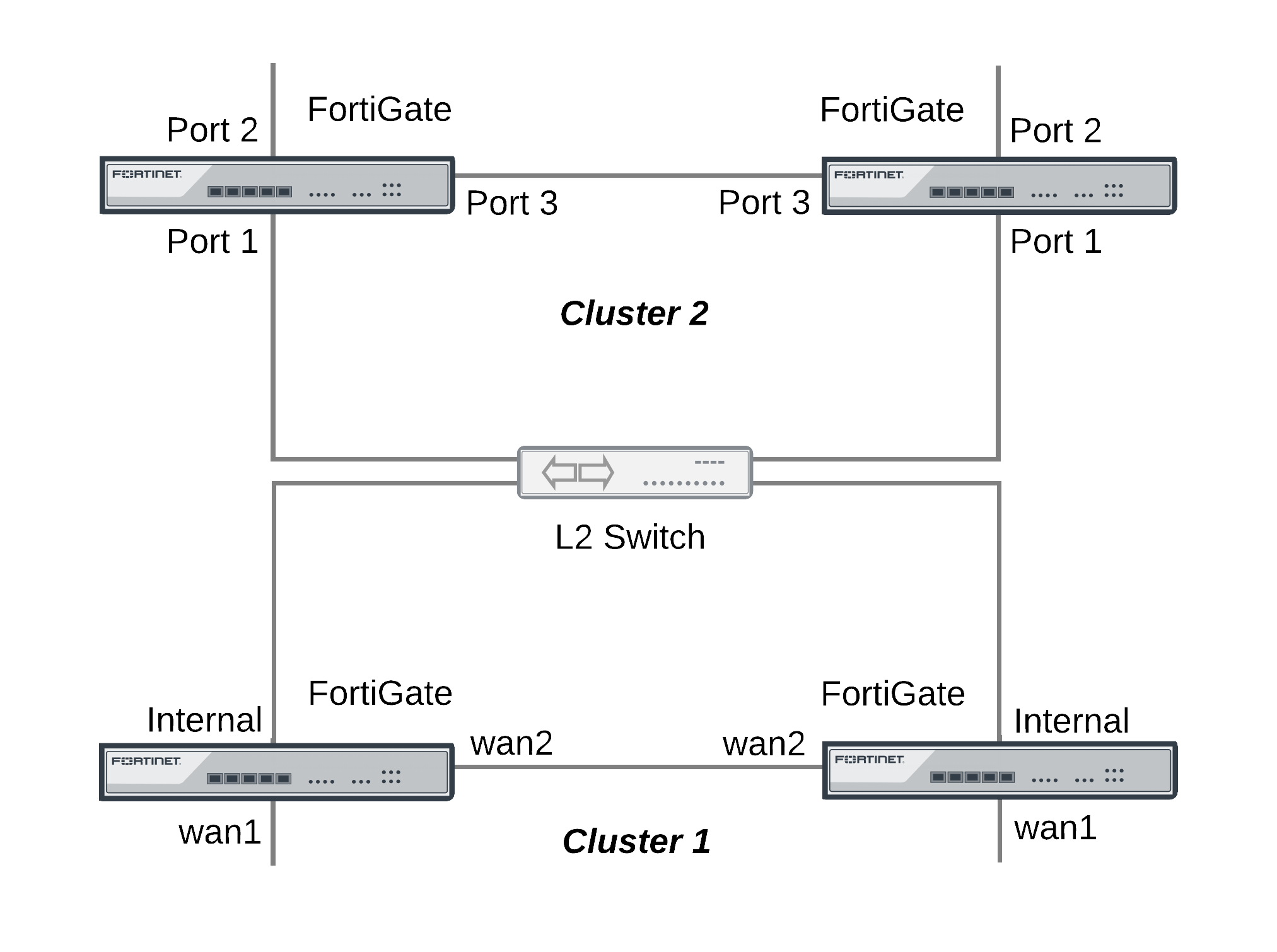

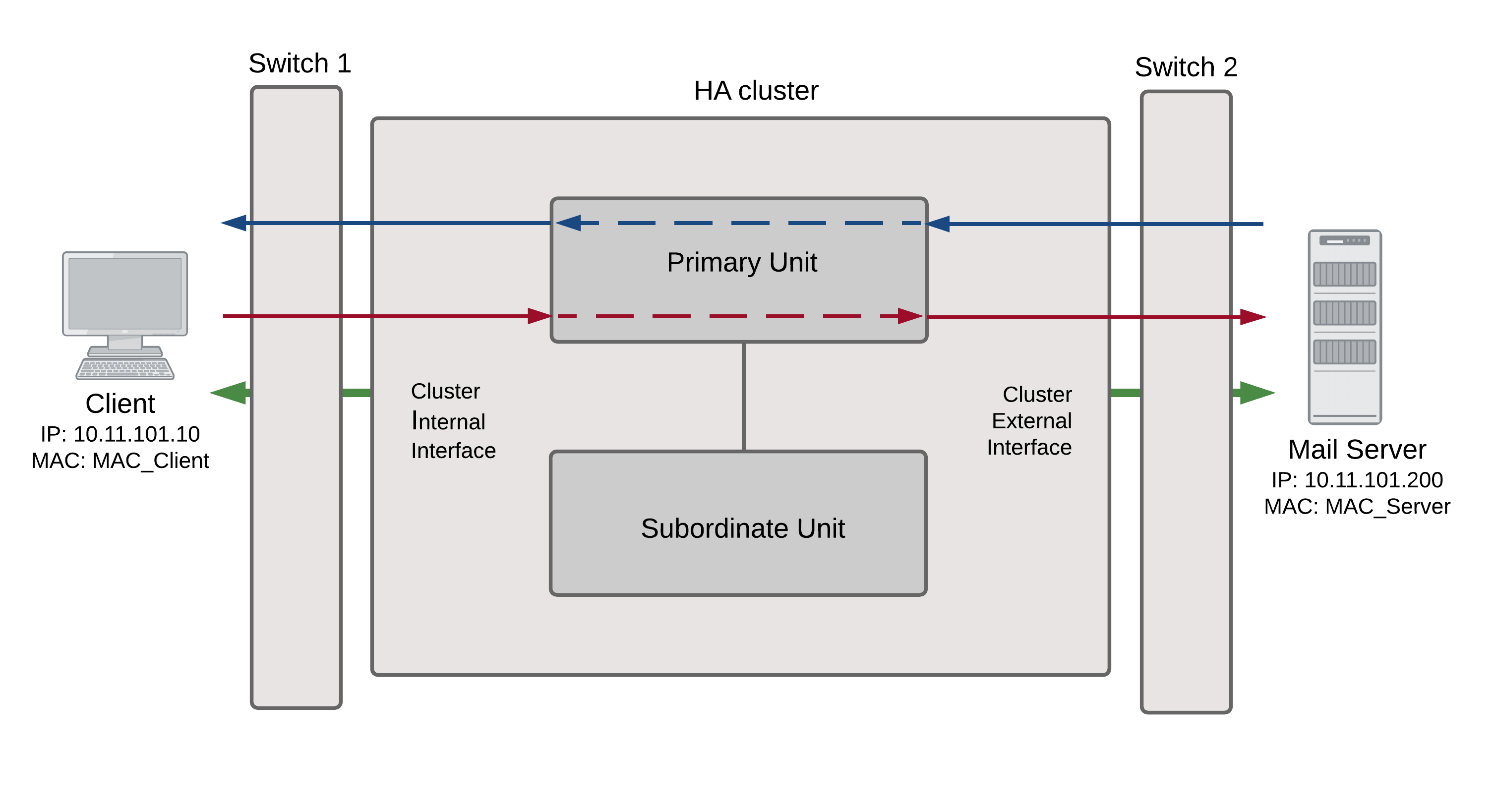

Example topology

The topology below shows two clusters. The Cluster_1 internal interfaces and the Cluster_2 port1 interfaces are both connected to the same broadcast domain. In this topology the broadcast domain could be an internal network. Both clusters could also be connected to the Internet or to different networks.

Example HA topology with possible MAC address conflicts

Ping testing for packet loss

If the network is experiencing packet loss, it is possible that you will not notice a problem unless you are constantly pinging both HA clusters. During normal operation of the network you also might not notice packet loss because the loss rate may not be severe enough to time out TCP sessions. Also, many common types of TCP traffic, such as web browsing, may not be greatly affected by packet loss. However, packet loss can have a significant effect on real time protocols that deliver audio and video data.

To test for packet loss you can set up two constant ping sessions, one to each cluster. If packet loss is occurring the two ping sessions should show alternating replies and timeouts from each cluster.

| Cluster_1 | Cluster_2 |
|---|---|
| reply | timeout |
| reply | timeout |
| reply | timeout |
| timeout | reply |
| timeout | reply |
| reply | timeout |
| reply | timeout |
| timeout | reply |
| timeout | reply |
| timeout | reply |
| timeout | reply |
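One way to quantify the alternating pattern is to count how often exactly one of the two clusters replies in the same sampling interval. The following Python sketch does this for two recorded ping sessions. The function name and the reply and timeout labels are hypothetical, and a high ratio is only a hint that a MAC address conflict may be present, not proof of one.

```python
def complementary_loss_ratio(cluster_1, cluster_2):
    """Return the fraction of samples where exactly one cluster replied.

    Each argument is a sequence of "reply" or "timeout" strings recorded
    at the same moments for the two ping sessions.
    """
    pairs = list(zip(cluster_1, cluster_2))
    if not pairs:
        return 0.0
    exclusive = sum(
        1 for a, b in pairs if (a == "reply") != (b == "reply")
    )
    return exclusive / len(pairs)


# The eleven samples from the ping test table
cluster_1 = ["reply"] * 3 + ["timeout"] * 2 + ["reply"] * 2 + ["timeout"] * 4
cluster_2 = ["timeout"] * 3 + ["reply"] * 2 + ["timeout"] * 2 + ["reply"] * 4

print(complementary_loss_ratio(cluster_1, cluster_2))  # 1.0
```

A ratio near 1.0 means that the clusters almost never reply at the same time, which matches the behavior described for two clusters contending for the same virtual MAC address.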

Viewing MAC address conflicts on attached switches

If two HA clusters with the same virtual MAC address are connected to the same broadcast domain (L2 switch or hub), the MAC address will conflict and bounce between the two clusters. This example Cisco switch MAC address table shows the MAC address flapping between different interfaces (1/0/1 and 1/0/4).

1 0009.0f09.0002 DYNAMIC Gi1/0/1

1 0009.0f09.0002 DYNAMIC Gi1/0/4
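If you capture the switch MAC address table several times, a short script can point out addresses that were learned on more than one port. The following Python sketch assumes each captured line has the four whitespace-separated fields shown above (VLAN, MAC address, entry type, port). The function name is hypothetical and other switch output formats would need a different parser.

```python
from collections import defaultdict


def flapping_macs(table_lines):
    """Return {(vlan, mac): [ports]} for MACs seen on more than one port."""
    ports = defaultdict(set)
    for line in table_lines:
        fields = line.split()
        if len(fields) != 4:
            continue  # skip headers, blank lines, and anything unexpected
        vlan, mac, _entry_type, port = fields
        ports[(vlan, mac.lower())].add(port)
    return {
        key: sorted(seen) for key, seen in ports.items() if len(seen) > 1
    }


# Lines collected from repeated displays of the MAC address table
captured = [
    "1 0009.0f09.0002 DYNAMIC Gi1/0/1",
    "1 0009.0f09.0002 DYNAMIC Gi1/0/4",
]
print(flapping_macs(captured))
```

An address in the 0009.0f09 range that appears on ports leading to two different clusters is a strong candidate for the group ID change described earlier.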

Synchronizing the configuration

The FGCP uses a combination of incremental and periodic synchronization to make sure that the configuration of all cluster units is synchronized to that of the primary unit.

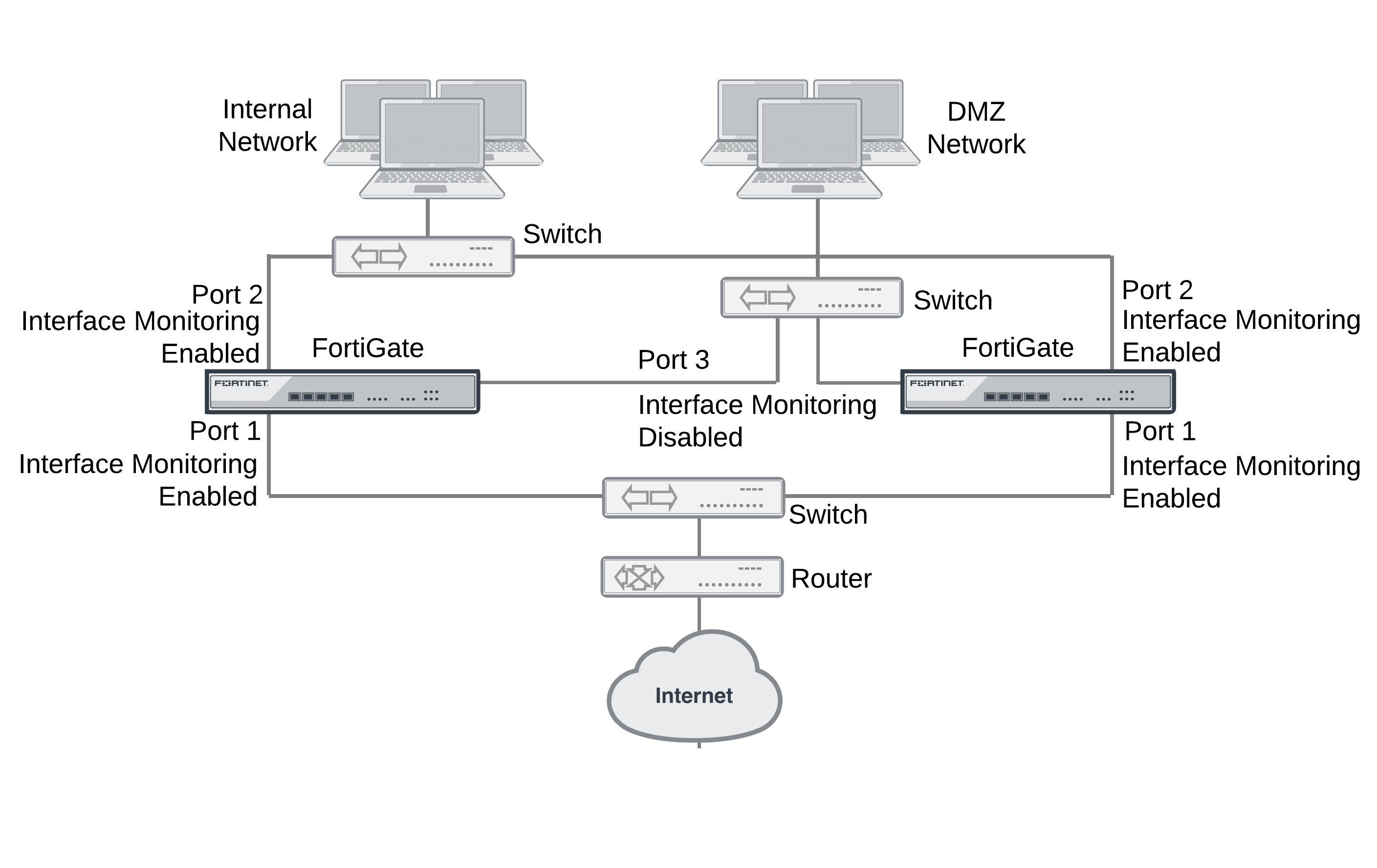

The following settings are not synchronized between cluster units:

- HA override.

- HA device priority.

- The virtual cluster priority.

- The FortiGate unit host name.

- The HA priority setting for a ping server (or dead gateway detection) configuration.

- The system interface settings of the HA reserved management interface.

- The HA default route for the reserved management interface, set using the ha-mgmt-interface-gateway option of the config system ha command.

The primary unit synchronizes all other configuration settings, including the other HA configuration settings.

Disabling automatic configuration synchronization

In some cases you may want to use the following command to disable automatic synchronization of the primary unit configuration to all cluster units.

config system ha

set sync-config disable

end

When this option is disabled the cluster no longer synchronizes configuration changes. If a device failure occurs, the new primary unit may not have the same configuration as the failed primary unit. As a result, the new primary unit may process sessions differently or may not function on the network in the same way.

In most cases you should not disable automatic configuration synchronization. However, if you have disabled this feature you can use the execute ha synchronize command to manually synchronize a subordinate unit’s configuration to that of the primary unit.

You must enter execute ha synchronize commands from the subordinate unit that you want to synchronize with the primary unit. Use the execute ha manage command to access a subordinate unit CLI.

For example, to access the first subordinate unit and force a synchronization at any time, even if automatic synchronization is disabled, enter:

execute ha manage 0

execute ha synchronize start

You can use the following command to stop a synchronization that is in progress.

execute ha synchronize stop

Incremental synchronization

When you log into the cluster web-based manager or CLI to make configuration changes, you are actually logging into the primary unit. All of your configuration changes are first made to the primary unit. Incremental synchronization then immediately synchronizes these changes to all of the subordinate units.

When you log into a subordinate unit CLI (for example using execute ha manage) all of the configuration changes that you make to the subordinate unit are also immediately synchronized to all cluster units, including the primary unit, using the same process.

Incremental synchronization also synchronizes other dynamic configuration information such as the DHCP server address lease database, routing table updates, IPsec SAs, MAC address tables, and so on. See An introduction to the FGCP for more information about DHCP server address lease synchronization and Synchronizing kernel routing tables for information about routing table updates.

Whenever a change is made to a cluster unit configuration, incremental synchronization sends the same configuration change to all other cluster units over the HA heartbeat link. An HA synchronization process running on each cluster unit receives the configuration change and applies it to the cluster unit. The HA synchronization process makes the configuration change by entering a CLI command that appears to be entered by the administrator who made the configuration change in the first place.

Synchronization takes place silently, and no log messages are recorded about the synchronization activity. However, log messages can be recorded by the cluster units when the synchronization process enters CLI commands. You can see these log messages on the subordinate units if you enable event logging and set the minimum severity level to Information and then check the event log messages written by the cluster units when you make a configuration change.

You can also see these log messages on the primary unit if you make configuration changes from a subordinate unit.

Periodic synchronization

Incremental synchronization makes sure that as an administrator makes configuration changes, the configurations of all cluster units remain the same. However, a number of factors could cause one or more cluster units to go out of sync with the primary unit. For example, if you add a new unit to a functioning cluster, the configuration of this new unit will not match the configuration of the other cluster units. It is not practical to use incremental synchronization to change the configuration of the new unit.

Periodic synchronization is a mechanism that looks for synchronization problems and fixes them. Every minute the cluster compares the configuration file checksum of the primary unit with the configuration file checksums of each of the subordinate units. If all subordinate unit checksums are the same as the primary unit checksum, all cluster units are considered synchronized.

If one or more of the subordinate unit checksums is not the same as the primary unit checksum, the subordinate unit configuration is considered out of sync with the primary unit. The checksum of the out of sync subordinate unit is checked again every 15 seconds. This re-checking occurs in case the configurations are out of sync because an incremental configuration sequence has not completed. If the checksums do not match after 5 checks the subordinate unit that is out of sync retrieves the configuration from the primary unit. The subordinate unit then reloads its configuration and resumes operating as a subordinate unit with the same configuration as the primary unit.

The configuration of the subordinate unit is reset in this way because when a subordinate unit configuration gets out of sync with the primary unit configuration there is no efficient way to determine what the configuration differences are and to correct them. Resetting the subordinate unit configuration becomes the most efficient way to resynchronize the subordinate unit.
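The re-check logic described above can be sketched as a small decision function. This is a simplified model, not the FGCP implementation: the function name and return labels are hypothetical, and the input is a list of results from successive checksum comparisons made 15 seconds apart.

```python
RECHECK_INTERVAL_SECONDS = 15  # interval between re-checks, from the text
MAX_CHECKS = 5                 # mismatches tolerated before a full resync


def recheck_outcome(matches):
    """Decide what happens after a series of checksum re-checks.

    `matches` is a list of booleans, one per re-check, where True means the
    subordinate unit checksum matched the primary unit checksum.
    Returns (state, checks_used).
    """
    for sequence, matched in enumerate(matches[:MAX_CHECKS]):
        if matched:
            # An incremental update completed in time; nothing else to do
            return "in sync", sequence
    if len(matches) >= MAX_CHECKS:
        # Five mismatches in a row: retrieve the primary unit configuration
        return "resynchronize", MAX_CHECKS
    return "still checking", len(matches)


print(recheck_outcome([False, False, True]))
print(recheck_outcome([False] * 5))
```

In this model a unit that stays out of sync retrieves the full configuration roughly 5 x 15 = 75 seconds after the first mismatch is noticed, assuming the checks run exactly on schedule.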

Synchronization requires that all cluster units run the same FortiOS firmware build. If some cluster units are running different firmware builds, then unstable cluster operation may occur and the cluster units may not be able to synchronize correctly.

Re-installing the firmware build running on the primary unit forces the primary unit to upgrade all cluster units to the same firmware build.

Console messages when configuration synchronization succeeds

When a cluster first forms, or when a new unit is added to a cluster as a subordinate unit, the following messages appear on the CLI console to indicate that the unit joined the cluster and had its configuration synchronized with the primary unit.

slave's configuration is not in sync with master's, sequence:0

slave's configuration is not in sync with master's, sequence:1

slave's configuration is not in sync with master's, sequence:2

slave's configuration is not in sync with master's, sequence:3

slave's configuration is not in sync with master's, sequence:4

slave starts to sync with master

logout all admin users

slave succeeded to sync with master

Console messages when configuration synchronization fails

If you connect to the console of a subordinate unit that is out of synchronization with the primary unit, messages similar to the following are displayed.

slave is not in sync with master, sequence:0. (type 0x3)

slave is not in sync with master, sequence:1. (type 0x3)

slave is not in sync with master, sequence:2. (type 0x3)

slave is not in sync with master, sequence:3. (type 0x3)

slave is not in sync with master, sequence:4. (type 0x3)

global compared not matched

If synchronization problems occur the console message sequence may be repeated over and over again. The messages all include a type value (in the example type 0x3). The type value can help Fortinet Support diagnose the synchronization problem.

HA out of sync object messages and the configuration objects that they reference

| Out of Sync Message | Configuration Object |
|---|---|
| HA_SYNC_SETTING_CONFIGURATION = 0x03 | /data/config |
| HA_SYNC_SETTING_AV = 0x10 | |
| HA_SYNC_SETTING_VIR_DB = 0x11 | /etc/vir |
| HA_SYNC_SETTING_SHARED_LIB = 0x12 | /data/lib/libav.so |
| HA_SYNC_SETTING_SCAN_UNIT = 0x13 | /bin/scanunitd |
| HA_SYNC_SETTING_IMAP_PRXY = 0x14 | /bin/imapd |
| HA_SYNC_SETTING_SMTP_PRXY = 0x15 | /bin/smtp |
| HA_SYNC_SETTING_POP3_PRXY = 0x16 | /bin/pop3 |
| HA_SYNC_SETTING_HTTP_PRXY = 0x17 | /bin/thttp |
| HA_SYNC_SETTING_FTP_PRXY = 0x18 | /bin/ftpd |
| HA_SYNC_SETTING_FCNI = 0x19 | /etc/fcni.dat |
| HA_SYNC_SETTING_FDNI = 0x1a | /etc/fdnservers.dat |
| HA_SYNC_SETTING_FSCI = 0x1b | /etc/sci.dat |
| HA_SYNC_SETTING_FSAE = 0x1c | /etc/fsae_adgrp.cache |
| HA_SYNC_SETTING_IDS = 0x20 | /etc/ids.rules |
| HA_SYNC_SETTING_IDSUSER_RULES = 0x21 | /etc/idsuser.rules |
| HA_SYNC_SETTING_IDSCUSTOM = 0x22 | |
| HA_SYNC_SETTING_IDS_MONITOR = 0x23 | /bin/ipsmonitor |
| HA_SYNC_SETTING_IDS_SENSOR = 0x24 | /bin/ipsengine |
| HA_SYNC_SETTING_NIDS_LIB = 0x25 | /data/lib/libips.so |
| HA_SYNC_SETTING_WEBLISTS = 0x30 | |
| HA_SYNC_SETTING_CONTENTFILTER = 0x31 | /data/cmdb/webfilter.bword |
| HA_SYNC_SETTING_URLFILTER = 0x32 | /data/cmdb/webfilter.urlfilter |
| HA_SYNC_SETTING_FTGD_OVRD = 0x33 | /data/cmdb/webfilter.fgtd-ovrd |
| HA_SYNC_SETTING_FTGD_LRATING = 0x34 | /data/cmdb/webfilter.fgtd-ovrd |
| HA_SYNC_SETTING_EMAILLISTS = 0x40 | |
| HA_SYNC_SETTING_EMAILCONTENT = 0x41 | /data/cmdb/spamfilter.bword |
| HA_SYNC_SETTING_EMAILBWLIST = 0x42 | /data/cmdb/spamfilter.emailbwl |
| HA_SYNC_SETTING_IPBWL = 0x43 | /data/cmdb/spamfilter.ipbwl |
| HA_SYNC_SETTING_MHEADER = 0x44 | /data/cmdb/spamfilter.mheader |
| HA_SYNC_SETTING_RBL = 0x45 | /data/cmdb/spamfilter.rbl |
| HA_SYNC_SETTING_CERT_CONF = 0x50 | /etc/cert/cert.conf |
| HA_SYNC_SETTING_CERT_CA = 0x51 | /etc/cert/ca |
| HA_SYNC_SETTING_CERT_LOCAL = 0x52 | /etc/cert/local |
| HA_SYNC_SETTING_CERT_CRL = 0x53 | /etc/cert/crl |
| HA_SYNC_SETTING_DB_VER = 0x55 | |
| HA_GET_DETAIL_CSUM = 0x71 | |
| HA_SYNC_CC_SIG = 0x75 | /etc/cc_sig.dat |
| HA_SYNC_CC_OP = 0x76 | /etc/cc_op |
| HA_SYNC_CC_MAIN = 0x77 | /etc/cc_main |
| HA_SYNC_FTGD_CAT_LIST = 0x7a | /migadmin/webfilter/ublock/ftgd/data/ |
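When many console messages have been captured, looking up each type value by hand is tedious. The following Python sketch extracts the type value from a console message and maps it to a table entry. The function name is hypothetical, the dictionary holds only a few rows from the table as a sample, and the regular expression assumes the message format shown in the console examples earlier in this section.

```python
import re

# A sample of rows from the out of sync object table; extend as needed
SYNC_OBJECTS = {
    0x03: ("HA_SYNC_SETTING_CONFIGURATION", "/data/config"),
    0x11: ("HA_SYNC_SETTING_VIR_DB", "/etc/vir"),
    0x20: ("HA_SYNC_SETTING_IDS", "/etc/ids.rules"),
    0x32: ("HA_SYNC_SETTING_URLFILTER", "/data/cmdb/webfilter.urlfilter"),
    0x52: ("HA_SYNC_SETTING_CERT_LOCAL", "/etc/cert/local"),
}

TYPE_PATTERN = re.compile(r"\(type (0x[0-9a-fA-F]+)\)")


def sync_object_for(message):
    """Return (name, object) for a console message, or None if unknown."""
    match = TYPE_PATTERN.search(message)
    if match is None:
        return None
    # int(..., 16) accepts the 0x prefix, so "0x3" and "0x03" are equivalent
    return SYNC_OBJECTS.get(int(match.group(1), 16))


print(sync_object_for("slave is not in sync with master, sequence:0. (type 0x3)"))
```

Converting the captured value to an integer before the lookup avoids mismatches between the short form in console messages (0x3) and the padded form in the table (0x03).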

Comparing checksums of cluster units

You can use the diagnose sys ha showcsum command to compare the configuration checksums of all cluster units. The output of this command shows checksums labelled global and all as well as checksums for each of the VDOMs including the root VDOM. The get system ha-nonsync-csum command can be used to display similar information; however, this command is intended to be used by FortiManager.

The primary unit and subordinate unit checksums should be the same. If they are not you can use the execute ha synchronize command to force a synchronization.

The following command output is for the primary unit of a cluster that does not have multiple VDOMs enabled:

diagnose sys ha showcsum

is_manage_master()=1, is_root_master()=1

debugzone

global: a0 7f a7 ff ac 00 d5 b6 82 37 cc 13 3e 0b 9b 77

root: 43 72 47 68 7b da 81 17 c8 f5 10 dd fd 6b e9 57

all: c5 90 ed 22 24 3e 96 06 44 35 b6 63 7c 84 88 d5

checksum

global: a0 7f a7 ff ac 00 d5 b6 82 37 cc 13 3e 0b 9b 77

root: 43 72 47 68 7b da 81 17 c8 f5 10 dd fd 6b e9 57

all: c5 90 ed 22 24 3e 96 06 44 35 b6 63 7c 84 88 d5

The following command output is for a subordinate unit of the same cluster:

diagnose sys ha showcsum

is_manage_master()=0, is_root_master()=0

debugzone

global: a0 7f a7 ff ac 00 d5 b6 82 37 cc 13 3e 0b 9b 77

root: 43 72 47 68 7b da 81 17 c8 f5 10 dd fd 6b e9 57

all: c5 90 ed 22 24 3e 96 06 44 35 b6 63 7c 84 88 d5

checksum

global: a0 7f a7 ff ac 00 d5 b6 82 37 cc 13 3e 0b 9b 77

root: 43 72 47 68 7b da 81 17 c8 f5 10 dd fd 6b e9 57

all: c5 90 ed 22 24 3e 96 06 44 35 b6 63 7c 84 88 d5

The following example shows using this command for the primary unit of a cluster with multiple VDOMs. Two VDOMs have been added named test and Eng_vdm.

From the primary unit:

config global

diagnose sys ha showcsum

is_manage_master()=1, is_root_master()=1

debugzone

global: 65 75 88 97 2d 58 1b bf 38 d3 3d 52 5b 0e 30 a9

test: a5 16 34 8c 7a 46 d6 a4 1e 1f c8 64 ec 1b 53 fe

root: 3c 12 45 98 69 f2 d8 08 24 cf 02 ea 71 57 a7 01

Eng_vdm: 64 51 7c 58 97 79 b1 b3 b3 ed 5c ec cd 07 74 09

all: 30 68 77 82 a1 5d 13 99 d1 42 a3 2f 9f b9 15 53

checksum

global: 65 75 88 97 2d 58 1b bf 38 d3 3d 52 5b 0e 30 a9

test: a5 16 34 8c 7a 46 d6 a4 1e 1f c8 64 ec 1b 53 fe

root: 3c 12 45 98 69 f2 d8 08 24 cf 02 ea 71 57 a7 01

Eng_vdm: 64 51 7c 58 97 79 b1 b3 b3 ed 5c ec cd 07 74 09

all: 30 68 77 82 a1 5d 13 99 d1 42 a3 2f 9f b9 15 53

From the subordinate unit:

config global

diagnose sys ha showcsum

is_manage_master()=0, is_root_master()=0

debugzone

global: 65 75 88 97 2d 58 1b bf 38 d3 3d 52 5b 0e 30 a9

test: a5 16 34 8c 7a 46 d6 a4 1e 1f c8 64 ec 1b 53 fe

root: 3c 12 45 98 69 f2 d8 08 24 cf 02 ea 71 57 a7 01

Eng_vdm: 64 51 7c 58 97 79 b1 b3 b3 ed 5c ec cd 07 74 09

all: 30 68 77 82 a1 5d 13 99 d1 42 a3 2f 9f b9 15 53

checksum

global: 65 75 88 97 2d 58 1b bf 38 d3 3d 52 5b 0e 30 a9

test: a5 16 34 8c 7a 46 d6 a4 1e 1f c8 64 ec 1b 53 fe

root: 3c 12 45 98 69 f2 d8 08 24 cf 02 ea 71 57 a7 01

Eng_vdm: 64 51 7c 58 97 79 b1 b3 b3 ed 5c ec cd 07 74 09

all: 30 68 77 82 a1 5d 13 99 d1 42 a3 2f 9f b9 15 53
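Comparing long checksum lines by eye is error prone, so a short script can help. The following Python sketch parses showcsum output that has been saved as text and lists the entries that differ between two units. The function names are hypothetical, and the parser assumes the layout shown in the examples above: a debugzone or checksum heading followed by name and value lines.

```python
def parse_showcsum(output):
    """Parse saved showcsum output into {section: {name: checksum}}."""
    sections = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if line in ("debugzone", "checksum"):
            current = sections.setdefault(line, {})
        elif current is not None and ":" in line:
            name, _, value = line.partition(":")
            current[name.strip()] = value.strip()
    return sections


def mismatched(primary, subordinate):
    """Return (section, name) pairs whose checksums differ between units."""
    differences = []
    for section, entries in primary.items():
        other = subordinate.get(section, {})
        for name, value in entries.items():
            if other.get(name) != value:
                differences.append((section, name))
    return differences


primary_text = """is_manage_master()=1, is_root_master()=1
debugzone
global: a0 7f a7 ff
root: 43 72 47 68
checksum
global: a0 7f a7 ff
root: 43 72 47 68
"""
# Hypothetical subordinate output with a different root checksum
subordinate_text = primary_text.replace("root: 43 72 47 68", "root: 00 11 22 33")

print(mismatched(parse_showcsum(primary_text), parse_showcsum(subordinate_text)))
```

The checksums in the sample text are shortened for readability. Real output uses 16 bytes per line, which the parser treats the same way.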

How to diagnose HA out of sync messages

This section describes how to use the commands diagnose sys ha showcsum and diagnose debug to diagnose the cause of HA out of sync messages.

If HA synchronization is not successful, use the following procedures on each cluster unit to find the cause.

To determine why HA synchronization does not occur

1. Connect to each cluster unit CLI by connecting to the console port.

2. Enter the following commands to enable debugging and display HA out of sync messages.

diagnose debug enable

diagnose debug console timestamp enable

diagnose debug application hatalk -1

diagnose debug application hasync -1

Collect the console output and compare the out of sync messages with the information in the table HA out of sync object messages and the configuration objects that they reference.

3. Enter the following commands to turn off debugging.

diagnose debug disable

diagnose debug reset

To determine what part of the configuration is causing the problem

If the previous procedure displays messages that include sync object 0x03 (for example, HA_SYNC_SETTING_CONFIGURATION = 0x03), there is a synchronization problem with the configuration. Use the following steps to determine the part of the configuration that is causing the problem.

If your cluster consists of two cluster units, use this procedure to capture the configuration checksums for each unit. If your cluster consists of more than two cluster units, repeat this procedure for all cluster units that returned messages that include the 0x03 sync object.

1. Connect to each cluster unit CLI by connecting to the console port.

2. Enter the following command to turn on terminal capture.

diagnose debug enable

3. Enter the following command to stop HA synchronization.

execute ha synchronize stop

4. Enter the following command to display configuration checksums.

diagnose sys ha showcsum 1

5. Copy the output to a text file.

6. Repeat for all affected units.

7. Compare the text file from the primary unit with the text file from each cluster unit to find the checksums that do not match.

You can use a diff function to compare text files.

8. Repeat steps 4 to 7 for each checksum level:

diagnose sys ha showcsum 2

diagnose sys ha showcsum 3

diagnose sys ha showcsum 4

diagnose sys ha showcsum 5

diagnose sys ha showcsum 6

diagnose sys ha showcsum 7

diagnose sys ha showcsum 8

9. When the non-matching checksum is found, attempt to drill down further. This is possible for objects that have sub-components.

For example, you can enter the following commands:

diagnose sys ha showcsum system.global

diagnose sys ha showcsum system.interface

Generally it is the first non-matching checksum in one of the levels that is the cause of the synchronization problem.

10. Attempt to remove or change the part of the configuration that is causing the problem. You can do this by making configuration changes from the primary unit or subordinate unit CLI.

11. Enter the following commands to restart HA synchronization and stop debugging:

execute ha synchronize start

diagnose debug disable

diagnose debug reset
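The comparison of saved text files in this procedure can be done with any diff tool. As one possibility, the following Python sketch uses the standard difflib module to list only the lines that differ between two captures. The function name and the sample text are hypothetical.

```python
import difflib


def changed_lines(primary_text, subordinate_text):
    """Return the lines that differ between two saved showcsum captures.

    Lines prefixed with "- " appear only in the primary unit capture and
    lines prefixed with "+ " appear only in the subordinate unit capture.
    """
    diff = difflib.ndiff(
        primary_text.splitlines(), subordinate_text.splitlines()
    )
    return [line for line in diff if line.startswith(("- ", "+ "))]


# Shortened, made-up captures for illustration
primary_capture = "global: a0 7f\nroot: 43 72\nall: c5 90\n"
subordinate_capture = "global: a0 7f\nroot: 99 01\nall: c5 90\n"

for line in changed_lines(primary_capture, subordinate_capture):
    print(line)
```

The header line that reports is_manage_master and is_root_master always differs between the primary unit and a subordinate unit, so it can be ignored when reading the result.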

Recalculating the checksums to resolve out of sync messages

Sometimes an error can occur when checksums are being calculated by the cluster. As a result of this calculation error the CLI console could display out of sync error messages even though the cluster is otherwise operating normally. You can also sometimes see checksum calculation errors in diagnose sys ha showcsum command output when the checksums listed in the debugzone output don’t match the checksums in the checksum part of the output.

One solution to this problem could be to re-calculate the checksums. The re-calculated checksums should match and the out of sync error messages should stop appearing.

You can use the following command to re-calculate HA checksums:

diagnose sys ha csum-recalculate [<vdom-name> | global]

Just entering the command without options recalculates all checksums. You can specify a VDOM name to just recalculate the checksums for that VDOM. You can also enter global to recalculate the global checksum.

Synchronizing kernel routing tables

In a functioning cluster, the primary unit keeps all subordinate unit kernel routing tables (also called the forwarding information base, or FIB) up to date and synchronized with the primary unit. After a failover, because of these routing table updates, the new primary unit does not have to populate its kernel routing table before being able to route traffic. This gives the new primary unit time to rebuild its regular routing table after a failover.

Use the following command to view the regular routing table. This table contains all of the configured routes and routes acquired from dynamic routing protocols and so on. This routing table is not synchronized. On subordinate units this command will not produce the same output as on the primary unit.

get router info routing-table

Use the following command to view the kernel routing table (FIB). This is the list of resolved routes actually being used by the FortiOS kernel. The output of this command should be the same on the primary unit and the subordinate units.

get router info kernel

This section describes how clusters handle dynamic routing failover and also describes how to use CLI commands to control the timing of routing table updates of the subordinate unit routing tables from the primary unit.

Controlling how the FGCP synchronizes kernel routing table updates

You can use the following commands to control some of the timing settings that the FGCP uses when synchronizing routing updates from the primary unit to subordinate units and maintaining routes on the primary unit after a failover.

config system ha

set route-hold <hold_integer>

set route-ttl <ttl_integer>

set route-wait <wait_integer>

end

Change how long routes stay in a cluster unit routing table

Change the route-ttl time to control how long routes remain in a cluster unit routing table. The time to live range is 5 to 3600 seconds. The default time to live is 10 seconds.

The time to live controls how long routes remain active in a cluster unit routing table after the cluster unit becomes a primary unit. To maintain communication sessions after a cluster unit becomes a primary unit, routes remain active in the routing table for the route time to live while the new primary unit acquires new routes.

By default, route-ttl is set to 10 which may mean that only a few routes will remain in the routing table after a failover. Normally keeping route-ttl to 10 or reducing the value to 5 is acceptable because acquiring new routes usually occurs very quickly, especially if graceful restart is enabled, so only a minor delay is caused by acquiring new routes.

If the primary unit needs to acquire a very large number of routes, or if there is a delay in acquiring all routes for other reasons, the primary unit may not be able to maintain all communication sessions.

You can increase the route time to live if you find that communication sessions are lost after a failover so that the primary unit can use synchronized routes that are already in the routing table, instead of waiting to acquire new routes.

Change the time between routing updates

Change the route-hold time to change the time that the primary unit waits between sending routing table updates to subordinate units. The route hold range is 0 to 3600 seconds. The default route hold time is 10 seconds.

To avoid flooding routing table updates to subordinate units, set route-hold to a relatively long time to prevent subsequent updates from occurring too quickly. Flooding routing table updates can affect cluster performance if a great deal of routing information is synchronized between cluster units. Increasing the time between updates means that this data exchange will not have to happen so often.

The route-hold time should be coordinated with the route-wait time.

Change the time the primary unit waits after receiving a routing update

Change the route-wait time to change how long the primary unit waits after receiving routing updates before sending the updates to the subordinate units. For quick routing table updates to occur, set route-wait to a relatively short time so that the primary unit does not hold routing table changes for too long before updating the subordinate units.

The route-wait range is 0 to 3600 seconds. The default route-wait is 0 seconds.

Normally, because the route-wait time is 0 seconds the primary unit sends routing table updates to the subordinate units every time its routing table changes.

Once a routing table update is sent, the primary unit waits the route-hold time before sending the next update.

Usually routing table updates are periodic and sporadic. Subordinate units should receive these changes as soon as possible so route-wait is set to 0 seconds. route-hold can be set to a relatively long time because normally the next route update would not occur for a while.

In some cases, routing table updates can occur in bursts. A large burst of routing table updates can occur if a router or a link on a network fails or changes. When a burst of routing table updates occurs, the primary unit could flood the subordinate units with routing table updates. Flooding routing table updates can affect cluster performance if a great deal of routing information is synchronized between cluster units. Setting route-wait to a longer time reduces the frequency of additional updates and prevents the primary unit from flooding the subordinate units.
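The interaction between route-wait and route-hold can be illustrated with a small Python simulation. This is a simplified model based only on the descriptions in this section, not the actual FGCP implementation. It assumes that a route change is sent route-wait seconds after it arrives, that any changes arriving before a scheduled update are folded into that update, and that consecutive updates are at least route-hold seconds apart. The function name `sync_times` and the change times are hypothetical.

```python
def sync_times(change_times, route_wait=0, route_hold=10):
    """Return the times (in seconds) at which the primary unit would send
    routing table updates to subordinate units under the simplified model."""
    sends = []
    for t in sorted(change_times):
        if sends and t <= sends[-1]:
            # This change arrives before the already scheduled update,
            # so it is folded into that update.
            continue
        earliest = t + route_wait
        if sends:
            # Respect the minimum spacing between consecutive updates.
            earliest = max(earliest, sends[-1] + route_hold)
        sends.append(earliest)
    return sends


burst = [0, 1, 2, 3, 30]  # four changes in a burst, then one later change

print(sync_times(burst, route_wait=0, route_hold=10))  # [0, 10, 30]
print(sync_times(burst, route_wait=5, route_hold=10))  # [5, 35]
```

In this model, the default route-wait of 0 sends the first change immediately and the rest of the burst one hold period later, while a route-wait of 5 delays the first update but collapses the whole burst into a single update.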

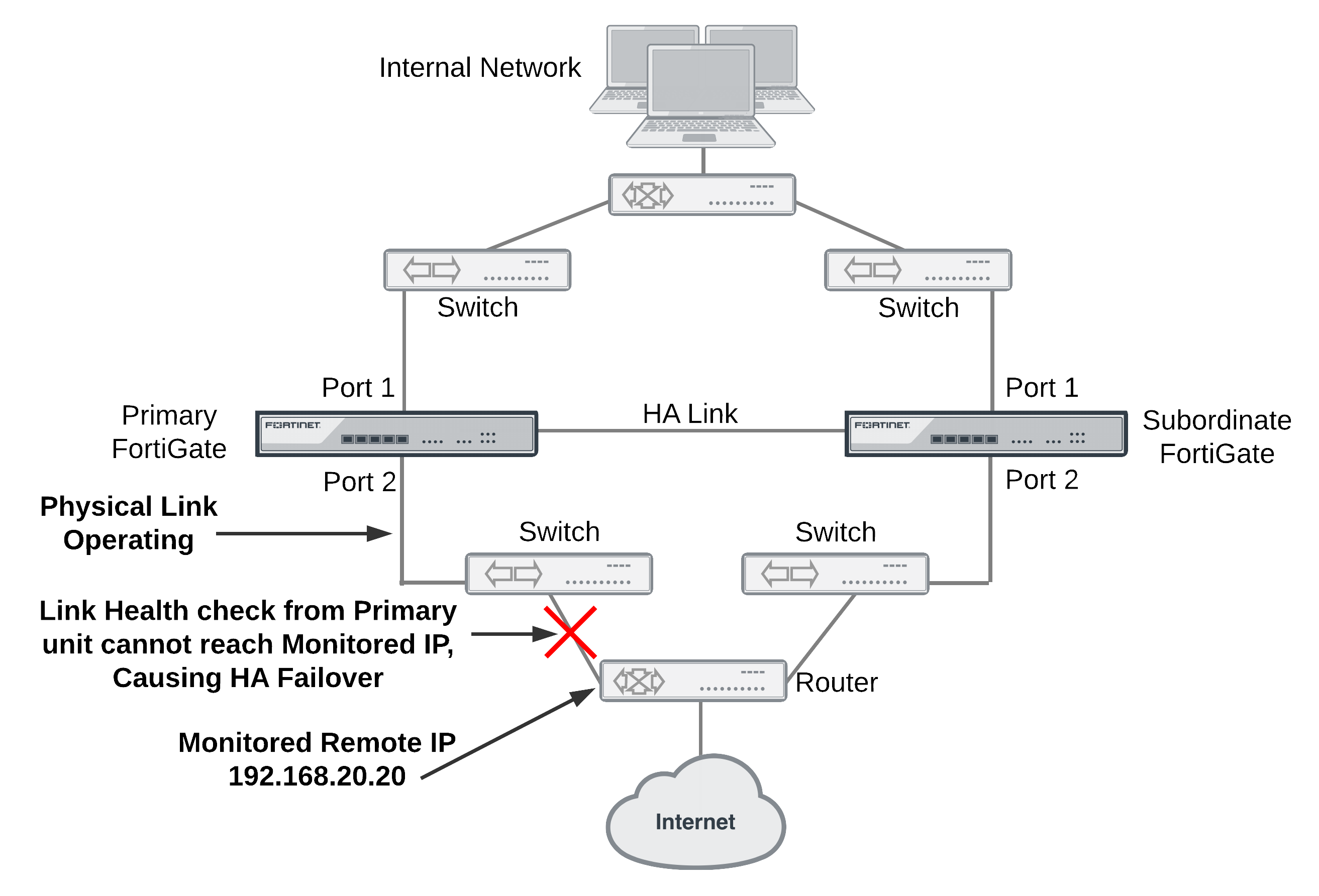

Configuring graceful restart for dynamic routing failover

When an HA failover occurs, neighbor routers will detect that the cluster has failed and remove it from the network until the routing topology stabilizes. During that time the routers may stop sending IP packets to the cluster and communication sessions that would normally be processed by the cluster may time out or be dropped. Also the new primary unit will not receive routing updates and so will not be able to build and maintain its routing database.

You can solve this problem by configuring graceful restart for the dynamic routing protocols that you are using. This section describes configuring graceful restart for OSPF and BGP.

To support graceful restart you should make sure the new primary unit keeps its synchronized routing data long enough to acquire new routing data. You should also increase the HA route time to live, route wait, and route hold values to 60 using the following CLI command:

config system ha

set route-ttl 60

set route-wait 60

set route-hold 60

end

Graceful OSPF restart

You can configure graceful restart, also called nonstop forwarding (NSF), as described in RFC 3623 (Graceful OSPF Restart) to solve the problem of dynamic routing failover. If graceful restart is enabled on neighbor routers, they will keep sending packets to the cluster following the HA failover instead of removing it from the network. The neighboring routers assume that the cluster is experiencing a graceful restart.

After the failover, the new primary unit can continue to process communication sessions using the synchronized routing data received from the failed primary unit before the failover. This gives the new primary unit time to update its routing table after the failover.

You can use the following commands to enable graceful restart or NSF on Cisco routers:

router ospf 1

log-adjacency-changes

nsf ietf helper strict-lsa-checking

If the cluster is running OSPF, use the following command to enable graceful restart for OSPF:

config router ospf

set restart-mode graceful-restart

end

Graceful BGP restart

If the cluster is running BGP, only the primary unit keeps BGP peering connections. When a failover occurs, the BGP peering needs to be reestablished. This will happen if you enable BGP graceful restart, which causes the adjacent routers to keep the routes active while the new primary unit restarts the BGP peering.

Enabling BGP graceful restart causes the FortiGate's BGP process to restart, which can temporarily disrupt traffic through the cluster. Normally you should wait for a quiet time or a maintenance period to enable BGP graceful restart.

Use the following command to enable graceful restart for BGP and set some graceful restart options.

config router bgp

set graceful-restart enable

set graceful-restart-time 120

set graceful-stalepath-time 360

set graceful-update-delay 120

end

Notifying BGP neighbors when graceful restart is enabled

You can add BGP neighbors and configure the cluster unit to notify these neighbors that it supports graceful restart.

config router bgp

config neighbor

edit <neighbor_address_Ipv4>

set capability-graceful-restart enable

end

end

Bidirectional Forwarding Detection (BFD) enabled BGP graceful restart

You can add a BFD enabled BGP neighbor as a static BFD neighbor using the following command. This example shows how to add a BFD neighbor with IP address 172.20.121.23 that is on the network connected to port4:

config router bfd

config neighbor

edit 172.20.121.23

set interface port4

end

end

The FGCP supports graceful restart of BFD enabled BGP neighbors. The config router bfd command is needed as the BGP auto-start timer is 5 seconds. After HA failover, BGP on the new primary unit has to wait for 5 seconds to connect to its neighbors, and then register BFD requests after establishing the connections. With static BFD neighbors, BFD requests and sessions can be created as soon as possible after the failover. The new command get router info bfd requests shows the BFD peer requests.

A BFD session created for a static BFD neighbor/peer request will initialize its state as "INIT" instead of "DOWN" and its detection time as bfd-required-min-rx * bfd-detect-mult milliseconds.
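As a worked example of the detection time formula above, the following Python sketch multiplies the two settings. The function name `bfd_detection_time_ms` is hypothetical, and the values 50 and 3 are example inputs chosen for illustration, not values taken from this document.

```python
def bfd_detection_time_ms(required_min_rx_ms, detect_mult):
    """Initial detection time of a BFD session created for a static
    neighbor: bfd-required-min-rx * bfd-detect-mult, in milliseconds."""
    return required_min_rx_ms * detect_mult


# Example inputs only: bfd-required-min-rx of 50 ms, bfd-detect-mult of 3.
print(bfd_detection_time_ms(50, 3))  # 150
```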

When a BFD control packet with nonzero your_discr is received, if no session can be found to match the your_discr, instead of discarding the packet, other fields in the packet, such as addressing information, are used to choose one session that was just initialized, with zero as its remote discriminator.

When a BFD session in the up state receives a control packet with zero as your_discr and down as the state, the session will change its state into down but will not notify this down event to BGP and/or other registered clients.

Synchronizing IPsec VPN SAs

The FGCP synchronizes IPsec security associations (SAs) between cluster members so that if a failover occurs, the cluster can resume IPsec sessions without having to establish new SAs. The result is improved failover performance because IPsec sessions are not interrupted to establish new SAs. Also, establishing a large number of SAs can reduce cluster performance.

The FGCP implements slightly different synchronization mechanisms for IKEv1 and IKEv2.

Synchronizing SAs for IKEv1

When an SA is synchronized to the subordinate units, the sequence number is set to the maximum sequence number. After a failover, all inbound traffic that connects with the new primary unit and uses the SA will be accepted without needing to re-key. However, the first outbound packet to use the SA causes the sequence number to overflow and so causes the new primary unit to re-key the SA.
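The outbound behavior described above can be sketched in Python. This is a toy model, not FortiOS code. It assumes a 32-bit sequence number (no extended sequence numbers) and represents the re-key as a simple counter reset, whereas a real re-key is an IKE negotiation with the peer. The class name `OutboundSA` and its fields are hypothetical, and inbound processing is not modeled.

```python
MAX_SEQ = 2**32 - 1  # assumption: 32-bit sequence number space


class OutboundSA:
    """Toy model of the outbound side of a synchronized IPsec SA."""

    def __init__(self, seq=0):
        self.seq = seq    # last sequence number used
        self.rekeys = 0   # number of re-keys triggered so far

    def next_seq(self):
        """Return the sequence number for the next outbound packet,
        re-keying first if the counter would overflow."""
        if self.seq >= MAX_SEQ:
            self.rekeys += 1  # stands in for negotiating a new SA
            self.seq = 0
        self.seq += 1
        return self.seq


# The SA as stored on a subordinate unit: sequence number at the maximum.
synced = OutboundSA(seq=MAX_SEQ)

# After a failover, the first outbound packet forces a re-key.
print(synced.next_seq(), synced.rekeys)  # 1 1
```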

Please note the following:

- The cluster synchronizes all IPsec SAs.

- IPsec SAs are not synchronized until the IKE process has finished synchronizing the ISAKMP SAs. This is required for dialup tunnels since it is the synchronizing of the ISAKMP SA that creates the dialup tunnel.

- A dialup interface is created as soon as the phase1 is complete. This ensures that when HA synchronizes phase1 information the dialup name is included.

- If the IKE process re-starts for any reason it deletes any dialup tunnels that exist. This forces the peer to re-key them.

- IPsec SA deletion happens immediately. Routes associated with a dialup tunnel that is being deleted are cleaned up synchronously as part of the delete, rather than waiting for the SA hard-expiry.