Example virtual clustering with two VDOMs and VDOM partitioning

This section describes how to configure the example virtual clustering configuration shown below. This configuration includes two virtual domains, root and Eng_vdm, and uses VDOM partitioning to send all root VDOM traffic to FGT_ha_1 and all Eng_vdm VDOM traffic to FGT_ha_2. Traffic from the internal network and the engineering network is therefore distributed between the two FortiGates in the virtual cluster. If one of the cluster units fails, the remaining unit processes traffic for both VDOMs.

The procedures in this example describe some of many possible sequences of steps for configuring virtual clustering. For simplicity, many of these procedures assume that you are starting with new FortiGates set to the factory default configuration. However, this is not a requirement for a successful HA deployment. FortiGate HA is flexible enough to support a successful configuration from many different starting points.

Example virtual clustering network topology

The following figure shows a typical FortiGate HA virtual cluster consisting of two FortiGates (FGT_ha_1 and FGT_ha_2) connected to an internal network, an engineering network, and the Internet. To simplify the diagram, the heartbeat connections are not shown.

The traffic from the internal network is processed by the root VDOM, which includes the port1 and port2 interfaces. The traffic from the engineering network is processed by the Eng_vdm VDOM, which includes the port5 and port6 interfaces. VDOM partitioning is configured so that all traffic from the internal network is processed by FGT_ha_1 and all traffic from the engineering network is processed by FGT_ha_2.

This virtual cluster uses the default FortiGate heartbeat interfaces (port3 and port4).

Example virtual cluster showing VDOM partitioning

General configuration steps

This section includes GUI and CLI procedures. These procedures assume that the FortiGates are running the same FortiOS firmware build and are set to the factory default configuration.

- Apply licenses to the FortiGates that will become the cluster.

- Configure the FortiGates for HA operation.

- Optionally change each unit’s host name.

- Configure HA.

- Connect the cluster to the network.

- Configure VDOM settings for the cluster:

- Enable multiple VDOMs.

- Add the Eng_vdm VDOM.

- Add port5 and port6 to the Eng_vdm VDOM.

- Configure VDOM partitioning.

- Confirm that the cluster units are operating as a virtual cluster and add basic configuration settings to the cluster.

- View cluster status from the GUI or CLI.

- Add a password for the admin administrative account.

- Change the IP addresses and netmasks of the port1, port2, port5, and port6 interfaces.

- Add a default route to each VDOM.

Configuring virtual clustering with two VDOMs and VDOM partitioning - GUI

These procedures assume you are starting with two FortiGates with factory default settings.

To configure the FortiGates for HA operation

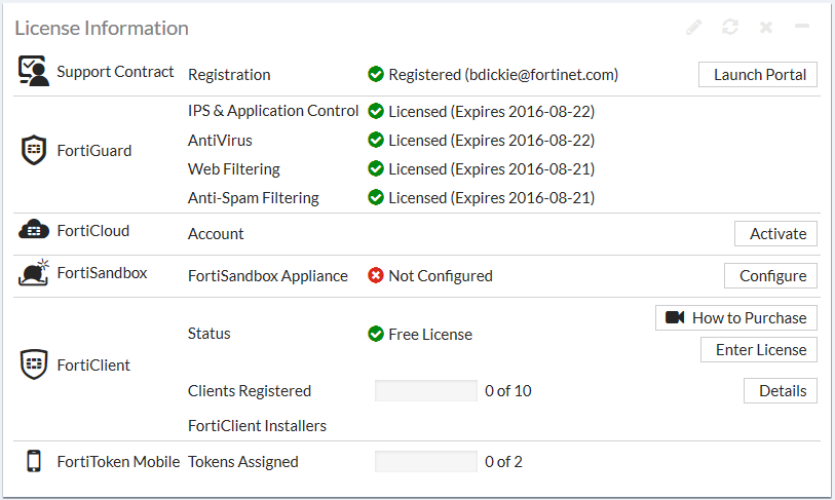

- Register and apply licenses to the FortiGate. This includes FortiCloud activation and FortiClient licensing, and entering a license key if you purchased more than 10 Virtual Domains (VDOMs). All of the FortiGates in a cluster must have the same level of licensing.

- You can also install any third-party certificates on the primary FortiGate before forming the cluster. Once the cluster is formed third-party certificates are synchronized to the backup FortiGate.

We recommend that you add FortiToken licenses and FortiTokens to the primary unit after the cluster has formed.

- On the System Information dashboard widget, beside Host Name select Change.

- Enter a new Host Name for this FortiGate.

| New Name | FGT_ha_1 |

- Select OK.

- Go to System > HA and change the following settings.

| Mode | Active-Passive |

| Group Name | vexample2.com |

| Password | vHA_pass_2 |

- Select OK.

The FortiGate negotiates to establish an HA cluster. When you select OK you may temporarily lose connectivity with the FortiGate as the HA cluster negotiates and the FGCP changes the MAC address of the FortiGate interfaces (see Cluster virtual MAC addresses). The MAC addresses of the FortiGate interfaces change to the following virtual MAC addresses:

- port1 interface virtual MAC: 00-09-0f-09-00-00

- port10 interface virtual MAC: 00-09-0f-09-00-01

- port11 interface virtual MAC: 00-09-0f-09-00-02

- port12 interface virtual MAC: 00-09-0f-09-00-03

- port13 interface virtual MAC: 00-09-0f-09-00-04

- port14 interface virtual MAC: 00-09-0f-09-00-05

- port15 interface virtual MAC: 00-09-0f-09-00-06

- port16 interface virtual MAC: 00-09-0f-09-00-07

- port17 interface virtual MAC: 00-09-0f-09-00-08

- port18 interface virtual MAC: 00-09-0f-09-00-09

- port19 interface virtual MAC: 00-09-0f-09-00-0a

- port2 interface virtual MAC: 00-09-0f-09-00-0b

- port20 interface virtual MAC: 00-09-0f-09-00-0c

- port3 interface virtual MAC: 00-09-0f-09-00-0d

- port4 interface virtual MAC: 00-09-0f-09-00-0e

- port5 interface virtual MAC: 00-09-0f-09-00-0f

- port6 interface virtual MAC: 00-09-0f-09-00-10

- port7 interface virtual MAC: 00-09-0f-09-00-11

- port8 interface virtual MAC: 00-09-0f-09-00-12

- port9 interface virtual MAC: 00-09-0f-09-00-13

To be able to reconnect sooner, you can update the ARP table of your management PC by deleting the ARP table entry for the FortiGate (or just deleting all ARP table entries). You may be able to delete the ARP table of your management PC from a command prompt using a command similar to arp -d.

You can use the get hardware nic (or diagnose hardware deviceinfo nic) CLI command to view the virtual MAC address of any FortiGate interface. For example, use the following command to view the port1 interface virtual MAC address (Current_HWaddr) and the port1 permanent MAC address (Permanent_HWaddr):

get hardware nic port1

.

.

.

MAC: 00:09:0f:09:00:00

Permanent_HWaddr: 02:09:0f:78:18:c9

.

.

.

- Power off the first FortiGate.

- Repeat these steps for the second FortiGate.

Set the second FortiGate host name to:

| New Name | FGT_ha_2 |
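
The virtual MAC addresses listed in this procedure appear to follow a simple pattern: a fixed 00-09-0f-09 prefix, a fifth byte that is 00 throughout, and a final byte that counts the interfaces in plain string sort order (which is why port10 follows port1). The Python sketch below reproduces the listing under that reading. Treating the fifth byte as an HA group ID of 0 is an assumption inferred from the listing, and the function name virtual_macs is hypothetical.

```python
# Sketch: reproduce the virtual MAC listing from this procedure.
# Assumption: address = <prefix>-<group id>-<interface index>, where interfaces
# are indexed in plain string sort order and the group ID is 0. Both points are
# inferred from the listing, not taken from a specification.

def virtual_macs(interfaces, group_id=0, prefix="00-09-0f-09"):
    """Map each interface name to its assumed FGCP virtual MAC address."""
    macs = {}
    for index, name in enumerate(sorted(interfaces)):
        macs[name] = f"{prefix}-{group_id:02x}-{index:02x}"
    return macs


ports = [f"port{n}" for n in range(1, 21)]  # port1 .. port20, as in the listing
macs = virtual_macs(ports)

for name in ("port1", "port2", "port5", "port9"):
    print(name, macs[name])
```

Comparing the result with the output of get hardware nic on a cluster unit is a quick way to confirm whether the pattern holds for your model and HA settings.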

To connect the cluster to the network

- Connect the port1 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the Internet.

- Connect the port5 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the Internet.

You could use the same switch for the port1 and port5 interfaces.

- Connect the port2 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the internal network.

- Connect the port6 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the engineering network.

- Connect the port3 interfaces of the cluster units together. You can use a crossover Ethernet cable or regular Ethernet cables and a switch.

- Connect the port4 interfaces of the cluster units together. You can use a crossover Ethernet cable or regular Ethernet cables and a switch.

- Power on the cluster units.

The units start and negotiate to choose the primary unit and the subordinate unit. This negotiation occurs with no user intervention.

When negotiation is complete you can continue.

To configure VDOM settings for the cluster

- Log into the GUI.

- On the System Information dashboard widget, beside Virtual Domain select Enable.

- Select OK and then log back into the GUI.

- Go to System > VDOM and select Create New to add a new VDOM.

| Virtual Domain | Eng_vdm |

- Go to Network > Interfaces.

- Edit the port5 interface, add it to the Eng_vdm VDOM and configure other interface settings:

| Alias | Engineering_external |

| Virtual Domain | Eng_vdm |

| IP/Netmask | 172.20.120.143/24 |

- Select OK.

- Edit the port6 interface, add it to the Eng_vdm VDOM and configure other interface settings:

| Alias | Engineering_internal |

| Virtual Domain | Eng_vdm |

| IP/Netmask | 10.120.101.100/24 |

| Administrative Access | HTTPS, PING, SSH |

- Select OK.

To add a default route to each VDOM

- Use the VDOM menu to enter the root VDOM.

- Go to Network > Static Routes.

- Change the default route.

| Destination | 0.0.0.0/0.0.0.0 |

| Device | port1 |

| Gateway | 172.20.120.2 |

| Administrative Distance | 10 |

- Select Global.

- Enter the Eng_vdm VDOM.

- Go to Network > Static Routes.

- Change the default route.

| Destination IP/Mask | 0.0.0.0/0.0.0.0 |

| Device | port5 |

| Gateway | 172.20.120.2 |

| Distance | 10 |

To configure VDOM partitioning

- Go to System > HA.

The cluster members list shows two cluster units in Virtual Cluster 1.

- Edit the cluster unit with the Role of MASTER.

- Change VDOM partitioning to move the Eng_vdm to the Virtual Cluster 2 list.

- Select OK.

- Change the Virtual Cluster 1 and Virtual Cluster 2 device priorities for each cluster unit to the following:

| Host Name | Virtual Cluster 1 device priority | Virtual Cluster 2 device priority |

| FGT_ha_1 | 200 | 100 |

| FGT_ha_2 | 100 | 200 |

You can do this by editing the HA configurations of each cluster unit in the cluster members list and changing device priorities.

Since the device priority of Virtual Cluster 1 is highest for FGT_ha_1 and since the root VDOM is in Virtual Cluster 1, all traffic for the root VDOM is processed by FGT_ha_1.

Since the device priority of Virtual Cluster 2 is highest for FGT_ha_2 and since the Eng_vdm VDOM is in Virtual Cluster 2, all traffic for the Eng_vdm VDOM is processed by FGT_ha_2.
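
The effect of these device priorities can be modeled in a few lines of Python. This is a deliberately simplified sketch: it considers only device priority, breaks ties by highest serial number (as described for the unpartitioned cluster in the CLI procedure of this example), and ignores other factors such as monitored interfaces. The data layout and the function name primary_unit are hypothetical; the serial numbers are the ones shown in the CLI output of this example.

```python
# Simplified model of primary unit selection per virtual cluster.
# Assumption: highest device priority wins, ties go to the highest serial
# number, and a failed unit is simply excluded from the selection.

def primary_unit(units, vcluster):
    """Return the host name expected to be primary for the given virtual cluster."""
    candidates = [unit for unit in units if unit["up"]]
    if not candidates:
        return None
    best = max(candidates, key=lambda unit: (unit["priority"][vcluster], unit["serial"]))
    return best["host"]


units = [
    {"host": "FGT_ha_1", "serial": "FG600B3908600705", "up": True,
     "priority": {1: 200, 2: 100}},
    {"host": "FGT_ha_2", "serial": "FG600B3908600825", "up": True,
     "priority": {1: 100, 2: 200}},
]

normal = {vc: primary_unit(units, vc) for vc in (1, 2)}
print(normal)

# Simulate a failure of FGT_ha_1: the remaining unit takes over both virtual clusters.
units[0]["up"] = False
after_failure = {vc: primary_unit(units, vc) for vc in (1, 2)}
print(after_failure)
```

With both units up, each virtual cluster has a different primary unit, which is what distributes root and Eng_vdm traffic between the two FortiGates.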

To view cluster status and verify the VDOM partitioning configuration

- Log into the GUI.

- Go to System > HA.

The cluster members list should show the following:

- Virtual Cluster 1 contains the root VDOM.

- FGT_ha_1 is the primary unit for Virtual Cluster 1.

- Virtual Cluster 2 contains the Eng_vdm VDOM.

- FGT_ha_2 is the primary unit for Virtual Cluster 2.

To test the VDOM partitioning configuration

You can do the following to confirm that traffic for the root VDOM is processed by FGT_ha_1 and traffic for the Eng_vdm is processed by FGT_ha_2.

- Log into the GUI by connecting to port2 using IP address 10.11.101.100.

You will log into FGT_ha_1 because port2 is in the root VDOM and all traffic for this VDOM is processed by FGT_ha_1. You can confirm that you have logged into FGT_ha_1 by checking the host name on the System Information dashboard widget.

- Log into the GUI by connecting to port6 using IP address 10.120.101.100.

You will log into FGT_ha_2 because port6 is in the Eng_vdm VDOM and all traffic for this VDOM is processed by FGT_ha_2.

- Add security policies to the root virtual domain that allow communication from the internal network to the Internet and connect to the Internet from the internal network.

- Log into the GUI and go to System > HA and select View HA Statistics.

The statistics display shows more active sessions, total packets, network utilization, and total bytes for the FGT_ha_1 unit.

- Add security policies to the Eng_vdm virtual domain that allow communication from the engineering network to the Internet and connect to the Internet from the engineering network.

- Log into the GUI and go to System > HA and select View HA Statistics.

The statistics display shows more active sessions, total packets, network utilization, and total bytes for the FGT_ha_2 unit.

Configuring virtual clustering with two VDOMs and VDOM partitioning - CLI

These procedures assume you are starting with two FortiGates with factory default settings.

To configure the FortiGates for HA operation

- Register and apply licenses to the FortiGate. This includes FortiCloud activation and FortiClient licensing, and entering a license key if you purchased more than 10 Virtual Domains (VDOMs). All of the FortiGates in a cluster must have the same level of licensing.

- You can also install any third-party certificates on the primary FortiGate before forming the cluster. Once the cluster is formed third-party certificates are synchronized to the backup FortiGate.

We recommend that you add FortiToken licenses and FortiTokens to the primary unit after the cluster has formed.

- Change the host name for this FortiGate:

config system global

set hostname FGT_ha_1

end

- Configure HA settings.

config system ha

set mode a-p

set group-name vexample2.com

set password vHA_pass_2

end

The FortiGate negotiates to establish an HA cluster. You may temporarily lose connectivity with the FortiGate as the HA cluster negotiates and the FGCP changes the MAC address of the FortiGate interfaces (see Cluster virtual MAC addresses). The MAC addresses of the FortiGate interfaces change to the following virtual MAC addresses:

- port1 interface virtual MAC: 00-09-0f-09-00-00

- port10 interface virtual MAC: 00-09-0f-09-00-01

- port11 interface virtual MAC: 00-09-0f-09-00-02

- port12 interface virtual MAC: 00-09-0f-09-00-03

- port13 interface virtual MAC: 00-09-0f-09-00-04

- port14 interface virtual MAC: 00-09-0f-09-00-05

- port15 interface virtual MAC: 00-09-0f-09-00-06

- port16 interface virtual MAC: 00-09-0f-09-00-07

- port17 interface virtual MAC: 00-09-0f-09-00-08

- port18 interface virtual MAC: 00-09-0f-09-00-09

- port19 interface virtual MAC: 00-09-0f-09-00-0a

- port2 interface virtual MAC: 00-09-0f-09-00-0b

- port20 interface virtual MAC: 00-09-0f-09-00-0c

- port3 interface virtual MAC: 00-09-0f-09-00-0d

- port4 interface virtual MAC: 00-09-0f-09-00-0e

- port5 interface virtual MAC: 00-09-0f-09-00-0f

- port6 interface virtual MAC: 00-09-0f-09-00-10

- port7 interface virtual MAC: 00-09-0f-09-00-11

- port8 interface virtual MAC: 00-09-0f-09-00-12

- port9 interface virtual MAC: 00-09-0f-09-00-13

To be able to reconnect sooner, you can update the ARP table of your management PC by deleting the ARP table entry for the FortiGate (or just deleting all ARP table entries). You may be able to delete the ARP table of your management PC from a command prompt using a command similar to arp -d.

You can use the get hardware nic (or diagnose hardware deviceinfo nic) CLI command to view the virtual MAC address of any FortiGate interface. For example, use the following command to view the port1 interface virtual MAC address (Current_HWaddr) and the port1 permanent MAC address (Permanent_HWaddr):

get hardware nic port1

.

.

.

MAC: 00:09:0f:09:00:00

Permanent_HWaddr: 02:09:0f:78:18:c9

.

.

.

- Power off the first FortiGate.

- Repeat these steps for the second FortiGate.

Set the other FortiGate host name to:

config system global

set hostname FGT_ha_2

end
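
If you capture the get hardware nic output from a terminal session, a short script can confirm that an interface has switched to its virtual MAC address. The Python sketch below assumes the output contains address lines formatted like the sample in this procedure (a MAC: or Current_HWaddr line and a Permanent_HWaddr: line); the exact field names may differ between models, and the function name parse_hwaddrs is hypothetical.

```python
import re

# Assumption: address lines look like "MAC: 00:09:0f:09:00:00" or
# "Permanent_HWaddr: 02:09:0f:78:18:c9", as in the sample output above.
HWADDR_LINE = re.compile(
    r"^\s*(MAC|Current_HWaddr|Permanent_HWaddr):?\s+"
    r"((?:[0-9a-f]{2}:){5}[0-9a-f]{2})\s*$",
    re.IGNORECASE,
)


def parse_hwaddrs(output):
    """Return a dict of the hardware address fields found in the output."""
    addrs = {}
    for line in output.splitlines():
        match = HWADDR_LINE.match(line)
        if match:
            addrs[match.group(1)] = match.group(2).lower()
    return addrs


SAMPLE = """.
MAC: 00:09:0f:09:00:00
Permanent_HWaddr: 02:09:0f:78:18:c9
."""

addrs = parse_hwaddrs(SAMPLE)
current = addrs.get("MAC") or addrs.get("Current_HWaddr")

# A current address that differs from the permanent one and carries the
# 00:09:0f:09 prefix suggests that the FGCP virtual MAC is in use.
uses_virtual_mac = (
    current != addrs["Permanent_HWaddr"] and current.startswith("00:09:0f:09")
)
print(current, uses_virtual_mac)
```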

To connect the cluster to the network

- Connect the port1 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the Internet.

- Connect the port5 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the Internet.

You could use the same switch for port1 and port5.

- Connect the port2 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the internal network.

- Connect the port6 interfaces of FGT_ha_1 and FGT_ha_2 to a switch connected to the engineering network.

- Connect the port3 interfaces of the cluster units together. You can use a crossover Ethernet cable or regular Ethernet cables and a switch.

- Connect the port4 interfaces of the cluster units together. You can use a crossover Ethernet cable or regular Ethernet cables and a switch.

- Power on the cluster units.

The units start and negotiate to choose the primary unit and the subordinate unit. This negotiation occurs with no user intervention.

When negotiation is complete you can continue.

To configure VDOM settings for the cluster

- Log into the CLI.

- Enter the following command to enable multiple VDOMs for the cluster.

config system global

set vdom-admin enable

end

- Log back into the CLI.

- Enter the following command to add the Eng_vdm VDOM:

config vdom

edit Eng_vdm

end

- Edit the port5 interface, add it to the Eng_vdm VDOM and configure other interface settings:

config global

config system interface

edit port5

set vdom Eng_vdm

set alias Engineering_external

set ip 172.20.120.143/24

next

edit port6

set vdom Eng_vdm

set alias Engineering_internal

set ip 10.120.101.100/24

end

end

To add a default route to each VDOM

- Enter the following command to add default routes to the root and Eng_vdm VDOMs.

config vdom

edit root

config router static

edit 1

set dst 0.0.0.0/0.0.0.0

set gateway 172.20.120.2

set device port1

end

next

edit Eng_vdm

config router static

edit 1

set dst 0.0.0.0/0.0.0.0

set gateway 172.20.120.2

set device port5

end

end
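
A default route only works if its gateway is on the subnet of the outgoing interface, so it can be worth checking the addresses before entering them. The Python sketch below uses the standard ipaddress module with the port5 address from the GUI procedure (172.20.120.143/24) and the gateway used in this example (172.20.120.2). The function name gateway_on_link is hypothetical, and the second address is a made-up mistyped value included only to show a failing check.

```python
import ipaddress


def gateway_on_link(interface_cidr, gateway):
    """Return True if the gateway lies inside the interface's connected subnet."""
    interface = ipaddress.ip_interface(interface_cidr)
    return ipaddress.ip_address(gateway) in interface.network


# port5 address and default gateway from this example.
ok = gateway_on_link("172.20.120.143/24", "172.20.120.2")

# Hypothetical typo in the third octet: the gateway is no longer on-link.
typo = gateway_on_link("172.20.12.143/24", "172.20.120.2")

print(ok, typo)
```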

To configure VDOM partitioning

- Enter the get system ha status command to view cluster unit status:

For example, from the FGT_ha_2 cluster unit CLI:

config global

get system ha status

.

.

.

number of vcluster: 1

vcluster 1: work 169.254.0.1

Master:0 FG600B3908600825

Slave :1 FG600B3908600705

This command output shows that VDOM partitioning has not been configured because only virtual cluster 1 is shown. The command output also shows that FGT_ha_2 is the primary unit for the cluster and for virtual cluster 1 because this cluster unit has the highest serial number.

- Enter the following commands to configure VDOM partitioning:

config global

config system ha

set vcluster2 enable

config secondary-vcluster

set vdom Eng_vdm

end

end

end

- Enter the get system ha status command to view cluster unit status:

For example, from the FGT_ha_2 cluster unit CLI:

config global

get system ha status

.

.

.

number of vcluster: 2

vcluster 1: work 169.254.0.1

Master:0 FG600B3908600825

Slave :1 FG600B3908600705

vcluster 2: work 169.254.0.1

Master:0 FG600B3908600825

Slave :1 FG600B3908600705

This command output shows that VDOM partitioning has been configured because both virtual cluster 1 and virtual cluster 2 are visible. However, the configuration is not complete because FGT_ha_2 is the primary unit for both virtual clusters. The command output shows this because under both vcluster entries the Master entry shows FG600B3908600825, which is the serial number of FGT_ha_2. As a result of this configuration, FGT_ha_2 processes traffic for both VDOMs and FGT_ha_1 does not process any traffic.

- Change the Virtual Cluster 1 and Virtual Cluster 2 device priorities for each cluster unit so that FGT_ha_1 processes virtual cluster 1 traffic and FGT_ha_2 processes virtual cluster 2 traffic.

Since the root VDOM is in virtual cluster 1 and the Eng_vdm VDOM is in virtual cluster 2 the result of this configuration will be that FGT_ha_1 will process all root VDOM traffic and FGT_ha_2 will process all Eng_vdm traffic. You make this happen by changing the cluster unit device priorities for each virtual cluster. You could use the following settings:

| Host Name | Virtual Cluster 1 device priority | Virtual Cluster 2 device priority |

| FGT_ha_1 | 200 | 100 |

| FGT_ha_2 | 100 | 200 |

Since the device priority is not synchronized you can edit the device priorities of each virtual cluster on each FortiGate separately. To do this:

- Log into the CLI and note the FortiGate you have actually logged into (for example, by checking the host name displayed in the CLI prompt).

- Change the virtual cluster 1 and 2 device priorities for this cluster unit.

- Then use the execute ha manage command to log into the other cluster unit CLI and set its virtual cluster 1 and 2 device priorities.

Enter the following commands from the FGT_ha_1 cluster unit CLI:

config global

config system ha

set priority 200

config secondary-vcluster

set priority 100

end

end

end

Enter the following commands from the FGT_ha_2 cluster unit CLI:

config global

config system ha

set priority 100

config secondary-vcluster

set priority 200

end

end

end

The cluster may renegotiate during this step, resulting in a temporary loss of connection to the CLI and a temporary service interruption.

Since the device priority of Virtual Cluster 1 is highest for FGT_ha_1 and since the root VDOM is in Virtual Cluster 1, all traffic for the root VDOM is processed by FGT_ha_1.

Since the device priority of Virtual Cluster 2 is highest for FGT_ha_2 and since the Eng_vdm VDOM is in Virtual Cluster 2, all traffic for the Eng_vdm VDOM is processed by FGT_ha_2.
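
Because the device priorities have to be entered separately on each unit, it can help to generate the per-unit commands from the priority table rather than typing them twice. The Python sketch below only rearranges the commands already shown in this procedure; the PRIORITIES dictionary and the function name priority_commands are hypothetical helpers, not part of FortiOS.

```python
# Device priorities from the table above:
# host name -> (virtual cluster 1 priority, virtual cluster 2 priority).
PRIORITIES = {
    "FGT_ha_1": (200, 100),
    "FGT_ha_2": (100, 200),
}


def priority_commands(vc1_priority, vc2_priority):
    """Render the HA priority commands from this procedure for one cluster unit."""
    return "\n".join([
        "config global",
        "config system ha",
        f"set priority {vc1_priority}",
        "config secondary-vcluster",
        f"set priority {vc2_priority}",
        "end",
        "end",
        "end",
    ])


for host, (vc1, vc2) in PRIORITIES.items():
    print(f"--- enter from the {host} CLI ---")
    print(priority_commands(vc1, vc2))
```

Each rendered block still has to be entered on the unit it is meant for, for example after switching units with execute ha manage.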

To verify the VDOM partitioning configuration

- Log into the FGT_ha_2 cluster unit CLI and enter the following command:

config global

get system ha status

.

.

.

number of vcluster: 2

vcluster 1: standby 169.254.0.2

Slave :1 FG600B3908600825

Master:0 FG600B3908600705

vcluster 2: work 169.254.0.1

Master:0 FG600B3908600825

Slave :1 FG600B3908600705

The command output shows that FGT_ha_1 is the primary unit for virtual cluster 1 (because the command output shows the Master of virtual cluster 1 is the serial number of FGT_ha_1) and that FGT_ha_2 is the primary unit for virtual cluster 2.

If you enter the same command from the FGT_ha_1 CLI, the same information is displayed but in a different order. The command always displays the status of the cluster unit that you are logged into first.

config global

get system ha status

.

.

.

number of vcluster: 2

vcluster 1: work 169.254.0.2

Master:0 FG600B3908600705

Slave :1 FG600B3908600825

vcluster 2: standby 169.254.0.1

Slave :1 FG600B3908600705

Master:0 FG600B3908600825
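
Reading the Master entries by eye works for two units, but the same check can be scripted. The Python sketch below parses text shaped like the get system ha status excerpts in this example and reports the primary unit of each virtual cluster. It assumes the vcluster and Master line formats shown above; the serial-to-host mapping comes from this example, and the function name vcluster_primaries is hypothetical.

```python
import re

# Serial numbers and host names as used in this example.
SERIAL_TO_HOST = {
    "FG600B3908600705": "FGT_ha_1",
    "FG600B3908600825": "FGT_ha_2",
}


def vcluster_primaries(output):
    """Return {virtual cluster number: serial number of its Master entry}."""
    primaries = {}
    current = None
    for raw_line in output.splitlines():
        line = raw_line.strip()
        header = re.match(r"vcluster (\d+):", line)
        if header:
            current = int(header.group(1))
            continue
        master = re.match(r"Master\s*:\s*\d+\s+(\S+)", line)
        if master and current is not None:
            primaries[current] = master.group(1)
    return primaries


# Excerpt as displayed from the FGT_ha_2 CLI after priorities are set.
SAMPLE = """number of vcluster: 2
vcluster 1: standby 169.254.0.2
Slave :1 FG600B3908600825
Master:0 FG600B3908600705
vcluster 2: work 169.254.0.1
Master:0 FG600B3908600825
Slave :1 FG600B3908600705"""

primaries = vcluster_primaries(SAMPLE)
hosts = {vc: SERIAL_TO_HOST.get(serial, serial) for vc, serial in primaries.items()}
print(hosts)

# VDOM partitioning is only effective when the two primaries differ.
partitioned = len(set(primaries.values())) == 2
print(partitioned)
```

Because the parser keys on the Master line rather than on line order, it gives the same result for output captured from either cluster unit.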

To test the VDOM partitioning configuration

You can do the following to confirm that traffic for the root VDOM is processed by FGT_ha_1 and traffic for the Eng_vdm is processed by FGT_ha_2. These steps assume the cluster is operating correctly.

- Log into the CLI by connecting to port2 using IP address 10.11.101.100.

You will log into FGT_ha_1 because port2 is in the root VDOM and all traffic for this VDOM is processed by FGT_ha_1. You can confirm that you have logged into FGT_ha_1 by checking the host name in the CLI prompt. Also, the get system status command displays the status of the FGT_ha_1 cluster unit.

- Log into the GUI or CLI by connecting to port6 using IP address 10.120.101.100.

You will log into FGT_ha_2 because port6 is in the Eng_vdm VDOM and all traffic for this VDOM is processed by FGT_ha_2.

- Add security policies to the root virtual domain that allow communication from the internal network to the Internet and connect to the Internet from the internal network.

- Log into the GUI and go to System > HA > View HA Statistics.

The statistics display shows more active sessions, total packets, network utilization, and total bytes for the FGT_ha_1 unit.

- Add security policies to the Eng_vdm virtual domain that allow communication from the engineering network to the Internet and connect to the Internet from the engineering network.

- Log into the GUI and go to System > HA > View HA Statistics.

The statistics display shows more active sessions, total packets, network utilization, and total bytes for the FGT_ha_2 unit.